Project Name: maps

Type of quota increase requested: disk

Reason: Setting up a user-supported OSMdb mirror

Per T285668, the current WMF-maintained OSMdb that is available to Cloud VPS projects is out of date. My queries show that it reflects, at best, the state from half a year ago, with possible gaps in the history before that.

In that ticket, a DB hosted within the maps project was suggested. This ticket takes the admins up on that proposal :-). I'd be happy to give this a shot, and I'd try to find some accomplices on wiki.

I suspect that I'm the #1 user of this DB anyway! I'm creating tiles for the WikiMiniAtlas, both server-side rendered tiles and JSON tiles with client-side rendering at high resolutions. If I had responsibility for the DB server, I could make sure that my tools work with it properly (and I'd have to feel the pain if they cause issues... if that isn't a great motivator, I don't know what is).

So, here it goes...

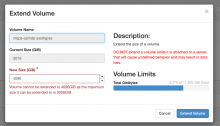

I request a disk quota increase of 3 TB for a volume to store an OSM database replica.

Attachment: on-demand rendered WMA tiles in the past few weeks: