This task is the application microtask for T302237.

Overview

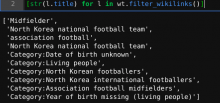

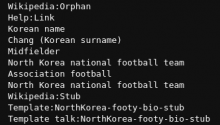

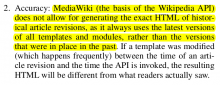

For this task, you're being asked to complete this notebook that explores the difference between two formats of Wikipedia articles (the raw wikitext and parsed HTML): https://public.paws.wmcloud.org/User:Isaac_(WMF)/Outreachy%20Summer%202022/Wikipedia_HTML_Dumps.ipynb
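To make the difference between the two formats concrete, here is a minimal sketch (not taken from the notebook) that extracts link targets from the same sentence written as wikitext and as parsed HTML. The sample strings and the helper names `wikitext_link_targets` and `html_link_targets` are hypothetical and made up for illustration. The HTML snippet assumes the Parsoid-style convention of marking internal links with `rel="mw:WikiLink"`; check the dumps themselves before relying on that.

```python
import re
from html.parser import HTMLParser

# Hypothetical one-sentence "article" in both formats (made up for illustration).
WIKITEXT = (
    "'''Python''' is a [[programming language]] "
    "created by [[Guido van Rossum|Guido]]."
)
HTML = (
    '<p><b>Python</b> is a '
    '<a rel="mw:WikiLink" href="./Programming_language" '
    'title="Programming language">programming language</a>'
    ' created by '
    '<a rel="mw:WikiLink" href="./Guido_van_Rossum" '
    'title="Guido van Rossum">Guido</a>.</p>'
)

# Matches [[target]] and [[target|label]]; captures only the target part.
WIKILINK_RE = re.compile(r"\[\[([^\[\]|]+)(?:\|[^\[\]]*)?\]\]")


def wikitext_link_targets(text):
    """Return link targets exactly as typed in the wikitext."""
    return [match.group(1).strip() for match in WIKILINK_RE.finditer(text)]


class LinkCollector(HTMLParser):
    """Collect the title attribute of <a> tags marked as internal wiki links."""

    def __init__(self):
        super().__init__()
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        # Assumption: internal links carry rel="mw:WikiLink" and a title.
        if attributes.get("rel") == "mw:WikiLink" and "title" in attributes:
            self.titles.append(attributes["title"])


def html_link_targets(html):
    """Return link targets as resolved in the parsed HTML."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.titles


if __name__ == "__main__":
    print(wikitext_link_targets(WIKITEXT))
    print(html_link_targets(HTML))
```

In this toy example the wikitext keeps the target as the editor typed it (`programming language`), while the HTML carries the resolved page title (`Programming language`). Differences of this kind are what the notebook asks you to explore on real data.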

Using your knowledge of Python and data analysis, do your best to complete the notebook. Imagine that your audience for the notebook is people who are new to Wikimedia data analysis (very possibly like you before starting this task) and provide lots of details to explain what you are doing and seeing in the data.

The full Outreachy project will involve more comprehensive coding than what is being asked for here, with support from your mentors (and some opportunities for additional explorations as desired). This task will introduce some of the basic concepts and give us a sense of your Python skills, how well you work with new data, how you document your code, and how you describe your thinking and results. We are not expecting perfection; just do your best and explain what you're doing and why! For inspiration, see this example of working with Wikipedia edit tag data and this Python library created by a past Outreachy participant.

Set-up

- Make sure that you can log in to the PAWS service with your wiki account: https://paws.wmflabs.org/paws/hub

- Using this notebook as a starting point, create your own notebook (see these instructions for forking the notebook to start with) and complete the functions / analyses. All PAWS notebooks have the option of generating a public link, which can be shared back so that we can evaluate what you did. Use a mixture of code cells and markdown to document what you find and your thoughts.

- As you have questions, feel free to add comments to this task (and please don't hesitate to answer other applicants' questions if you can help).

- Once you feel you have completed your notebook, you may request feedback and we will provide high-level feedback on what is good and what is missing. To do so, send an email to both mentors (mgerlach@wikimedia.org and isaac@wikimedia.org) with the link to your public PAWS notebook. We will try to make time to give this feedback once to anyone who would like it.

- When you are happy with your notebook, include the public link in your final Outreachy project application as a recorded contribution. We encourage you to record contributions as you go as well, to track your progress.