TASK AUTO-GENERATED by Nagios/Icinga RAID event handler

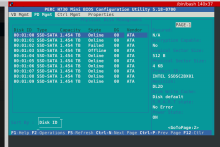

A degraded RAID (megacli) was detected on host cloudvirt1018. An automatic snapshot of the current RAID status is attached below.

Please sync with the service owner to find the appropriate time window before actually replacing any failed hardware.

```
CRITICAL: 1 failed LD(s) (Degraded)

$ sudo /usr/local/lib/nagios/plugins/get_raid_status_megacli
Failed to execute '['/usr/lib/nagios/plugins/check_nrpe', '-4', '-H', 'cloudvirt1018', '-c', 'get_raid_status_megacli']':
RETCODE: 2
STDOUT:
CHECK_NRPE STATE CRITICAL: Socket timeout after 10 seconds.

STDERR:
None
```