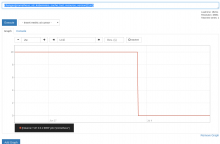

Reported by @akosiaris: it looks like one of the two prometheus@k8s instances stopped refreshing deployments and thus lost metrics for newer containers. Judging by `changes(prometheus_sd_kubernetes_cache_last_resource_version[11m])`, it happened on Jul 03 at 8:30 UTC on prometheus1003.
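To make the check above concrete, here is a minimal Python sketch of what `changes()` counts over a window of samples, and how a flat resource version can be read as a stuck cache. The function names and the staleness rule are illustrative assumptions, not the logic of the actual alert; a very quiet cluster could also show zero changes for legitimate reasons.

```python
from typing import Sequence


def changes(samples: Sequence[float]) -> int:
    """Count how often consecutive samples differ, mirroring PromQL changes()."""
    return sum(1 for prev, cur in zip(samples, samples[1:]) if cur != prev)


def cache_looks_stale(samples: Sequence[float]) -> bool:
    """Hypothetical rule: a resource version that never moved within the
    window (for example the 11m range used above) suggests a stuck cache."""
    return len(samples) > 1 and changes(samples) == 0
```

For example, `cache_looks_stale([79189754, 79189754, 79189754])` flags a window where the resource version stayed flat, while any window with at least one change does not.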

Description

Details

| Subject | Repo | Branch | Lines +/- |
|---|---|---|---|
| prometheus: alert when k8s cache isn't updating | operations/puppet | production | +11 -0 |

Event Timeline

Change 521275 had a related patch set uploaded (by Filippo Giunchedi; owner: Filippo Giunchedi):

[operations/puppet@production] prometheus: alert when k8s cache isn't updating

Likely related, here are the Prometheus logs from after the drop:

2019-06-04T14:59:02 prometheus1003 INFO level=warn ts=2019-06-04T14:59:02.041586097Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:320: watch of *v1.Pod ended with: too old resource version: 120950322 (120953723)"

2019-06-04T14:59:02 prometheus1003 INFO level=warn ts=2019-06-04T14:59:02.041605745Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:302: watch of *v1.Pod ended with: too old resource version: 120950322 (120953723)"

2019-06-04T14:59:02 prometheus1004 INFO level=warn ts=2019-06-04T14:59:02.041371638Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:320: watch of *v1.Pod ended with: too old resource version: 120950322 (120953723)"

2019-06-04T14:59:02 prometheus1004 INFO level=warn ts=2019-06-04T14:59:02.041347786Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:302: watch of *v1.Pod ended with: too old resource version: 120950322 (120953723)"

2019-06-04T14:59:01 prometheus1003 INFO level=warn ts=2019-06-04T14:59:01.30877735Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:320: watch of *v1.Pod ended with: too old resource version: 79189754 (79192259)"

2019-06-04T14:59:01 prometheus1004 INFO level=warn ts=2019-06-04T14:59:01.308788831Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:320: watch of *v1.Pod ended with: too old resource version: 79189754 (79192259)"

2019-06-04T14:59:01 prometheus1004 INFO level=warn ts=2019-06-04T14:59:01.308871629Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:302: watch of *v1.Pod ended with: too old resource version: 79189754 (79192259)"

2019-06-04T14:59:01 prometheus1003 INFO level=warn ts=2019-06-04T14:59:01.308733319Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:302: watch of *v1.Pod ended with: too old resource version: 79189754 (79192259)"

2019-06-04T14:58:47 prometheus1004 INFO level=warn ts=2019-06-04T14:58:47.644963492Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:301: watch of *v1.Service ended with: too old resource version: 120928434 (120953654)"

2019-06-04T14:58:47 prometheus1003 INFO level=warn ts=2019-06-04T14:58:47.64518912Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:301: watch of *v1.Service ended with: too old resource version: 120928434 (120953654)"

2019-06-04T14:58:45 prometheus1004 INFO level=warn ts=2019-06-04T14:58:45.067150151Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:301: watch of *v1.Service ended with: too old resource version: 79189712 (79192206)"

2019-06-04T14:58:45 prometheus1003 INFO level=warn ts=2019-06-04T14:58:45.066914199Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:301: watch of *v1.Service ended with: too old resource version: 79189712 (79192206)"

2019-06-04T13:00:00 prometheus1003 INFO level=warn ts=2019-06-04T13:00:00.654816072Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:301: watch of *v1.Service ended with: too old resource version: 120067974 (120928434)"

2019-06-04T13:00:00 prometheus1004 INFO level=warn ts=2019-06-04T13:00:00.655999409Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:301: watch of *v1.Service ended with: too old resource version: 120067974 (120928434)"

2019-06-04T09:20:45 prometheus1003 INFO level=warn ts=2019-06-04T09:20:45.669318295Z caller=klog.go:86 component=k8s_client_runtime func=Warningf msg="github.com/prometheus/prometheus/discovery/kubernetes/kubernetes.go:320: watch of *v1.Pod ended with: too old resource version: 79051483 (79164509)"
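For comparing the two hosts, a small parser for a single log entry of the shape above might help. This is only a sketch: the `ExpiredWatch` type and its field names are made up for illustration, and reading the first number as the version the watch asked for and the parenthesised number as the one the API server reported back is an assumption.

```python
import re
from typing import NamedTuple, Optional


class ExpiredWatch(NamedTuple):
    host: str        # e.g. prometheus1003
    kind: str        # Kubernetes kind being watched, e.g. Pod or Service
    requested: int   # first number in the message (assumed: requested version)
    reported: int    # number in parentheses (assumed: server-side version)


# Matches one entry: "<timestamp> <host> ... watch of *v1.<Kind> ended with:
# too old resource version: <n> (<m>)"
PATTERN = re.compile(
    r"^\S+ (?P<host>\S+) .*watch of \*v1\.(?P<kind>\w+) ended with: "
    r"too old resource version: (?P<requested>\d+) \((?P<reported>\d+)\)"
)


def parse_expired_watch(entry: str) -> Optional[ExpiredWatch]:
    """Return the parsed fields, or None if the entry has a different shape."""
    m = PATTERN.search(entry)
    if m is None:
        return None
    return ExpiredWatch(m["host"], m["kind"], int(m["requested"]), int(m["reported"]))
```

Grouping the parsed entries by `host` and `kind` shows at a glance whether both instances saw the same expired watches.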

Change 521275 merged by Filippo Giunchedi:

[operations/puppet@production] prometheus: alert when k8s cache isn't updating

Mentioned in SAL (#wikimedia-operations) [2019-07-08T15:59:38Z] <godog> bounce prometheus@k8s on prometheus200[34] - T227478

That's correct; unfortunately we can't at the moment, although one of the features of Thanos is merging results across instances, so gaps like these would, in theory, disappear.
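As a rough illustration of why merging results from two instances hides such gaps, here is a toy Python sketch. It is not Thanos's actual deduplication algorithm; the `Series` type and `merge_replicas` function are hypothetical and simply prefer one replica's samples while filling its holes from the other.

```python
from typing import Dict

# Hypothetical representation: unix timestamp -> sample value
Series = Dict[int, float]


def merge_replicas(primary: Series, secondary: Series) -> Series:
    """Keep every sample from primary and fill missing timestamps from secondary."""
    merged = dict(secondary)
    merged.update(primary)  # primary wins where both replicas have a sample
    return dict(sorted(merged.items()))
```

With this, a series missing on one instance for some interval is still present in the merged view as long as the other instance scraped it.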