On cp1083, ats-tls crashed at 2019-12-29 20:41 with the following message:

Dec 29 20:41:36 cp1083 traffic_server[200306]: Fatal: couldn't allocate 524288 bytes at alignment 4096 - insufficient memory

Dec 29 20:41:50 cp1083 traffic_manager[200292]: [Dec 29 20:41:50.248] {0x2abc5a3fda80} ERROR: wrote crash log to /srv/trafficserver/tls/var/log/crash-2019-12-29-204135.log

Dec 29 20:41:50 cp1083 traffic_manager[200292]: traffic_server: received signal 6 (Aborted)

Some hours later, varnish-fe also crashed for the same reason:

Dec 30 07:52:58 cp1083 varnishd[2809]: Child (2842) died signal=6

Dec 30 07:52:58 cp1083 varnishd[2809]: Child (2842) Panic at: Mon, 30 Dec 2019 07:52:58 GMT

Assert error in mpl_alloc(), cache/cache_mempool.c line 80:

Condition((mi) != 0) not true.

version = varnish-5.1.3 revision NOGIT, vrt api = 6.0

ident = Linux,4.9.0-11-amd64,x86_64,-junix,-smalloc,-smalloc,-hcritbit,epoll

now = 2323463.229078 (mono), 1577692337.881260 (real)

Backtrace:

0x559fbf3ce5c4: /usr/sbin/varnishd(+0x4b5c4) [0x559fbf3ce5c4]

0x559fbf3ca6aa: /usr/sbin/varnishd(+0x476aa) [0x559fbf3ca6aa]

0x559fbf3cb241: /usr/sbin/varnishd(MPL_Get+0x181) [0x559fbf3cb241]

0x559fbf3d0525: /usr/sbin/varnishd(Req_New+0x75) [0x559fbf3d0525]

0x559fbf3a7cdd: /usr/sbin/varnishd(+0x24cdd) [0x559fbf3a7cdd]

0x559fbf3ea692: /usr/sbin/varnishd(+0x67692) [0x559fbf3ea692]

0x559fbf3eadab: /usr/sbin/varnishd(+0x67dab) [0x559fbf3eadab]

0x7fa924b964a4: /lib/x86_64-linux-gnu/libpthread.so.0(+0x74a4) [0x7fa924b964a4]

0x7fa9248d8d0f: /lib/x86_64-linux-gnu/libc.so.6(clone+0x3f) [0x7fa9248d8d0f]

errno = 12 (Cannot allocate memory)

thread = (cache-worker)

thr.req = (nil) {

},

thr.busyobj = (nil) {

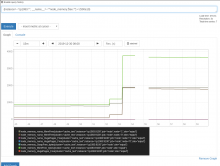

},This is surprising given that the memory utilization graph on grafana seems to indicate that there were some 80G available when ats-tls crashed, and a bit more than that when varnish-fe did.
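Both failures are allocator errors reported as ENOMEM. ATS logged "insufficient memory" for an aligned allocation, and the Varnish panic shows errno = 12 (Cannot allocate memory). The sketch below is a rough illustration of what the ATS line means. It assumes the message comes from a posix_memalign-based wrapper. The helper names `try_memalign` and `describe_failure` are hypothetical, and the return-code-to-message mapping is modeled on the log text, not copied from ATS. The sketch makes one aligned allocation with the logged parameters (524288 bytes, 4096-byte alignment). It does not reproduce the crash and does not explain why ENOMEM was returned.

```python
import ctypes
import ctypes.util
import errno

# Load the C library. If find_library cannot locate it, CDLL(None) falls back
# to the symbols already loaded into the process, which include libc on Linux.
_libc = ctypes.CDLL(ctypes.util.find_library("c") or None)
_libc.posix_memalign.argtypes = [
    ctypes.POINTER(ctypes.c_void_p),
    ctypes.c_size_t,
    ctypes.c_size_t,
]
_libc.posix_memalign.restype = ctypes.c_int
_libc.free.argtypes = [ctypes.c_void_p]
_libc.free.restype = None


def describe_failure(retcode: int, alignment: int, size: int) -> str:
    """Format a failure like the ATS Fatal line (hypothetical, assumed mapping)."""
    if retcode == errno.EINVAL:
        reason = "invalid alignment parameter"
    elif retcode == errno.ENOMEM:
        reason = "insufficient memory"
    else:
        reason = f"unknown error {retcode}"
    return f"couldn't allocate {size} bytes at alignment {alignment} - {reason}"


def try_memalign(alignment: int, size: int) -> int:
    """Attempt one aligned allocation, free it, and return posix_memalign's code.

    posix_memalign reports failure through its return value, not through errno.
    """
    ptr = ctypes.c_void_p()
    rc = _libc.posix_memalign(ctypes.byref(ptr), alignment, size)
    if rc == 0:
        _libc.free(ptr)
    return rc


if __name__ == "__main__":
    # Same size and alignment as in the ats-tls Fatal message.
    rc = try_memalign(4096, 524288)
    print("ok" if rc == 0 else describe_failure(rc, 4096, 524288))
```

On a healthy host this prints "ok". The point of the sketch is that "insufficient memory" means the allocator returned ENOMEM for this specific request.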