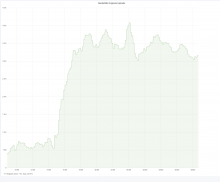

Since March 19, ~15:00 UTC, we've been observing a 3x increase in hourly uploads to Swift.

Looking at recent files, it seems that a large share of the uploads come from https://commons.wikimedia.org/wiki/User:DPLA_bot (see https://commons.wikimedia.org/wiki/Special:Log/DPLA_bot), which is uploading books at one file per page. We discovered this because our "too many uploads per hour" alert tripped.

A few questions I have:

- Is there a way to inspect upload progress from the bot? E.g. is the current batch about to finish? Will it go on for much longer? What is the total expected batch upload size?

- Is the one-file-per-page approach to uploading books OK? It seems like a whole lot of files on the Commons side, given that we support multipage formats (PDF, DjVu, etc.).

- Can we rate limit uploads from DPLA bot (or from all bots in general)?

All of the above aims at guiding our (SRE) expectations in terms of capacity planning for media storage.
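In the meantime, a rough way to watch the bot's upload rate from the outside might be to bucket its upload log entries by hour. The following is only a sketch under some assumptions: it uses the standard MediaWiki `list=logevents` query on Commons, the helper names (`logevents_url`, `fetch_events`, `uploads_per_hour`) and the User-Agent string are made up for illustration, and it fetches a single page of results without following continuation.

```python
import json
from collections import Counter
from datetime import datetime
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API = "https://commons.wikimedia.org/w/api.php"


def logevents_url(user, limit=500):
    """Build a logevents query URL for one user's upload log entries."""
    params = {
        "action": "query",
        "list": "logevents",
        "letype": "upload",
        "leuser": user,
        "leprop": "timestamp|title",
        "lelimit": limit,
        "format": "json",
    }
    return API + "?" + urlencode(params)


def fetch_events(user):
    """Fetch one page of upload log events (no continuation handling)."""
    # Placeholder User-Agent: replace with something that identifies you.
    req = Request(logevents_url(user),
                  headers={"User-Agent": "upload-rate-check/0.1 (hypothetical)"})
    with urlopen(req) as resp:
        return json.load(resp)["query"]["logevents"]


def uploads_per_hour(events):
    """Count log events per UTC hour, keyed as 'YYYY-MM-DD HH:00'."""
    counts = Counter()
    for ev in events:
        ts = datetime.strptime(ev["timestamp"], "%Y-%m-%dT%H:%M:%SZ")
        counts[ts.strftime("%Y-%m-%d %H:00")] += 1
    return dict(counts)
```

Something like `uploads_per_hour(fetch_events("DPLA bot"))` would then give a per-hour count for the most recent entries. It only shows what has already been uploaded, so it does not answer how large the remaining batch is.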