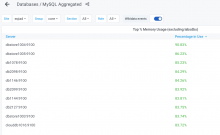

dbstore1004 appears to consume an excessive amount of memory, and its usage keeps growing over time until the host is restarted. A restart has been needed at least twice so far. Memory monitoring keeps this under control, but it would be good to understand whether there is a clear underlying issue causing it.


Details

| Subject | Repo | Branch | Lines +/- |
|---|---|---|---|
| Reduce buffer pool memory for dbstore1004's mariadb instances | operations/puppet | production | +3 -3 |

| Status | Subtype | Assigned | Task |
|---|---|---|---|
| Declined | None | | T270112 mariadb on dbstore hosts, and specifically dbstore1004, possible memory leaking |
| Resolved | BUG REPORT | BTullis | T290841 dbstore1007 is swapping heavilly, potentially soon killing mysql services due to OOM error |

Event Timeline

This is not a major concern since we have memory monitoring (T172490), but I am adding it here for tracking so we can investigate later if possible. Scheduling a restart for now.

Mentioned in SAL (#wikimedia-operations) [2020-12-16T11:10:09Z] <jynus> stopping and restarting dbstore1004 to mitigate (short term) T270112

Change 673849 had a related patch set uploaded (by Elukey; owner: Elukey):

[operations/puppet@production] Reduce buffer pool memory for dbstore1004's mariadb instances

Change 673849 merged by Elukey:

[operations/puppet@production] Reduce buffer pool memory for dbstore1004's mariadb instances
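The patch contents are not reproduced in this task. As a rough illustration of the kind of budgeting involved when several mariadb instances share one host, the sketch below splits the host's RAM across instances after reserving headroom for memory that lives outside the InnoDB buffer pool (per-connection buffers, the OS, and so on). The host RAM, instance count, 25% headroom and the function name `buffer_pool_sizes_gib` are all hypothetical; the actual values set by change 673849 are not shown here.

```python
import math


def buffer_pool_sizes_gib(host_ram_gib: float, instances: int,
                          headroom_fraction: float = 0.25) -> list[int]:
    """Split host RAM evenly into per-instance buffer pool sizes (whole GiB).

    headroom_fraction is the share of RAM deliberately left outside all
    buffer pools; it is an assumed policy, not a MariaDB default.
    """
    if instances < 1:
        raise ValueError("need at least one instance")
    if not 0 <= headroom_fraction < 1:
        raise ValueError("headroom_fraction must be in [0, 1)")
    usable = host_ram_gib * (1 - headroom_fraction)
    # Round down so the combined pools never exceed the usable budget
    per_instance = math.floor(usable / instances)
    return [per_instance] * instances


# Hypothetical host: 512 GiB RAM, 3 instances, 25% headroom
print(buffer_pool_sizes_gib(512, 3))  # [128, 128, 128]
```

Rounding down, rather than to the nearest value, keeps the sum of all buffer pools at or below the budget, so a shrink like this leaves more room before the host starts swapping.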

dbstore1004 no longer exists, and dbstore1007 is tracked in T290841: dbstore1007 is swapping heavilly, potentially soon killing mysql services due to OOM error

dbstore1003 and dbstore1005 look fine; closing this.