This is follow-up work from T348549: EXPLORE provisioning one small k8s VM per each test environment USING a single Cloud VPS project.

Current status:

- users can use Catalyst to trigger the OpenStack API, which starts a VM on Cloud VPS with a small k8s cluster installed

Problems:

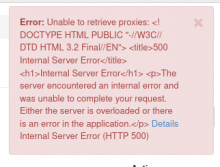

- during development, the OpenStack API would at times time out or return 500 errors

Options:

- would a small persistent k8s cluster that handles API requests be feasible and maintainable?

- would a pool of warm VMs, instantiated outside the user's critical path, lessen this issue, or only move it around?
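
To make the warm-pool option easier to discuss, here is a minimal sketch of the idea in Python. It is not tied to Catalyst or to any OpenStack client: `WarmPool` and the `provision` callable are hypothetical names, and `provision` stands in for whatever call actually creates a VM and may time out or fail. Refilling happens outside the user request, so API errors are absorbed there; when the pool is empty, the user falls back to the slow path, which is the "only move it around" case.

```python
from collections import deque
from typing import Callable, Deque, List


class WarmPool:
    """Keeps up to `target_size` pre-provisioned VMs ready to hand out."""

    def __init__(self, provision: Callable[[], str], target_size: int) -> None:
        # `provision` is a hypothetical callable wrapping the slow, flaky API call.
        self._provision = provision
        self._target_size = target_size
        self._ready: Deque[str] = deque()

    def refill(self) -> List[Exception]:
        """Top the pool up; meant to run from a background job, not a user request.

        Returns the errors encountered so the caller can log or alert on them.
        """
        errors: List[Exception] = []
        missing = self._target_size - len(self._ready)
        for _ in range(missing):
            try:
                self._ready.append(self._provision())
            except Exception as exc:
                # API timeout or 500: skip this slot, the next refill tries again.
                errors.append(exc)
        return errors

    def acquire(self) -> str:
        """Hand out a warm VM if one is ready; otherwise provision on demand."""
        if self._ready:
            return self._ready.popleft()
        # Pool exhausted: the user is back on the slow, failure-prone path.
        return self._provision()

    def ready_count(self) -> int:
        """Number of VMs currently waiting in the pool."""
        return len(self._ready)
```

A scheduler would call `refill()` periodically and `acquire()` would be called when a user requests an environment. The sketch leaves out VM expiry, health checks, and concurrency, all of which a real proposal would need to size.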

The output of this exploration story should be a proposal broken down into story-sized steps.