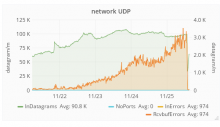

Noticed this via the graphite "drops" alert. It looks like cpjobqueue is sending an increasing amount of statsd traffic and clogging everything up; a single UDP packet carries the same `cpjobqueue.gc.minor` sample many times over:

U 10.64.48.29:51915 -> 10.64.32.155:8125 cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:243082|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms cpjobqueue.gc.minor:295770|ms
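
To quantify the duplication in a capture like the one above, something along these lines might help. It is only a rough sketch: the function name `count_statsd_samples` is made up for illustration, and it assumes the capture text is whitespace-separated `name:value|type` samples, as in the packet shown.

```python
from collections import Counter


def count_statsd_samples(payload: str) -> Counter:
    """Count how often each (name, value, type) sample appears in captured text.

    Hypothetical helper: assumes whitespace-separated `name:value|type` tokens.
    """
    counts = Counter()
    for token in payload.split():
        name, colon, rest = token.partition(":")
        value, bar, kind = rest.partition("|")
        # Skip tokens that are not statsd samples, e.g. the capture header
        # ("U", "10.64.48.29:51915", "->"), which have no "|type" part.
        if colon and bar and name and value and kind:
            counts[(name, value, kind)] += 1
    return counts


if __name__ == "__main__":
    import sys

    # Usage: paste or pipe the captured packet text on stdin.
    for (name, value, kind), n in count_statsd_samples(sys.stdin.read()).most_common():
        print(f"{n:6d}  {name}:{value}|{kind}")
```

A high count for a single `(name, value, type)` tuple within one packet would point at the sender emitting the same sample repeatedly, rather than at many distinct measurements.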