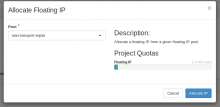

Allocating a new floating IP address through Horizon in the new eqiad1 deployment results in Neutron allocating 3 different addresses, for example 185.15.56.13, 10.64.22.2 and 10.64.22.3.

Only the first address belongs to the proper floating IP pool; the other two belong to the transport subnet.
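To make the mismatch easy to check, here is a minimal sketch that sorts the allocated addresses by subnet. The two CIDR ranges are assumptions picked only so that the example addresses fall inside them; they are not taken from the actual eqiad1 Neutron configuration, and the `classify` helper is hypothetical.

```python
import ipaddress

# ASSUMPTION: both CIDRs are illustrative placeholders, not the real
# eqiad1 subnet definitions. Substitute the values Neutron reports.
FLOATING_POOL = ipaddress.ip_network("185.15.56.0/25")
TRANSPORT_SUBNET = ipaddress.ip_network("10.64.22.0/24")


def classify(address: str) -> str:
    """Return which (assumed) subnet an address belongs to."""
    ip = ipaddress.ip_address(address)
    if ip in FLOATING_POOL:
        return "floating"
    if ip in TRANSPORT_SUBNET:
        return "transport"
    return "unknown"


# The three addresses from the example allocation in this report.
allocated = ["185.15.56.13", "10.64.22.2", "10.64.22.3"]
result = {addr: classify(addr) for addr in allocated}

for addr, subnet in result.items():
    print(f"{addr}: {subnet}")
```

Under those assumed ranges, any address classified as something other than `floating` is one that should not have been allocated for a floating IP request.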

We therefore suspect a misconfiguration somewhere that leaves Neutron unable to tell which pool to use for floating IPs.