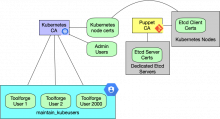

Right now, most k8s services, including etcd, use puppet certs (which are valid X.509 certs issued with the puppetmaster itself as the CA). The problem with this is that puppet is not really designed to be a CA for other services, so upstream cryptically suggests not adding SANs to client certificates (only to the puppetmaster's own).

As is evident in the subtasks of this task, SANs (Subject Alternative Names) are needed to make the names k8s services have in DNS consistent with the names in the certs actually used.
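To make the DNS/cert mismatch concrete, here is a minimal sketch of the name check a TLS client performs against a cert's SAN DNS entries. The function name and the `example.internal` hostnames are hypothetical, and the wildcard handling is a simplified version of the usual rule (a `*` covers exactly one left-most label), not a full implementation of any particular library's matcher.

```python
def hostname_matches_san(hostname, san_dns_names):
    """Return True if hostname is covered by one of the cert's SAN DNS entries.

    Simplified sketch: exact match, or a "*." wildcard that covers exactly
    one left-most label. Names are compared case-insensitively.
    """
    host = hostname.lower().rstrip(".")
    for san in san_dns_names:
        pattern = san.lower().rstrip(".")
        if pattern == host:
            return True
        if pattern.startswith("*."):
            # Drop the left-most label of the hostname and compare the rest
            # against the part of the pattern after "*.".
            _, _, host_rest = host.partition(".")
            if host_rest and host_rest == pattern[2:]:
                return True
    return False


# Hypothetical names: the cert only carries the instance's own FQDN,
# while clients connect through a service alias in DNS.
cert_sans = ["k8s-etcd-1.example.internal"]
print(hostname_matches_san("k8s-etcd-1.example.internal", cert_sans))
print(hostname_matches_san("etcd.example.internal", cert_sans))
```

A cert without a SAN for the alias fails the second check, which is the kind of inconsistency the subtasks describe.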

It is also worth noting that client certs don't need to be issued by the same CA as the server certs, as long as the config for each service specifies which CA cert to validate them against. This suggests that we could use puppet for client certs even if we chose not to use it for the server-side certs (at the cost of some complexity).
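The split between server-side certs and the CA used to validate clients can be sketched with Python's standard `ssl` module. This is only an illustration of the general TLS mechanism, not how any k8s component is configured; the function name and the file paths in the usage note are hypothetical.

```python
import ssl


def make_server_context(server_cert=None, server_key=None, client_ca_file=None):
    """Build a TLS server context whose client-validation CA is independent
    of the CA that issued the server's own cert."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    if server_cert:
        # The server's own cert/key; may be issued by any CA the clients trust.
        ctx.load_cert_chain(certfile=server_cert, keyfile=server_key)
    # Require clients to present a cert...
    ctx.verify_mode = ssl.CERT_REQUIRED
    if client_ca_file:
        # ...and validate it against this CA only, e.g. the puppet CA.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

With hypothetical paths, a call such as `make_server_context("/path/to/server.crt", "/path/to/server.key", "/path/to/puppet-ca.pem")` would present a server cert from one CA while accepting only client certs signed by the puppet CA.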

Using puppet is somewhat attractive because we already have that infrastructure in place, and it distributes certs for us (or at least we already have to deal with its quirks). Puppet certs do not help with user certs, though. X.509 certs can be distributed for user groups, and that seems like the kind of thing maintain-kubeusers would be great for, given lots of changes. Turnkey solutions for PKI on Linux are interesting, but it may be just as well to use a script and maintain a "CA" on one instance or another. The kubernetes api-server can be a CA, but we are aiming for HA there.

There's enough here to initially gather tasks under this one and then merge them as we figure out what we are changing/fixing/solving/rejecting.