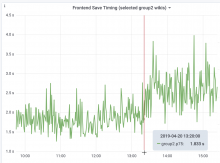

Backend save timing went from ~750ms to ~850ms right after an MW train deploy.

19:41 marxarelli: dduvall@deploy1001 rebuilt and synchronized wikiversions files: group1 wikis to 1.33.0-wmf.24
19:35 marxarelli: starting promotion of 1.33.0-wmf.24 to group1

I'm also not sure why the SAL entries and the Grafana tags are off by so many minutes (it's not as if this was a slow full scap).

I also notice an earlier bump around 2019-04-07 01:00:00. No scap tags exist around then, and SAL is empty.