The WMCS project gitlab-test hosts an instance called gitlab-ansible-test, which was used for pre-production testing of GitLab configuration changes. The instance was set up before the migration from Ansible to Puppet (see T283076). The project gitlab-test also contains a dedicated Puppet host and a gitlab-puppet-test machine, which was mostly used to test changes for the Puppetization of the Ansible code (see T283076). This instance is out of date and has no public IP (because of quota limits).

We have to decide whether a dedicated Puppet host is needed and, if so, how we want to manage it.

The old instances and the Puppet host should be replaced by a new instance that uses the same code as production GitLab (plus a fresh Puppet host, if needed).

So I see the following steps here:

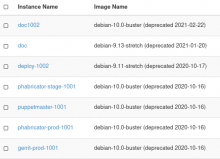

- clean up old instances
  - gitlab-ansible-test
  - gitlab-puppet-test-7
  - puppet-jelto-6
- decide whether a dedicated Puppet host is needed: not needed
- create new gitlab-test instance (gitlab-prod-1001 in devtools project)
- review and adapt Hiera data for the gitlab role for WMCS (754063) (done; some follow-ups still in progress)
- set up gitlab-test (now gitlab-prod-1001.devtools) using Puppet
  - solve issue with installation of the gitlab role
    - gitlab-ce package installation fails because of the Postgres version (?)
  - additional firewall rules for floating IP
  - fix listen addresses for floating IP
  - fix failing certbot runs
  - migrate to wmcloud.org DNS zone
- add gitlab.devtools.wmcloud.org (previously gitlab.devtools.wmflabs.org) to CAS-SSO
- apply gitlab-settings
- document everything

[] automate creation of new ephemeral test instances (see T302976)