Some maintenance work is needed to shrink the database of the Spanish edition of Wikimini.

The es database currently takes 27G. That makes little sense: the it, en and fr databases together take 8G or less, and the es edition is only a beta.

Quick overview:

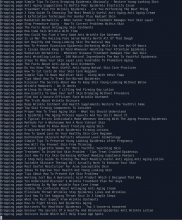

```
$ du -sh /var/lib/mysql/* | sort -hr | grep wikimini
27G	/var/lib/mysql/wikimini_eswiki
5.1G	/var/lib/mysql/wikimini_itwiki
2.8G	/var/lib/mysql/wikimini_frwiki
2.1G	/var/lib/mysql/wikimini_beta_frwiki
1.1G	/var/lib/mysql/wikimini_stockwiki
930M	/var/lib/mysql/wikimini_beta_stockwiki
192M	/var/lib/mysql/wikimini_svwiki
157M	/var/lib/mysql/wikimini_labwiki
30M	/var/lib/mysql/wikimini_enwiki
16M	/var/lib/mysql/wikimini_testwiki
8.3M	/var/lib/mysql/wikimini_arwiki2
8.3M	/var/lib/mysql/wikimini_arwiki
```
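Adding up the it, fr and en rows of the `du` listing supports the "8G or less" figure and shows how far out of line the es database is. The result is approximate, because `du -h` rounds each size and reports binary units. This means 30M is roughly 0.03G:

$$
\underbrace{5.1}_{\text{it}} + \underbrace{2.8}_{\text{fr}} + \underbrace{0.03}_{\text{en}} \approx 7.93\ \text{G} \le 8\ \text{G},
\qquad
\frac{27\ \text{G}}{7.93\ \text{G}} \approx 3.4
$$

In other words, the es beta alone is about 3.4 times the size of the three other editions combined.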