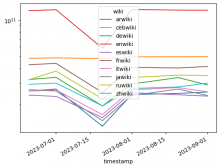

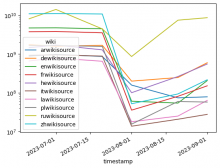

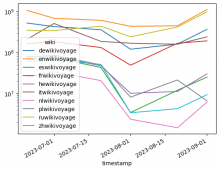

It looks like data is missing from our eowiki namespace 0 dumps, and we need to figure out the root cause. More information can be found here: https://meta.wikimedia.org/wiki/Talk:Wikimedia_Enterprise#Esperanto_(eowiki-NS0)_and_Aragonese_(anwiki-NS0)_Wikipedia_problem

For context: our dumps are mirrored to https://dumps.wikimedia.org/ twice a month. The runs can be found here: https://dumps.wikimedia.org/other/enterprise_html/runs/

Acceptance criteria

* Figure out the root cause

* Create a ticket for the solution (if the root cause was identified)

* Communicate the findings back to the Talk page

Developer Notes

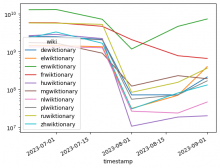

- The same issue is showing up in enwiktionary.

File sizes from the most recent enwiktionary HTML dumps (NS0):

20230701: 13 GB

20230720: 7.1 GB

20230801: 1.1 GB

20230820: 4.6 GB

20230901: 7.2 GB

20230920: 3 GB

20231001: 5 GB

20231020: 2.9 GB

20231101: 3.0 GB

20231120: 3.2 GB

20231201: 3.5 GB

20231220: 3.8 GB

20240120: 9.6 GB

20240201: 9.6 GB

20240220: 9.6 GB

20240301: 9.6 GB

20240320: 10.0 GB

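Something is going really wrong there: the dump shrinks from 13 GB (20230701) to 1.1 GB (20230801), fluctuates between 2.9 GB and 7.2 GB through 20231220, and then sits at 9.6 to 10.0 GB from 20240120 onward, still below the 20230701 size.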
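To make the pattern in the list above easier to see, here is a minimal Python sketch that flags runs whose size falls well below the largest dump seen so far. It assumes that an NS0 dump should not shrink much between runs. The 50% threshold and the names `SIZES_GB` and `flag_shrunken_runs` are hypothetical choices for illustration and are not part of any existing tooling. The sizes are copied from the list above.

```python
# Sizes in GB copied from the enwiktionary NS0 list above, keyed by run date.
SIZES_GB = {
    "20230701": 13.0, "20230720": 7.1, "20230801": 1.1, "20230820": 4.6,
    "20230901": 7.2, "20230920": 3.0, "20231001": 5.0, "20231020": 2.9,
    "20231101": 3.0, "20231120": 3.2, "20231201": 3.5, "20231220": 3.8,
    "20240120": 9.6, "20240201": 9.6, "20240220": 9.6, "20240301": 9.6,
    "20240320": 10.0,
}


def flag_shrunken_runs(sizes, threshold=0.5):
    """Return (run, size, ratio) for runs below threshold * largest earlier size.

    The threshold is an arbitrary assumption, not a known property of the dumps.
    """
    flagged = []
    largest_so_far = 0.0
    for run in sorted(sizes):  # YYYYMMDD strings sort chronologically
        size = sizes[run]
        if largest_so_far > 0 and size < threshold * largest_so_far:
            flagged.append((run, size, round(size / largest_so_far, 2)))
        largest_so_far = max(largest_so_far, size)
    return flagged


if __name__ == "__main__":
    for run, size, ratio in flag_shrunken_runs(SIZES_GB):
        print(f"{run}: {size} GB ({ratio:.0%} of the largest earlier dump)")
```

With the sizes listed above and the assumed 50% threshold, this flags every run from 20230801 through 20231220 except 20230901. A check like this only shows which runs look suspicious; it says nothing about the root cause.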