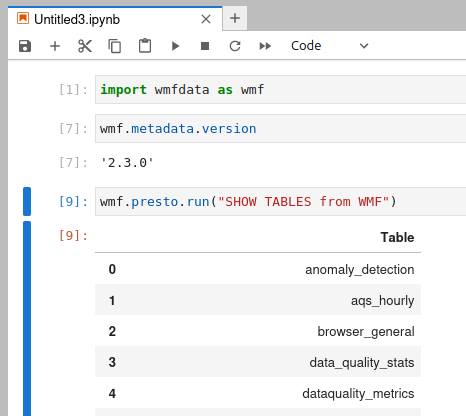

Currently, Wmfdata connects directly to an-coord1001.eqiad.wmnet. However, we should instead connect through the analytics-presto.eqiad.wmnet CNAME (T273642), so that Wmfdata adapts seamlessly if the coordinator role is switched to a different server.

I tried just switching to the new host name, but that failed with `CertificateError: hostname 'analytics-presto.eqiad.wmnet' doesn't match 'an-coord1001.eqiad.wmnet'`.
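The error message suggests that the certificate presented by the server names only an-coord1001.eqiad.wmnet, so TLS hostname verification rejects the CNAME. The sketch below illustrates that check under stated assumptions: `example_cert` is a hypothetical certificate dict shaped like the output of Python's `ssl.SSLSocket.getpeercert()`, not the real certificate, and the helper names `cert_dns_names` and `hostname_covered` are made up for illustration. It only does exact matching and ignores wildcard names.

```python
def cert_dns_names(cert):
    """Return the DNS names found in a cert dict shaped like getpeercert() output."""
    names = [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]
    if not names:
        # Fall back to the subject's commonName when no DNS subjectAltName is present.
        for rdn in cert.get("subject", ()):
            for key, value in rdn:
                if key == "commonName":
                    names.append(value)
    return names


def hostname_covered(cert, hostname):
    """Exact, case-insensitive match only; wildcard handling is deliberately omitted."""
    return hostname.lower() in (name.lower() for name in cert_dns_names(cert))


# Hypothetical certificate contents, assumed from the error message above.
example_cert = {
    "subject": ((("commonName", "an-coord1001.eqiad.wmnet"),),),
    "subjectAltName": (("DNS", "an-coord1001.eqiad.wmnet"),),
}

for host in ("an-coord1001.eqiad.wmnet", "analytics-presto.eqiad.wmnet"):
    print(host, "covered" if hostname_covered(example_cert, host) else "NOT covered")
```

If the real certificate looks like this, the CNAME would probably need to be listed in the certificate's subject alternative names before Wmfdata can connect through it with verification enabled.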

The relevant code is in wmfdata-python/wmfdata/presto.py.