We have 15 Trusty instances, each with 8 GB of RAM. Some jobs have already been migrated to Nodepool Trusty images, so we should be able to reclaim some of the instances.

They were spawned en masse to absorb a surge in demand back when we switched MediaWiki from PHP 5.3 to PHP 5.5.

It seems we can drop at least half of them.

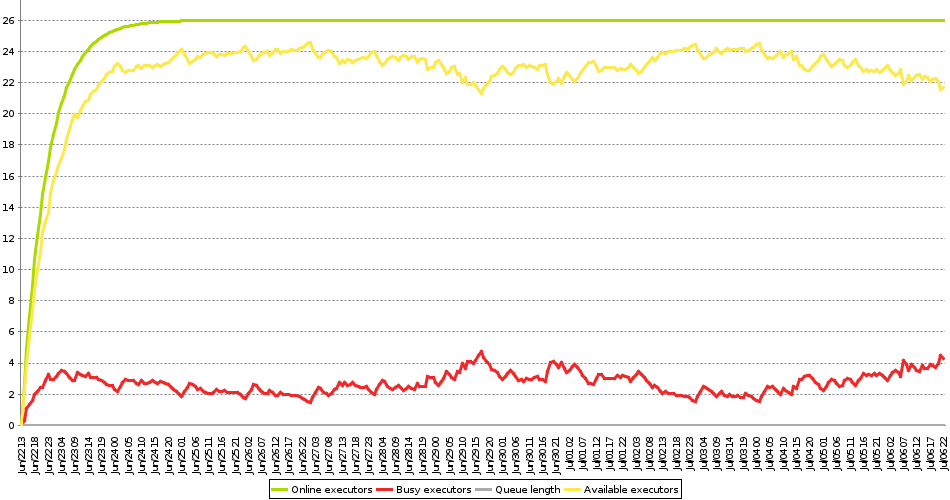

The Jenkins load statistics graph showing how busy the executors are:

https://integration.wikimedia.org/ci/label/UbuntuTrusty/load-statistics?type=hour
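
For a rough sense of what dropping at least half of the 15 instances would free up, here is a minimal sketch of the arithmetic. It uses only the figures stated above (15 instances, 8 GB of RAM each). Rounding "half" up to 8 instances is an assumption, and the sketch ignores vCPU and disk quota.

```python
import math

# Figures taken from the task description.
INSTANCES = 15
RAM_PER_INSTANCE_GB = 8


def reclaimed_ram_gb(dropped: int) -> int:
    """RAM freed by deleting `dropped` instances of identical size."""
    return dropped * RAM_PER_INSTANCE_GB


# Assumption: "at least half" of 15 is rounded up to 8 instances.
at_least_half = math.ceil(INSTANCES / 2)

total_ram_gb = INSTANCES * RAM_PER_INSTANCE_GB
freed_gb = reclaimed_ram_gb(at_least_half)
remaining_instances = INSTANCES - at_least_half

print(f"Total RAM today: {total_ram_gb} GB")
print(f"Dropping {at_least_half} instances frees {freed_gb} GB")
print(f"Remaining: {remaining_instances} instances, {total_ram_gb - freed_gb} GB")
```

Under those assumptions, dropping 8 instances frees 64 GB out of 120 GB and leaves 7 instances.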