Page Previews was enabled by default for all anons on another 100 wikis on Wednesday, 16th August, between 13:00 and 14:00 UTC.

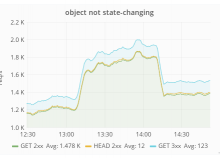

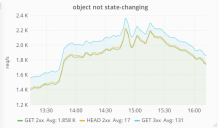

Soon after, @fgiunchedi noticed a spike in the number of requests to Swift. Page Previews shows a thumbnail image for many previews, so it will increase the number of image requests. It therefore seems likely that this spike is related to the deployment.

Is the spike high enough to cause harm? How is cache_upload holding up under the increased load? How does this feed back into the Page Previews deployment plan?