Umbrella task for registry improvements.

Description

Details

| Subject | Repo | Branch | Lines +/- |
|---|---|---|---|
| Adding registries VMs. | operations/puppet | production | +11 -1 |
| Introducing DNS entries for new docker-registries | operations/dns | master | +8 -3 |

Event Timeline

After looking into it a little, packaging Harbor would be challenging. Harbor is a set of microservices published as containers. The installation and dev guides treat docker-compose as a strong requirement for running the Harbor components. To run that docker-compose setup we would need to build the container images and host them in our own Docker registry, which is something of a catch-22 (other people can simply download them from DockerHub).

All container images are built on Photon, an RPM-based minimal container OS that we would also need to repackage. The easiest way to package Harbor would be to make a binary deb package of the offline installer, but that would imply managing an almost 1G deb package and trusting images coming from the offline bundle. Neither sounds great, IMHO.

If we look at the list of benefits of setting up Harbor, I think we can achieve some of the features without getting ourselves into a complex setup.

If we just update the Docker registry (Docker Distribution nowadays) from version 2.1 to 2.7-rc0, we easily obtain:

- Prometheus exported metrics. (https://github.com/docker/distribution/releases/tag/v2.7.0-rc.0 https://github.com/docker/distribution/commit/e3c37a46e2529305ad6f5648abd6ab68c777820a )

- Garbage collection. (https://docs.docker.com/v17.09/registry/garbage-collection/#run-garbage-collection)

- Swift backend support, we were already using this and the support continues.

- HA setup. Given that the docker-registry is actually writing blobs into Swift, we would only need to move the cache to a Redis instance (https://docs.docker.com/registry/configuration/#cache) and we would gain an HA setup where we can increase capacity just by adding new registry servers.
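To make the list above a bit more concrete, here is a minimal sketch of what the relevant parts of a Docker Distribution 2.7 config could look like, built as a Python dict and printed for inspection. The config keys (`storage.swift`, `storage.cache.blobdescriptor`, `redis.addr`, `http.debug.prometheus`) are the ones documented upstream; the hostnames, credentials, container name and the `build_registry_config` helper are made up for illustration and are not our actual settings.

```python
import json


def build_registry_config(swift_container, redis_addr, debug_addr="localhost:5001"):
    """Return a Docker Distribution config as a plain dict (hypothetical helper)."""
    return {
        "version": 0.1,
        "storage": {
            # Blobs keep living in Swift; every value here is a placeholder.
            "swift": {
                "authurl": "https://swift.example.org/auth/v1.0",
                "username": "registry-user",
                "password": "CHANGEME",
                "container": swift_container,
            },
            # Shared blob descriptor cache in Redis instead of per-process memory,
            # so any registry server can answer any request.
            "cache": {"blobdescriptor": "redis"},
        },
        "redis": {"addr": redis_addr},
        "http": {
            "addr": ":5000",
            # Prometheus metrics are served on the debug listener (new in 2.7).
            "debug": {
                "addr": debug_addr,
                "prometheus": {"enabled": True, "path": "/metrics"},
            },
        },
    }


config = build_registry_config("docker-registry", "redis.example.org:6379")
print(json.dumps(config, indent=2))
```

With the state split between Swift and Redis like this, the registry servers themselves hold nothing local, which is what would let us add more of them behind a load balancer.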

Then from Harbor we would only miss the following features:

- LDAP authorization

- Notary integration

- Clair integration.

- Richer API

- Make the registry read-only so we can get rid of nginx trickery.

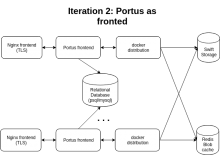

Except for the Notary integration, the rest could be achieved using Portus as a Docker Distribution frontend:

- LDAP authorization and authentication. http://port.us.org/features/2_LDAP-support.html

- Clair integration. http://port.us.org/docs/How-to-setup-secure-registry.html

- Richer API http://port.us.org/docs/API.html

- Make the registry read-only so we can get rid of Nginx trickery. http://port.us.org/features/anonymous_browsing.html

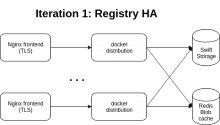

I would propose upgrading Docker Distribution to 2.7 to get all the benefits I listed above and moving the current setup to an HA one. Once that is deployed and working, we can plan iteration 2, which would add Portus as a frontend.

What do you think? I would like some comments

I would say that this sounds like a better direction to go into, yes.

What we still miss is a clear idea of what we want our registry infrastructure to look like if we take this direction. But that can be left to a later iteration; we can start by understanding how complex it would be to set up Portus on our current infrastructure.

Ouch.

> All containers images are built based on Photon, which is an RPM-based minimal container os that would also need to repackage. The easiest way to package it would be making a deb binary package of the offline installer, that would imply managing an almost 1G deb package and trusting images coming from the offline bundle both things don't sound great IMHO.

OUCH!!!

Yeah that sounds like a sane plan forward. Let's go for that.

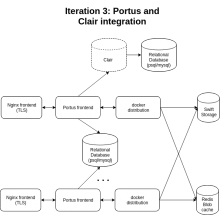

Regarding @Joe's comment: the final picture should be something similar to this. It is quite complex and is built on the idea that Portus is HA (which means the DB that Portus uses for state is resilient enough); in this scenario, if Portus goes down we will have no registry.

I've also found out that the garbage collection task is quite intensive and should be done with the registry configured in read-only mode. From the Portus docs:

Maintenance

The Garbage Collector (GC from now on) is a crucial part on this feature and it’s implemented by Docker Distribution. The bad thing is that it has been provided as a separate command. This means that administrators have to call this command explicitly instead of it being handled automatically for us. Moreover, in order to do it safely in production, some downtime is to be expected: you can run the GC anytime you want but it will bring concurrency issues if executed when some pushes were being performed. You have two ways to avoid concurrency problems:

- If you are expecting the GC to be fast, then stopping the registry, running the GC and restarting the registry again should do the trick.

- If you expect the GC to take a fair amount of time, then we recommend to restart your registry in read-only mode and perform GC then. Once GC is over, you can restart your registry again with read-only mode disabled.

As you can see, there is no way around this: you have to expect some downtime if you want to do some cleanup in your registry. One reasonable question for this situation is: how do I know whether the GC process is going to take a long time or not? There is no hard rule for it but our experience tells us that you will have to proceed with the second point above if you have quite some images stored in a cloud storage service like Amazon’s S3. That is, the main bottleneck is accessing your storage service, otherwise GC should be fast.

Despite this inconvenience in maintenance, running the GC is actually quite simple. You just have to call the registry command with the new garbage-collect command. It accepts one argument: the configuration file. Moreover, if you just want to check whether there are orphaned blobs or not, you can simulate the garbage collection by using the dry-run mode with the -d flag.
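As a rough illustration of the invocation described in that quote, here is a small sketch that assembles the `garbage-collect` command line and defaults to dry-run mode so nothing is deleted by accident. The binary name `registry`, the config path and both helper functions are assumptions on my side; our package may install the binary under a different name.

```python
import subprocess
from typing import List


def gc_command(config_path: str, dry_run: bool = True, binary: str = "registry") -> List[str]:
    """Build the argv for a garbage-collection run (hypothetical helper)."""
    cmd = [binary, "garbage-collect"]
    if dry_run:
        # -d only reports what would be removed, without deleting anything.
        cmd.append("-d")
    cmd.append(config_path)
    return cmd


def run_gc(config_path: str, dry_run: bool = True) -> str:
    """Run the GC and return its output; raises CalledProcessError on failure."""
    result = subprocess.run(
        gc_command(config_path, dry_run),
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout + result.stderr


# Show the dry-run command line without executing it.
print(" ".join(gc_command("/etc/docker/registry/config.yml")))
```

A real run (with `dry_run=False`) should only happen while the registry is stopped or in read-only mode, as the quoted docs explain.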

Clair is another service that should be resilient enough, but it is not as critical as Portus: if Clair goes down, containers can still be fetched.

If we think about how to get there iteratively, these are the steps we should follow:

Our current setup is something like this. I think a low-hanging fruit would be to make this setup more resilient, HA-ready and instrumented with metrics. After that we can deploy Portus as a frontend and integrate it with LDAP for push authentication.

The last step would then be the diagram at the beginning: integrating Clair.

Change 480732 had a related patch set uploaded (by Fsero; owner: Fsero):

[operations/dns@master] Introducing DNS entries for new docker-registries

Change 480732 merged by Fsero:

[operations/dns@master] Introducing DNS entries for new docker-registries

Change 480800 had a related patch set uploaded (by Fsero; owner: Fsero):

[operations/puppet@production] Adding registries VMs.

Keeping this task open, but we can mark iteration 1 as completed, with the exception of using envoy for proxying between redis instances. Right now, if the redis server goes down the registry will go down too, because its healthchecks will fail.

Setting up envoy as a redis proxy should help with this during maintenance.
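To illustrate the failure mode, here is a toy sketch of the idea behind putting a proxy in front of several redis instances: the client asks for "an upstream" and gets the first one that answers, instead of depending on a single server. This is not how envoy's redis proxy is implemented (it does considerably more); the addresses and both function names are hypothetical.

```python
import socket
from typing import Callable, Iterable, Optional, Tuple

Address = Tuple[str, int]


def tcp_reachable(addr: Address, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to addr can be opened within timeout."""
    try:
        with socket.create_connection(addr, timeout=timeout):
            return True
    except OSError:
        return False


def pick_upstream(
    upstreams: Iterable[Address],
    is_healthy: Callable[[Address], bool] = tcp_reachable,
) -> Optional[Address]:
    """Return the first healthy upstream, or None if all of them are down."""
    for addr in upstreams:
        if is_healthy(addr):
            return addr
    return None


# Simulate the first instance being down for maintenance.
redis_instances = [("redis1.example.org", 6379), ("redis2.example.org", 6379)]
down = {("redis1.example.org", 6379)}
chosen = pick_upstream(redis_instances, is_healthy=lambda addr: addr not in down)
print(chosen)
```

With a single redis server the equivalent list has one entry, so any maintenance on it leaves the registry healthcheck with nothing to fall back to.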