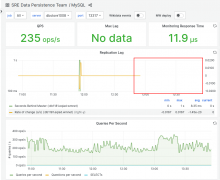

We need to configure dbstore1008 as a replacement for dbstore1003.

dbstore1003 is a MariaDB server containing replicas of the MediaWiki databases for analytics and research use (mariadb::analytics_replica). It hosts three sections:

- DB section s1 (alias: mysql.s1)

- DB section s5 (alias: mysql.s5)

- DB section s7 (alias: mysql.s7)

These are unredacted MariaDB replicas supporting analytics use cases: https://wikitech.wikimedia.org/wiki/Analytics/Systems/MariaDB

Once the databases have been instantiated on the new host, we will need to change the DNS CNAME and SRV records.

Acceptance criteria

The following three sections must be served from dbstore1008:

- s1-analytics-replica.eqiad.wmnet

- s5-analytics-replica.eqiad.wmnet

- s7-analytics-replica.eqiad.wmnet
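One way to check the CNAME part of this criterion after the DNS change is to resolve each alias and compare its canonical name against the new host. The following is a minimal sketch, not an agreed procedure. It assumes that the local resolver reports the CNAME target as the canonical name, and that the short host name is enough to identify the server. The function names `served_from` and `check_all` are hypothetical. It does not cover the SRV records, which would need a separate lookup.

```python
import socket

# Hostnames taken from the acceptance criteria above.
REPLICA_ALIASES = [
    "s1-analytics-replica.eqiad.wmnet",
    "s5-analytics-replica.eqiad.wmnet",
    "s7-analytics-replica.eqiad.wmnet",
]


def served_from(alias, expected_host, resolve=socket.gethostbyname_ex):
    """Return True if the canonical name behind `alias` is `expected_host`.

    `resolve` is injectable so the check can be exercised without DNS access.
    """
    canonical, _aliases, _addresses = resolve(alias)
    # Compare on the short host name only (assumption: that is sufficient here).
    return canonical.split(".")[0] == expected_host


def check_all(expected_host="dbstore1008", resolve=socket.gethostbyname_ex):
    """Map each replica alias to whether it resolves to `expected_host`."""
    return {
        alias: served_from(alias, expected_host, resolve)
        for alias in REPLICA_ALIASES
    }


if __name__ == "__main__":
    for alias, ok in check_all().items():
        print(f"{alias}: {'OK' if ok else 'NOT on dbstore1008'}")
```

Run from a host inside the production network, this prints one line per alias. Any alias still pointing at dbstore1003 is reported as not being on dbstore1008.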

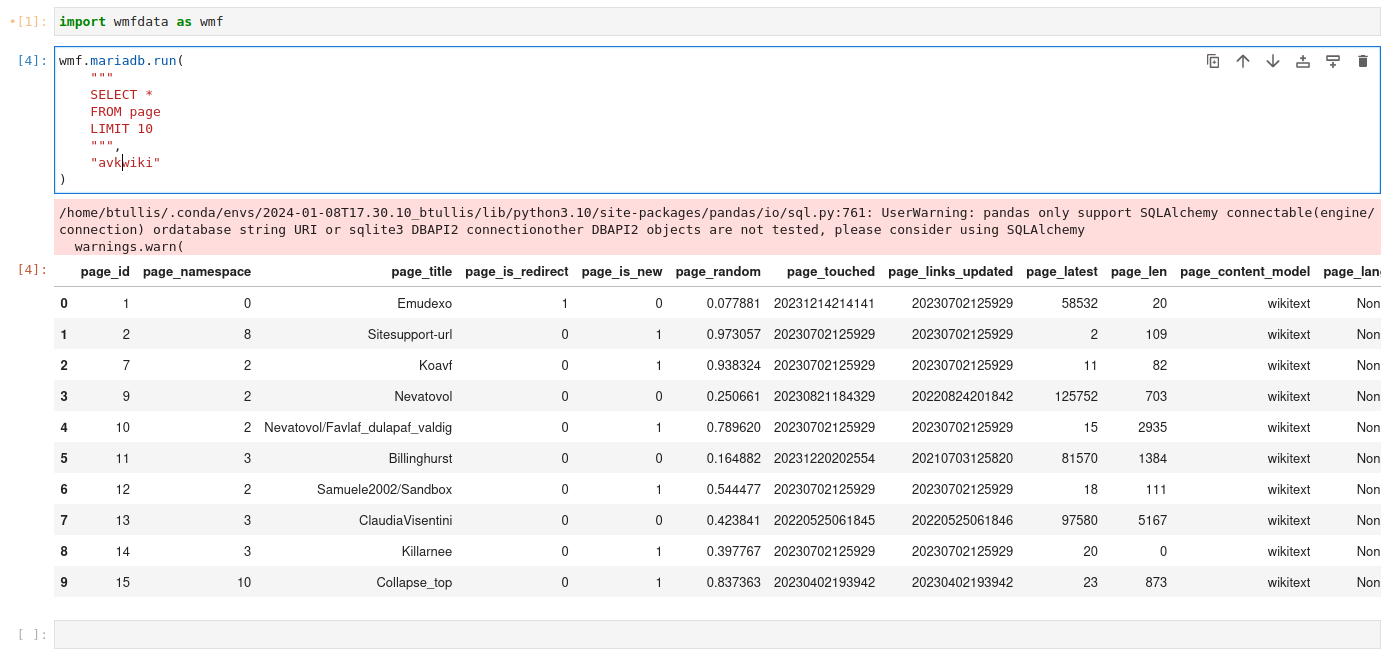

- The analytics-mysql script has been proven to work with the new server

- The wmfdata-python library has been proven to work with the new server. It should, since it calls analytics-mysql.
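A small helper could confirm which host and port the analytics-mysql script selects for a given wiki. This is a sketch under two assumptions: that analytics-mysql accepts a `--print-target` option, and that it prints the target as `host:port`. Both should be checked against the script itself before relying on this. The helper names `parse_target` and `target_for` are hypothetical.

```python
import subprocess


def parse_target(output):
    """Split a 'host:port' string (assumed output format) into (host, port)."""
    host, _, port = output.strip().rpartition(":")
    return host, int(port)


def target_for(wiki, run=subprocess.run):
    """Ask analytics-mysql which target it would connect to for `wiki`.

    Assumes a `--print-target` option exists; `run` is injectable for testing.
    """
    result = run(
        ["analytics-mysql", wiki, "--print-target"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_target(result.stdout)


if __name__ == "__main__":
    host, port = target_for("enwiki")
    print(f"enwiki -> {host}:{port}")
```

The printed host is expected to be one of the replica aliases rather than dbstore1008 itself, so this check complements a DNS lookup of the alias and does not replace it.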

N.B. dbstore1003 is currently at around 90% capacity on /srv, so this work should be completed soon, and the new /srv volume on dbstore1008 should be sized appropriately.