Propagating the lag of a wdqs host should only be done if this host is ''pooled'' (actually serving user traffic).

Determining the ''pooling'' status turned out to be quite challenging in our infra, so in T336352 we started using a metric based on the query rate, hoping it would be a reasonable proxy for whether the server is serving users or not.

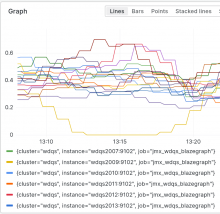

This has worked well so far, but a recent incident, in which a server was depooled after getting stuck for some reason, showed that this query-rate metric is too fragile.

We consider a server to be pooled if its query rate is above 1 qps:

rate(org_wikidata_query_rdf_blazegraph_filters_QueryEventSenderFilter_event_sender_filter_StartedQueries{}[10m]) > 1

Sadly this did not hold for wdqs1013: after it was depooled, its query rate for some reason stayed above 1 (though below 1.3), so it was still treated as pooled. It is possible that this metric is polluted by monitoring queries that have nothing to do with serving user traffic. We should perhaps refine how we generate org_wikidata_query_rdf_blazegraph_filters_QueryEventSenderFilter_event_sender_filter_StartedQueries and make sure it only measures user queries.
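
To make the failure mode concrete, here is a minimal sketch of the current heuristic. It is not the actual implementation: the function names, the host names, the sample qps and lag values, and the assumption that propagation takes the maximum lag across "pooled" hosts are all hypothetical and only serve as illustration.

```python
from typing import Dict, Optional

# Threshold taken from the rule above: rate(...[10m]) > 1
POOLED_QPS_THRESHOLD = 1.0


def is_considered_pooled(query_rate_qps: float,
                         threshold: float = POOLED_QPS_THRESHOLD) -> bool:
    """Mirror the heuristic: a host counts as pooled if its query rate exceeds the threshold."""
    return query_rate_qps > threshold


def propagated_max_lag(hosts: Dict[str, dict]) -> Optional[float]:
    """Return the max lag over hosts the heuristic considers pooled (assumed
    propagation strategy), or None if no host qualifies."""
    lags = [h["lag_s"] for h in hosts.values() if is_considered_pooled(h["qps"])]
    return max(lags) if lags else None


# Hypothetical sample values, for illustration only.
hosts = {
    "wdqs-pooled-example": {"qps": 25.0, "lag_s": 30.0},
    # Depooled host whose residual (e.g. monitoring) queries keep the rate just above 1.
    "wdqs-depooled-example": {"qps": 1.2, "lag_s": 7200.0},
}

print(propagated_max_lag(hosts))
```

With these made-up numbers the depooled host's lag is the one that gets propagated, which is the false positive described above. Any fix that leaves residual non-user queries in the metric keeps this sensitivity to the exact threshold.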

AC:

- wdqs lag propagation should no longer include false positives (i.e. it should not count the lag of a server that is actually depooled)