“I felt in need of a great pilgrimage, so I sat still for three days.” Hafiz

The post Testing instrumentation on Special:Homepage (QA perspective) described a general, step-by-step testing approach, using Special:Homepage as a specific example.

Special:Homepage with the SE module is enabled by default for most new accounts on participating wikis, e.g. testwiki, cswiki, svwiki, or arwiki. On betacluster, Special:Homepage is enabled not only on those wikis but also on enwiki. For existing user accounts, Special:Homepage can be enabled with the Newcomer homepage option on the User profile tab of Special:Preferences.

This post adds more details on the QA process for catching event validation errors. Let's start with a story.

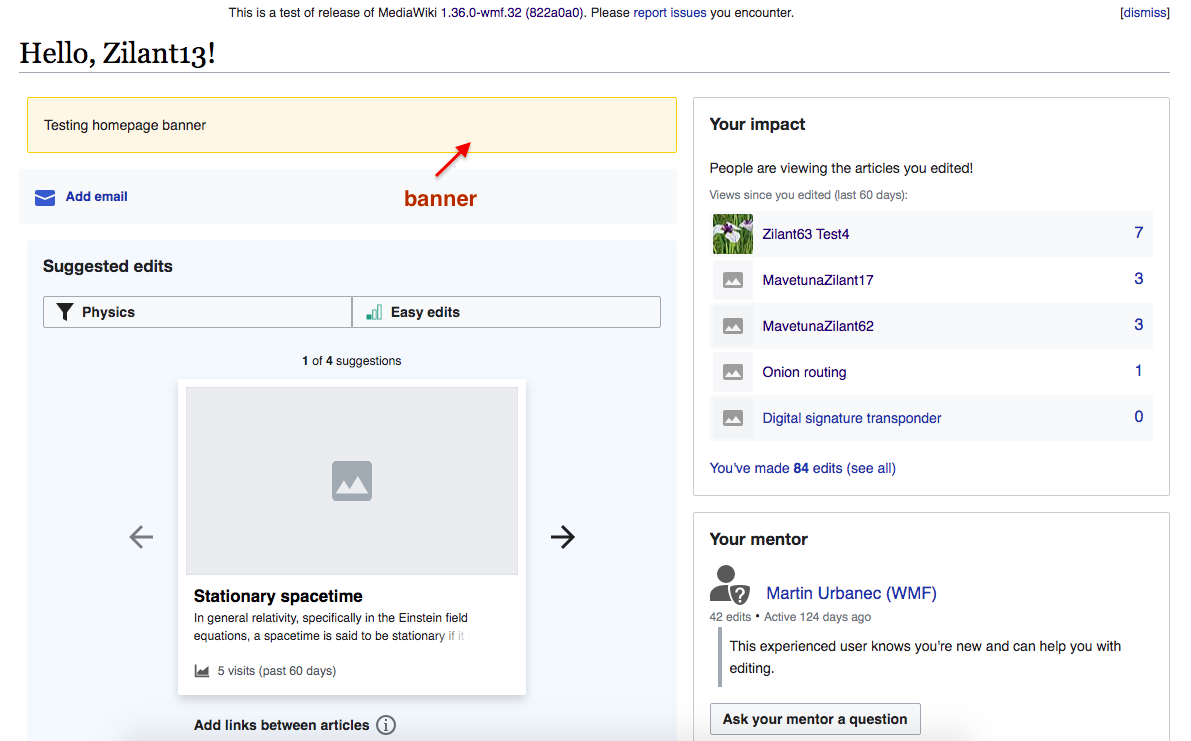

A banner module was added to Special:Homepage to inform visiting users about new community events or campaigns.

Data on banner clicks would provide valuable information on how Special:Homepage helps communities increase user participation. To measure how many users click on the banner, a new event was added to the HomepageModule schema.

However, after the banner module was deployed, an issue was reported: HomepageModule events were failing validation. Since the QA task was to verify that the event (a user clicking on the banner) is recorded correctly, the question is: why were the validation errors not caught during testing?

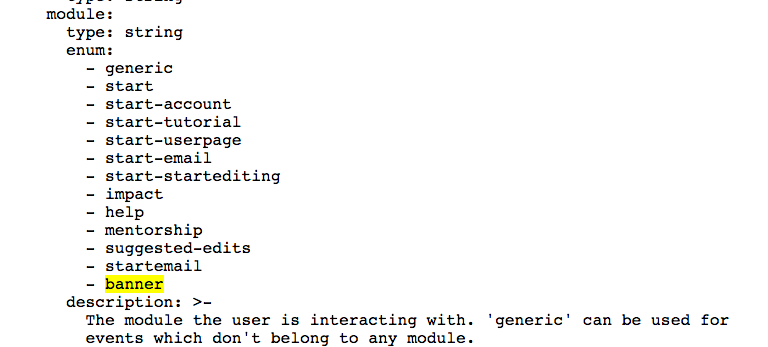

Errors (or oversights) can creep in at several places. To minimize failures and ensure that events are recorded as expected, testing should happen in stages. The first step is to check the schema itself: open the HomepageModule schema description page and confirm that the banner module has indeed been added and that the expected actions, including link-click, are listed:

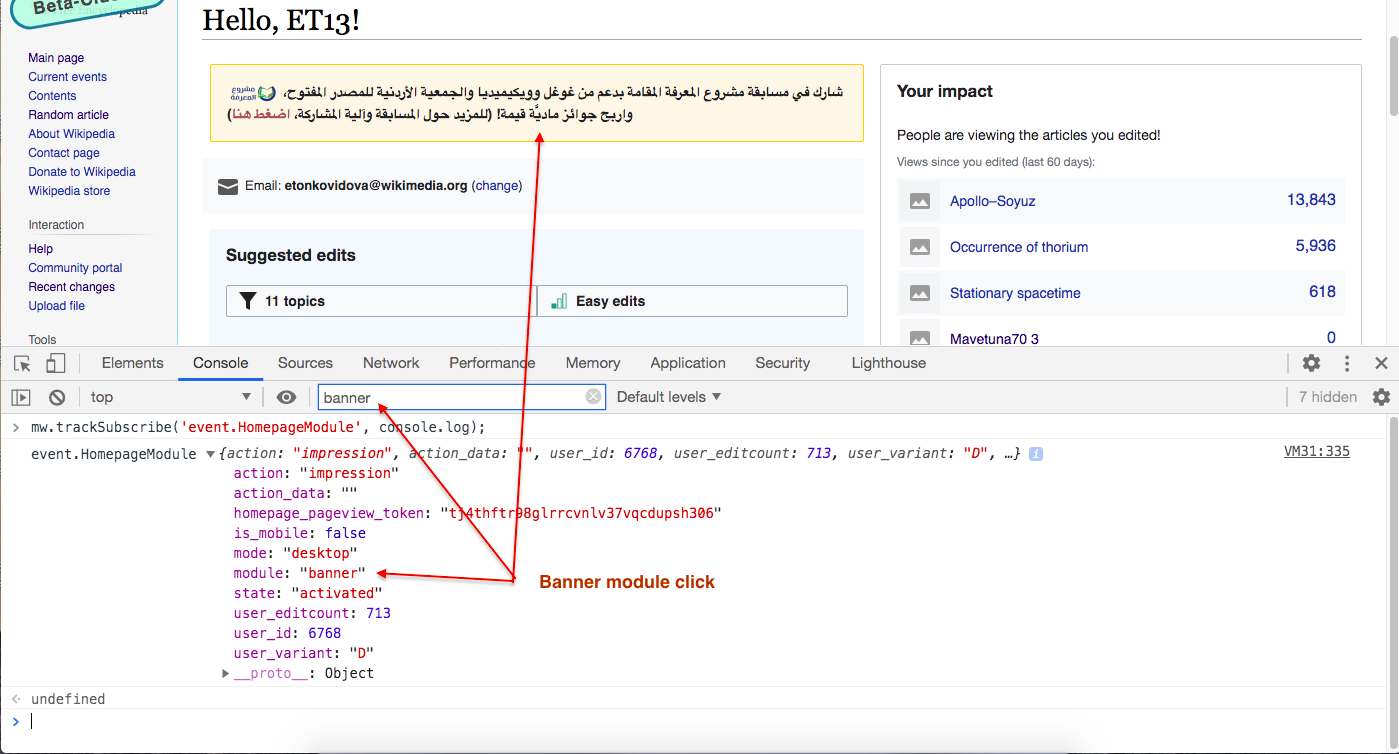

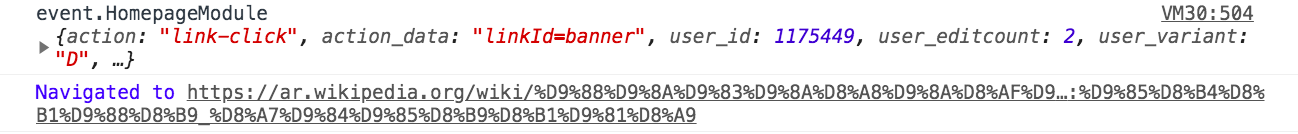
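The same check can be scripted once the schema JSON is at hand, which helps when a schema has long enum lists. This is a minimal sketch only: the schema fragment below is hypothetical, and the property names `module` and `action` and their values are assumptions for illustration, so copy the real names from the schema page before relying on it.

```javascript
// Minimal sketch: report which expected enum values a schema does NOT list.
// HYPOTHETICAL schema fragment: property names and values are illustrative
// assumptions, not the real HomepageModule schema.
const homepageModuleSchema = {
  properties: {
    module: { type: 'string', enum: ['start', 'banner'] },
    action: { type: 'string', enum: ['impression', 'link-click'] }
  }
};

// Returns the values from `expected` that are missing from the enum of `property`.
// A property without an enum (or a missing property) counts as allowing nothing.
function missingEnumValues(schema, property, expected) {
  const prop = schema.properties && schema.properties[property];
  const allowed = prop && Array.isArray(prop.enum) ? prop.enum : [];
  return expected.filter((value) => !allowed.includes(value));
}

console.log(missingEnumValues(homepageModuleSchema, 'module', ['banner'])); // []
// 'banner-dismiss' is a made-up action used only to show a failing check.
console.log(missingEnumValues(homepageModuleSchema, 'action', ['link-click', 'banner-dismiss']));
```

An empty result means every expected value is listed; anything returned is a value the front end might send but the schema would reject.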

Next, trigger the user action: visit Special:Homepage, click on the banner, and check whether the event is recorded. A couple of extremely helpful JavaScript snippets can be entered into the browser Console to view the events.

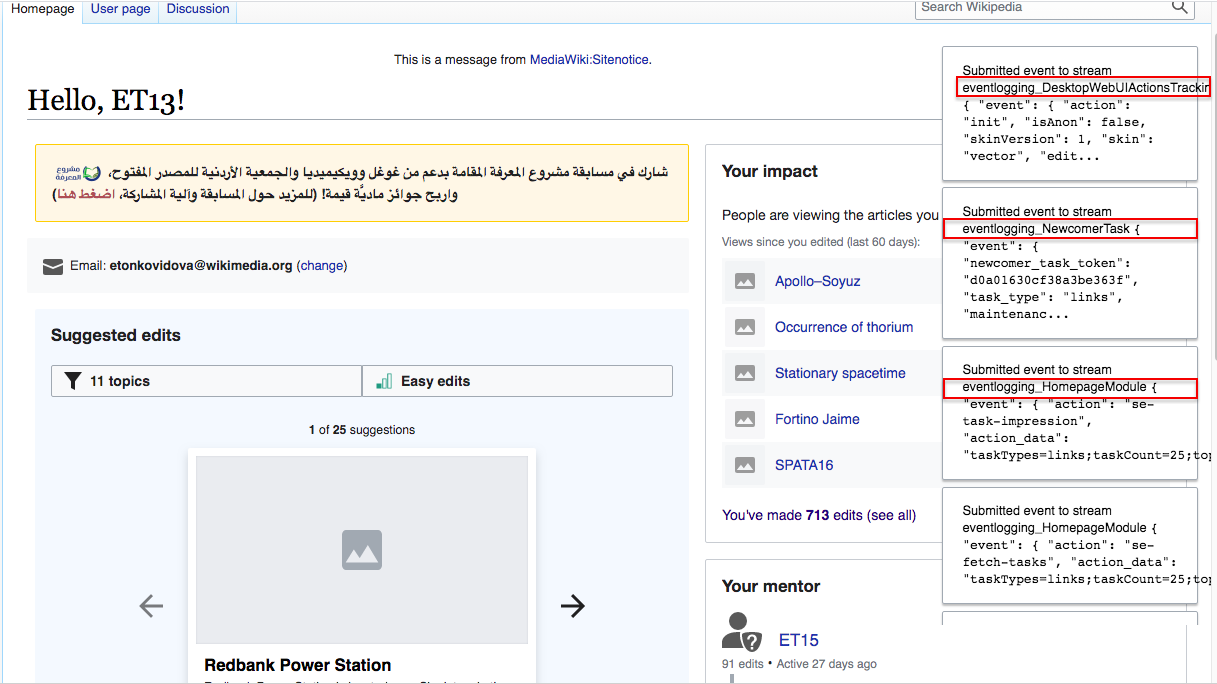

1. Showing all instrumentation schemas on a page (credits to @Catrope)

```javascript
mw.loader.using( 'mediawiki.api' ).then( function () {
	new mw.Api().saveOption( 'eventlogging-display-web', '1' );
} );
```

Here is how it looks: Special:Homepage has a few schemas:

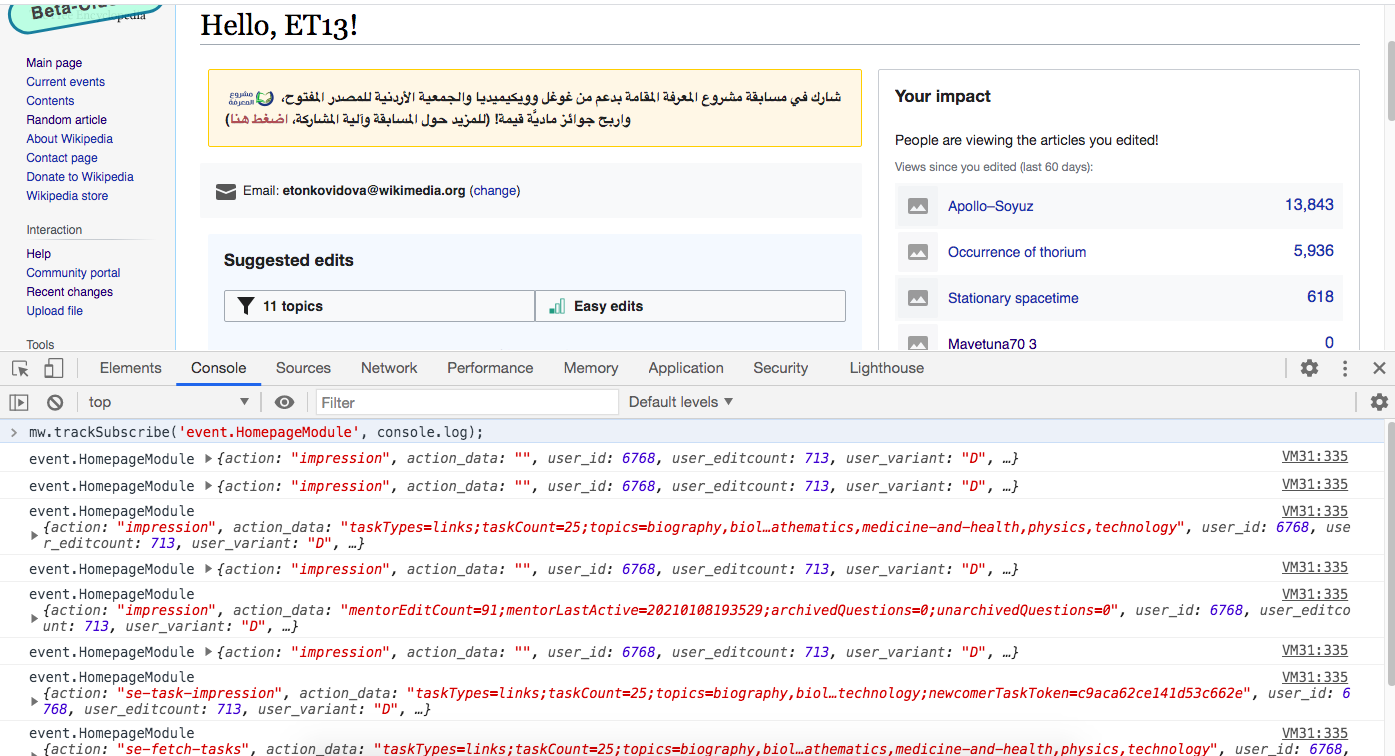

2. Showing HomepageModule schema events on a page (credits to @kostajh)

```javascript
mw.trackSubscribe( 'event.HomepageModule', console.log );
```

The HomepageModule schema events will be visible in the Console.

The Persistent log checkbox should be checked; otherwise the banner click event won't be present in the Console, because clicking the banner navigates away from the page.

The banner-related events can then be filtered and reviewed for completeness.
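The filtering can also be done at subscription time, so that only banner events reach the Console. This is a sketch under an assumption: it supposes the event payload has a `module` field and that banner events use the value `'banner'`; check the schema page for the actual field names. Logging directly (rather than collecting events into an array) matters here, since a JavaScript variable does not survive the navigation caused by the click, while Persistent log keeps Console output.

```javascript
// Minimal sketch: log only banner-related HomepageModule events.
// ASSUMPTION: banner events carry `module: 'banner'` in their payload.
function isBannerEvent(data) {
  return Boolean(data) && data.module === 'banner';
}

// Only runs where MediaWiki's `mw` global exists (i.e. the browser Console
// on Special:Homepage); mw.trackSubscribe calls the handler with (topic, data).
if (typeof mw !== 'undefined') {
  mw.trackSubscribe('event.HomepageModule', (topic, data) => {
    if (isBannerEvent(data)) {
      console.log(topic, data);
    }
  });
}
```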

This completes the front-end instrumentation testing for the new banner module. Does that mean event validation is fine too? Not necessarily. Front-end testing can be done reliably in betalabs, but validation happens server-side and often cannot be observed from the front end. Here is the twist: betalabs presently has no convenient way to check for eventlogging validation errors. Only the production logstash gives the full picture of eventlogging validation errors for a schema.
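To illustrate why an event that shows up fine in the Console can still be rejected: validation compares every field of the event against the schema. The sketch below implements only a tiny subset of JSON Schema checks (`required`, primitive `type`, and `enum`) against a hypothetical schema fragment whose field names and values are assumptions; it is not how EventGate performs validation in production, just a way to reason about what can fail.

```javascript
// Minimal sketch of schema validation: checks `required`, primitive `type`,
// and `enum` only. A real JSON Schema validator covers many more keywords.
// HYPOTHETICAL schema fragment: field names and values are assumptions.
const schema = {
  required: ['module', 'action'],
  properties: {
    module: { type: 'string', enum: ['start', 'banner'] },
    action: { type: 'string', enum: ['impression', 'link-click'] },
    user_editcount: { type: 'integer' }
  }
};

// Maps a JSON Schema primitive type name to a JavaScript check.
function typeMatches(value, type) {
  if (type === 'integer') return Number.isInteger(value);
  if (type === 'null') return value === null;
  if (type === 'array') return Array.isArray(value);
  if (type === 'object') return value !== null && typeof value === 'object' && !Array.isArray(value);
  return typeof value === type; // 'string', 'number', 'boolean'
}

// Returns human-readable validation errors (an empty array means valid).
function validate(event, schema) {
  const errors = [];
  for (const field of schema.required || []) {
    if (!(field in event)) errors.push(`missing required field "${field}"`);
  }
  for (const [field, value] of Object.entries(event)) {
    const rule = schema.properties[field];
    if (!rule) continue; // this sketch ignores fields the schema does not describe
    if (rule.type && !typeMatches(value, rule.type)) {
      errors.push(`"${field}" should be of type ${rule.type}`);
    }
    if (rule.enum && !rule.enum.includes(value)) {
      errors.push(`"${field}" value ${JSON.stringify(value)} is not in the enum`);
    }
  }
  return errors;
}

// Both events would be sent by the front end; only the first one is valid.
console.log(validate({ module: 'banner', action: 'link-click' }, schema)); // []
console.log(validate({ module: 'banner', action: 'click' }, schema));
```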


What is logstash? From the Wikitech page:

Logstash is a tool for managing events and logs. When used generically, the term encompasses a larger system of log collection, processing, storage and searching activities.

And from this logstash introduction:

Logstash is an open-source, centralized, events and logging manager. It is a part of the ELK (ElasticSearch, Logstash, Kibana) stack.

All of the above sounds very helpful for proper instrumentation QA, but the betalabs logstash presently cannot provide any information on eventlogging validation errors. The only way to see what gets stored by betalabs eventlogging is to consume the Kafka topic of the schema being tested (and grep the output for the event info):

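```shell
# Run on deployment-eventlog05: consume (-C) the eventlogging_HomepageModule topic (-t)
# from the given broker (-b), starting 10 messages from the end (-o -10) and stopping after 10 (-c 10).
kafkacat -C -t eventlogging_HomepageModule -b deployment-kafka-jumbo-2.deployment-prep.eqiad.wmflabs:9092 -c 10 -o -10
```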
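Instead of grep, the kafkacat output can be filtered with a short script, for example after saving it to a file. This is a sketch under assumptions: kafkacat prints one message per line, each message is assumed to be an eventlogging JSON capsule with the schema fields nested under `event`, and banner events are assumed to carry `event.module === 'banner'`. The sample lines are made up for illustration.

```javascript
// Minimal sketch: pick banner events out of kafkacat output (one JSON message per line).
// ASSUMPTIONS: schema fields live under `event`; banner events have event.module === 'banner'.
function bannerEventsFromLines(text) {
  const results = [];
  for (const line of text.split('\n')) {
    const trimmed = line.trim();
    if (!trimmed.startsWith('{')) continue; // skip blank lines and anything that is not a JSON object
    let message;
    try {
      message = JSON.parse(trimmed);
    } catch (err) {
      continue; // skip lines that are not valid JSON
    }
    if (message.event && message.event.module === 'banner') {
      results.push(message);
    }
  }
  return results;
}

// Illustrative input only, not real captured events.
const sample = [
  '{"schema":"HomepageModule","event":{"module":"banner","action":"link-click"}}',
  '{"schema":"HomepageModule","event":{"module":"start","action":"impression"}}',
  'not json'
].join('\n');

console.log(bannerEventsFromLines(sample).length); // 1
```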

As a result, closely monitoring the production logstash becomes really important. As soon as the updated schema is deployed to testwiki, the eventlogging testing should be repeated to make sure that front-end actions do not result in validation errors. The logstash dashboard eventgate-validation is a perfect reporting tool and should be checked while testing instrumentation in production.

Read more:

- Event Platform/Instrumentation How To - specifically, how to check eventlogging on betacluster,

- Diving into Wikipedia's ocean of errors - a developer's perspective on how to keep the level of reported errors in logstash manageable

- Wikimedia Product/Better use of data