Because of the changes to ItemId formatting on the entity page, we need to make sure that we do not significantly degrade performance.

To track performance, we need a performance test suite that is run every time we change the number of ItemIds rendered using the "new code".

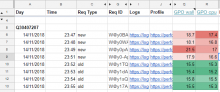

The performance analysis is done using this JMeter configuration: https://phabricator.wikimedia.org/diffusion/EWBA/browse/master/jmeter/test_wd_entity_page_performance.jmx
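To run that configuration headlessly (for example from a CI job), the test plan can be handed to JMeter on the command line. The Python sketch below is only an illustration. It assumes a `jmeter` executable on `PATH` and a local checkout where the test plan sits at `jmeter/test_wd_entity_page_performance.jmx`. The helper names `build_jmeter_command` and `run_jmeter` are hypothetical. The flags themselves (`-n` for non-GUI mode, `-t` for the test plan, `-l` for the results file) are standard JMeter options.

```python
import subprocess
from pathlib import Path
from typing import List


def build_jmeter_command(jmx: Path, results: Path, jmeter: str = "jmeter") -> List[str]:
    """Build a non-GUI JMeter command line (hypothetical helper).

    -n : run JMeter in non-GUI (headless) mode
    -t : the .jmx test plan to execute
    -l : file that sample results (JTL) are written to
    """
    return [jmeter, "-n", "-t", str(jmx), "-l", str(results)]


def run_jmeter(jmx: Path, results: Path) -> None:
    """Run the test plan; raises CalledProcessError if JMeter exits non-zero."""
    subprocess.run(build_jmeter_command(jmx, results), check=True)
```

For example, `run_jmeter(Path("jmeter/test_wd_entity_page_performance.jmx"), Path("baseline.jtl"))` would run the plan once and write its samples to `baseline.jtl`. The path is an assumption about where the repository is checked out.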

For instructions on running the JMeter analysis, refer to the README: https://phabricator.wikimedia.org/diffusion/EWBA/browse/master/jmeter/README.md
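You can make the "do not significantly degrade performance" requirement a repeatable check. Compare results from a baseline run against a run with the new ItemId formatting code. The Python sketch below assumes both runs were saved as CSV JTL files whose header row contains `label` and `elapsed` columns, which matches JMeter's default CSV output. The 10% tolerance and the function names are hypothetical choices. They are not part of the existing test suite.

```python
import csv
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List


def median_elapsed_by_label(jtl_lines: Iterable[str]) -> Dict[str, float]:
    """Median response time (ms) per sampler label from CSV JTL lines with a header row."""
    samples: Dict[str, List[int]] = defaultdict(list)
    for row in csv.DictReader(jtl_lines):
        samples[row["label"]].append(int(row["elapsed"]))
    return {label: statistics.median(values) for label, values in samples.items()}


def find_regressions(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    tolerance: float = 0.10,  # hypothetical threshold: flag >10% slowdowns
) -> Dict[str, float]:
    """Return {label: relative slowdown} for labels slower than `tolerance` allows."""
    regressions: Dict[str, float] = {}
    for label, base in baseline.items():
        new = candidate.get(label)
        # Skip labels missing from the candidate run and zero baselines.
        if new is None or base == 0:
            continue
        change = (new - base) / base
        if change > tolerance:
            regressions[label] = change
    return regressions
```

With two result files, `find_regressions(median_elapsed_by_label(open("baseline.jtl")), median_elapsed_by_label(open("candidate.jtl")))` returns an empty dict when no sampler slowed down by more than the tolerance. Medians are used instead of means so that a few outlier requests do not dominate the comparison.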