From time to time, Gerrit is unable to access its database on m2-master.eqiad.wmnet: the connection is apparently lost unexpectedly while it is being established. The connection attempt fails with:

```
Can not read response from server. Expected to read 4 bytes, read 0 bytes before connection was unexpectedly lost.
```

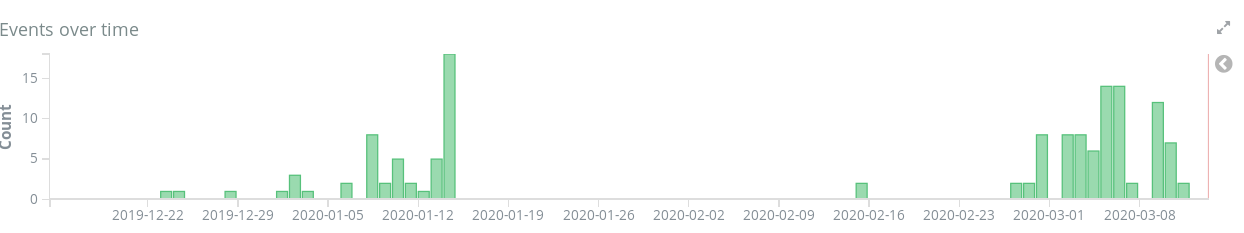

Logstash query `host:gerrit1001 AND "Cannot open ReviewDb"`: https://logstash.wikimedia.org/goto/a5e73386bf3e1a2f0650577153e9d5d8

Gerrit database parameters (`gerrit.config`):

```
[database]
	type = mysql
	hostname = m2-master.eqiad.wmnet
	database = reviewdb
	username = gerrit
	url = jdbc:mysql://m2-master.eqiad.wmnet/reviewdb?characterSetResults=utf8&characterEncoding=utf8&connectionCollation=utf8_unicode_ci
```
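The trace below shows `com.google.gwtorm.jdbc.SimpleDataSource.getConnection` calling the driver directly, i.e. a fresh connection is opened for the request, so every request goes through the full MySQL handshake. To check whether the failure also happens outside Gerrit, one could open connections in a loop with the same JDBC URL. This is a minimal sketch, assuming MySQL Connector/J is on the classpath and the password is exported in a hypothetical `REVIEWDB_PASSWORD` environment variable; the class `ReviewDbProbe`, its attempt count and its pause are illustrative choices, not anything Gerrit uses.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical connection probe: repeatedly opens and closes a fresh JDBC
 * connection (no pooling) and records the attempts that fail.
 */
public class ReviewDbProbe {

    /** One failed connection attempt. */
    public static final class Attempt {
        public final int index;          // 0-based attempt number
        public final long elapsedMillis; // time spent before the failure surfaced
        public final String error;       // driver exception message

        Attempt(int index, long elapsedMillis, String error) {
            this.index = index;
            this.elapsedMillis = elapsedMillis;
            this.error = error;
        }
    }

    /** Opens {@code attempts} connections, pausing between them, and returns the failures. */
    public static List<Attempt> probe(String url, String user, String password,
                                      int attempts, long pauseMillis) throws InterruptedException {
        List<Attempt> failures = new ArrayList<>();
        for (int i = 0; i < attempts; i++) {
            long start = System.nanoTime();
            try (Connection ignored = DriverManager.getConnection(url, user, password)) {
                // Reaching this point means the handshake and authentication completed.
            } catch (SQLException e) {
                long elapsedMillis = (System.nanoTime() - start) / 1_000_000;
                failures.add(new Attempt(i, elapsedMillis, e.getMessage()));
            }
            Thread.sleep(pauseMillis);
        }
        return failures;
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumption: the password is exported in this hypothetical environment variable.
        String password = System.getenv("REVIEWDB_PASSWORD");
        if (password == null) {
            System.err.println("REVIEWDB_PASSWORD is not set");
            return;
        }
        String url = "jdbc:mysql://m2-master.eqiad.wmnet/reviewdb"
                + "?characterSetResults=utf8&characterEncoding=utf8&connectionCollation=utf8_unicode_ci";
        for (Attempt a : probe(url, "gerrit", password, 1000, 100)) {
            System.out.println("attempt " + a.index + " failed after "
                    + a.elapsedMillis + " ms: " + a.error);
        }
    }
}
```

Keeping the elapsed time of each failure makes it easier to line failures up with database-side logs by timestamp, and running the same probe from another host could help tell whether the problem is specific to gerrit1001.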

Since we do not have much detail on the Gerrit side, I am wondering whether there are indications of a failure on the database side :-\

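A Java trace showing the error occurring when opening a connection. The failure happens during the MySQL handshake (`MysqlIO.doHandshake`), while the client reads the server's response during authentication: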

```
[2020-03-05 08:09:15,471] [SshCommandStart-2] WARN com.google.gerrit.sshd.CommandFactoryProvider : Cannot start command "gerrit query --format json --all-approvals --comments --commit-message --current-patch-set --dependencies --files --patch-sets --submit-records change:577096" for user jenkins-bot
com.google.inject.ProvisionException: Unable to provision, see the following errors:

1) Cannot open ReviewDb
  at com.google.gerrit.server.util.ThreadLocalRequestContext$1.provideReviewDb(ThreadLocalRequestContext.java:69) (via modules: com.google.gerrit.server.config.GerritGlobalModule -> com.google.gerrit.server.util.ThreadLocalRequestContext$1)
  while locating com.google.gerrit.reviewdb.server.ReviewDb
    for the 1st parameter of com.google.gerrit.server.query.change.OutputStreamQuery.<init>(OutputStreamQuery.java:106)
  while locating com.google.gerrit.server.query.change.OutputStreamQuery
    for field at com.google.gerrit.sshd.commands.Query.processor(Query.java:27)
  while locating com.google.gerrit.sshd.commands.Query
  while locating org.apache.sshd.server.Command annotated with CommandName[gerrit query]

1 error
	at com.google.inject.internal.InternalProvisionException.toProvisionException(InternalProvisionException.java:226)
	at com.google.inject.internal.InjectorImpl$1.get(InjectorImpl.java:1053)
	at com.google.gerrit.sshd.DispatchCommand.start(DispatchCommand.java:95)
	at com.google.gerrit.sshd.DispatchCommand.start(DispatchCommand.java:122)
	at com.google.gerrit.sshd.CommandFactoryProvider$Trampoline.onStart(CommandFactoryProvider.java:208)
	at com.google.gerrit.sshd.CommandFactoryProvider$Trampoline.access$300(CommandFactoryProvider.java:111)
	at com.google.gerrit.sshd.CommandFactoryProvider$Trampoline$1.run(CommandFactoryProvider.java:167)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)
	at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)
	at com.google.gerrit.server.git.WorkQueue$Task.run(WorkQueue.java:558)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:748)
Caused by: com.google.gwtorm.server.OrmException: Cannot open database connection
	at com.google.gwtorm.jdbc.Database.newConnection(Database.java:130)
	at com.google.gwtorm.jdbc.JdbcSchema.<init>(JdbcSchema.java:43)
	at com.google.gerrit.reviewdb.server.ReviewDb_Schema_GwtOrm$$13.<init>(Unknown Source)
	at com.google.gerrit.reviewdb.server.ReviewDb_Schema_GwtOrm$$13_Factory_GwtOrm$$14.open(Unknown Source)
	at com.google.gwtorm.jdbc.Database.open(Database.java:122)
	at com.google.gerrit.server.schema.NotesMigrationSchemaFactory.open(NotesMigrationSchemaFactory.java:39)
	at com.google.gerrit.server.schema.NotesMigrationSchemaFactory.open(NotesMigrationSchemaFactory.java:25)
	at com.google.gerrit.server.config.RequestScopedReviewDbProvider.get(RequestScopedReviewDbProvider.java:46)
	at com.google.gerrit.server.config.RequestScopedReviewDbProvider.get(RequestScopedReviewDbProvider.java:27)
	at com.google.gerrit.server.util.ThreadLocalRequestContext$1.provideReviewDb(ThreadLocalRequestContext.java:69)
	at com.google.gerrit.server.util.ThreadLocalRequestContext$1$$FastClassByGuice$$75e0eb90.invoke(<generated>)
	at com.google.inject.internal.ProviderMethod$FastClassProviderMethod.doProvision(ProviderMethod.java:264)
	at com.google.inject.internal.ProviderMethod.doProvision(ProviderMethod.java:173)
	at com.google.inject.internal.InternalProviderInstanceBindingImpl$CyclicFactory.provision(InternalProviderInstanceBindingImpl.java:185)
	at com.google.inject.internal.InternalProviderInstanceBindingImpl$CyclicFactory.get(InternalProviderInstanceBindingImpl.java:162)
	at com.google.inject.internal.SingleParameterInjector.inject(SingleParameterInjector.java:42)
	at com.google.inject.internal.SingleParameterInjector.getAll(SingleParameterInjector.java:65)
	at com.google.inject.internal.ConstructorInjector.provision(ConstructorInjector.java:113)
	at com.google.inject.internal.ConstructorInjector.construct(ConstructorInjector.java:91)
	at com.google.inject.internal.ConstructorBindingImpl$Factory.get(ConstructorBindingImpl.java:306)
	at com.google.inject.internal.SingleFieldInjector.inject(SingleFieldInjector.java:52)
	at com.google.inject.internal.MembersInjectorImpl.injectMembers(MembersInjectorImpl.java:147)
	at com.google.inject.internal.ConstructorInjector.provision(ConstructorInjector.java:124)
	at com.google.inject.internal.ConstructorInjector.construct(ConstructorInjector.java:91)
	at com.google.inject.internal.ConstructorBindingImpl$Factory.get(ConstructorBindingImpl.java:306)
	at com.google.inject.internal.FactoryProxy.get(FactoryProxy.java:62)
	at com.google.inject.internal.InjectorImpl$1.get(InjectorImpl.java:1050)
	... 13 more
Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

The last packet successfully received from the server was 3,474 milliseconds ago. The last packet sent successfully to the server was 0 milliseconds ago.
	at sun.reflect.GeneratedConstructorAccessor582.newInstance(Unknown Source)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
	at com.mysql.jdbc.Util.handleNewInstance(Util.java:418)
	at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989)
	at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3559)
	at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3459)
	at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3900)
	at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:873)
	at com.mysql.jdbc.MysqlIO.proceedHandshakeWithPluggableAuthentication(MysqlIO.java:1710)
	at com.mysql.jdbc.MysqlIO.doHandshake(MysqlIO.java:1226)
	at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2194)
	at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2225)
	at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2024)
	at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:779)
	at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)
	at sun.reflect.GeneratedConstructorAccessor63.newInstance(Unknown Source)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
	at com.mysql.jdbc.Util.handleNewInstance(Util.java:418)
	at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:389)
	at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:330)
	at com.google.gwtorm.jdbc.SimpleDataSource.getConnection(SimpleDataSource.java:104)
	at com.google.gwtorm.jdbc.Database.newConnection(Database.java:128)
	... 39 more
Caused by: java.io.EOFException: Can not read response from server. Expected to read 4 bytes, read 0 bytes before connection was unexpectedly lost.
	at com.mysql.jdbc.MysqlIO.readFully(MysqlIO.java:3011)
	at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3469)
	... 57 more
```
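The innermost cause is an `EOFException` raised while reading a packet header. In the MySQL client/server protocol every packet starts with a 4-byte header (a 3-byte little-endian payload length followed by a 1-byte sequence id), so "Expected to read 4 bytes, read 0 bytes" means the socket was closed, by the server or something in between, before any part of the next packet arrived. The sketch below only illustrates that read; the class `PacketHeaderReader` is hypothetical, and it is modelled on the Connector/J error message rather than copied from `MysqlIO.readFully`.

```java
import java.io.ByteArrayInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;

/** Hypothetical helper illustrating how a MySQL packet header is read. */
public class PacketHeaderReader {

    /** Payload length and sequence id decoded from a 4-byte packet header. */
    public static final class Header {
        public final int payloadLength;
        public final int sequenceId;

        Header(int payloadLength, int sequenceId) {
            this.payloadLength = payloadLength;
            this.sequenceId = sequenceId;
        }
    }

    /** Reads exactly len bytes; fails if the peer closes the stream first. */
    static void readFully(InputStream in, byte[] buf, int len) throws IOException {
        int total = 0;
        while (total < len) {
            int n = in.read(buf, total, len - total);
            if (n < 0) {
                // End of stream before the full header arrived.
                throw new EOFException("Can not read response from server. Expected to read "
                        + len + " bytes, read " + total
                        + " bytes before connection was unexpectedly lost.");
            }
            total += n;
        }
    }

    /** Decodes a 3-byte little-endian payload length, then a 1-byte sequence id. */
    public static Header readHeader(InputStream in) throws IOException {
        byte[] header = new byte[4];
        readFully(in, header, 4);
        int length = (header[0] & 0xff)
                | ((header[1] & 0xff) << 8)
                | ((header[2] & 0xff) << 16);
        int sequenceId = header[3] & 0xff;
        return new Header(length, sequenceId);
    }

    public static void main(String[] args) throws IOException {
        // A well-formed header announcing a 7-byte payload with sequence id 2.
        Header h = readHeader(new ByteArrayInputStream(new byte[] {7, 0, 0, 2}));
        System.out.println(h.payloadLength + " " + h.sequenceId); // prints "7 2"

        // An empty stream models a connection closed before the server replied.
        try {
            readHeader(new ByteArrayInputStream(new byte[0]));
        } catch (EOFException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

With an empty input, the sketch produces the same message as the innermost `Caused by:` line of the trace.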