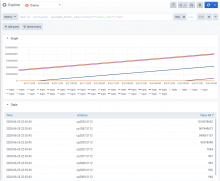

Looking across the fleet, the purged_event_lag metric is far above normal on four hosts: cp2033, cp1087, cp2037, and cp2039.

These servers are now depooled, so there's no present user impact.

I stumbled across this after being forwarded some user reports on Twitter: https://twitter.com/john_overholt/status/1276276247602044933

The reporter was on the US East Coast (actually just a few miles from me), so I looked at eqiad:

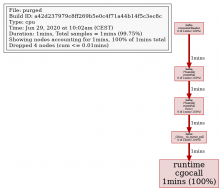

    ✔️ cdanis@cumin1001.eqiad.wmnet ~ 🕙🍺 sudo cumin 'A:cp-text and A:eqiad' 'curl -s --resolve en.wikipedia.org:443:127.0.0.1 https://en.wikipedia.org/wiki/Samuel_Osborne | grep Legacy||echo FAIL'
    8 hosts will be targeted:
    cp[1075,1077,1079,1081,1083,1085,1087,1089].eqiad.wmnet
    Confirm to continue [y/n]? y
    ===== NODE GROUP =====
    (7) cp[1075,1077,1079,1081,1083,1085,1089].eqiad.wmnet
    ----- OUTPUT of 'curl -s --resolv...egacy||echo FAIL' -----
    <h3><span class="mw-headline" id="Legacy">Legacy</span><span class="mw-editsection"><span class="mw-editsection-bracket">[</span><a href="/w/index.php?title=Samuel_Osborne&action=edit&section=1" title=" Edit section: Legacy">edit</a><span class="mw-editsection-bracket">]</span></span></h3>
    ===== NODE GROUP =====
    (1) cp1087.eqiad.wmnet
    ----- OUTPUT of 'curl -s --resolv...egacy||echo FAIL' -----
    FAIL
    ================
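
The check above asks each cache host for the article and greps for the "Legacy" section heading, so a host that prints FAIL is serving a copy without that section. For anyone wanting to repeat this without cumin, here is a rough sketch of the same idea run from a single machine. It is not what was run here: `fetch_page` and `check_hosts` are hypothetical helper names, and it assumes the cache hosts accept HTTPS connections on port 443 from wherever you run it. It uses curl's `--connect-to` option to force the connection to a given host while keeping the original hostname for TLS and the Host header.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical, and direct reachability of
# each cache host on port 443 is an assumption.

# fetch_page HOST URL
# Fetch URL, but connect to HOST:443 instead of whatever the URL resolves to.
fetch_page() {
    local host=$1 url=$2
    curl -s --max-time 10 --connect-to "::${host}:443" "$url"
}

# check_hosts URL MARKER HOST...
# Print "OK <host>" if the response contains MARKER, otherwise "FAIL <host>".
check_hosts() {
    local url=$1 marker=$2
    shift 2
    local host
    for host in "$@"; do
        if fetch_page "$host" "$url" | grep -q -- "$marker"; then
            echo "OK $host"
        else
            echo "FAIL $host"
        fi
    done
}
```

With those defined, something like `check_hosts https://en.wikipedia.org/wiki/Samuel_Osborne Legacy cp1085.eqiad.wmnet cp1087.eqiad.wmnet` would print one OK/FAIL line per host. Note that a FAIL here can also mean the host was unreachable, not only that it served stale content.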