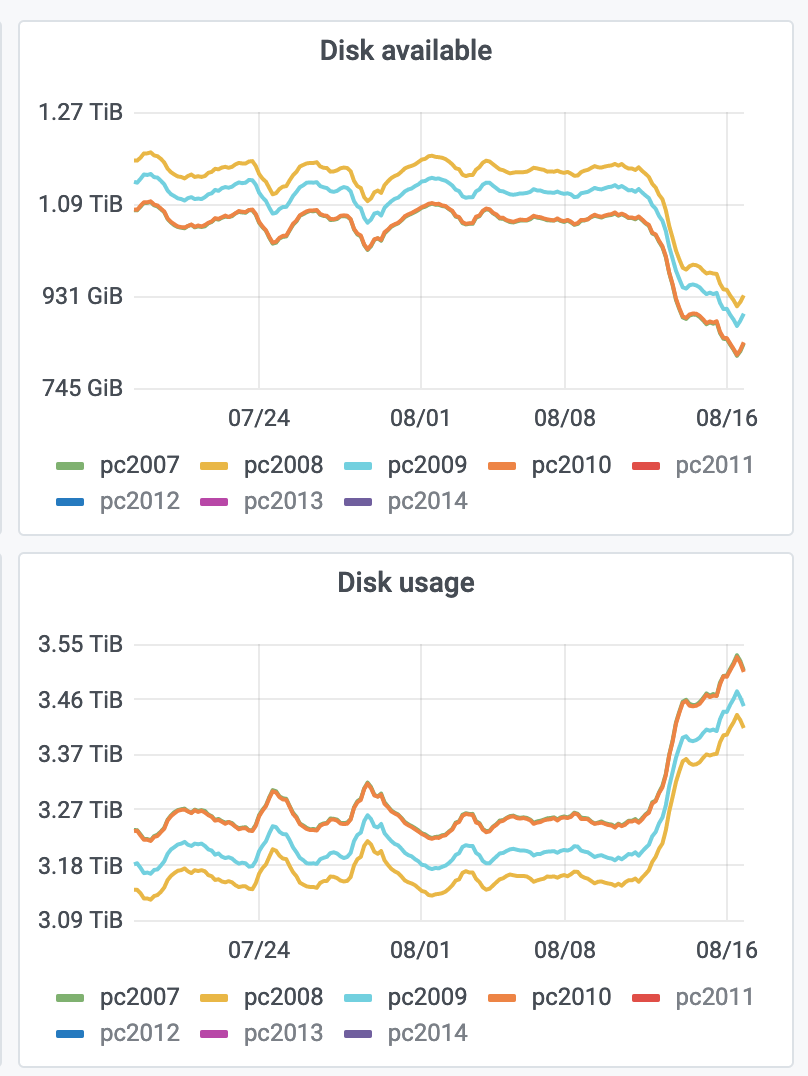

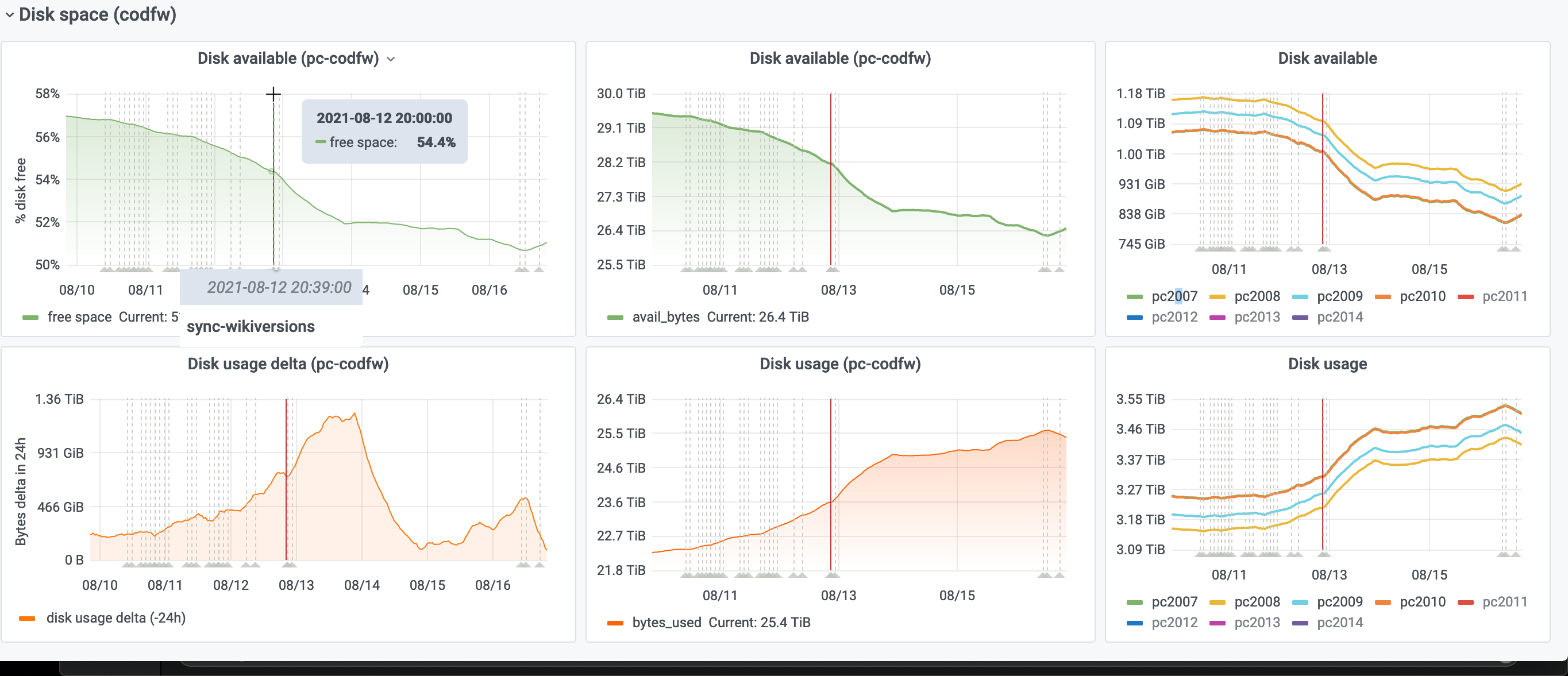

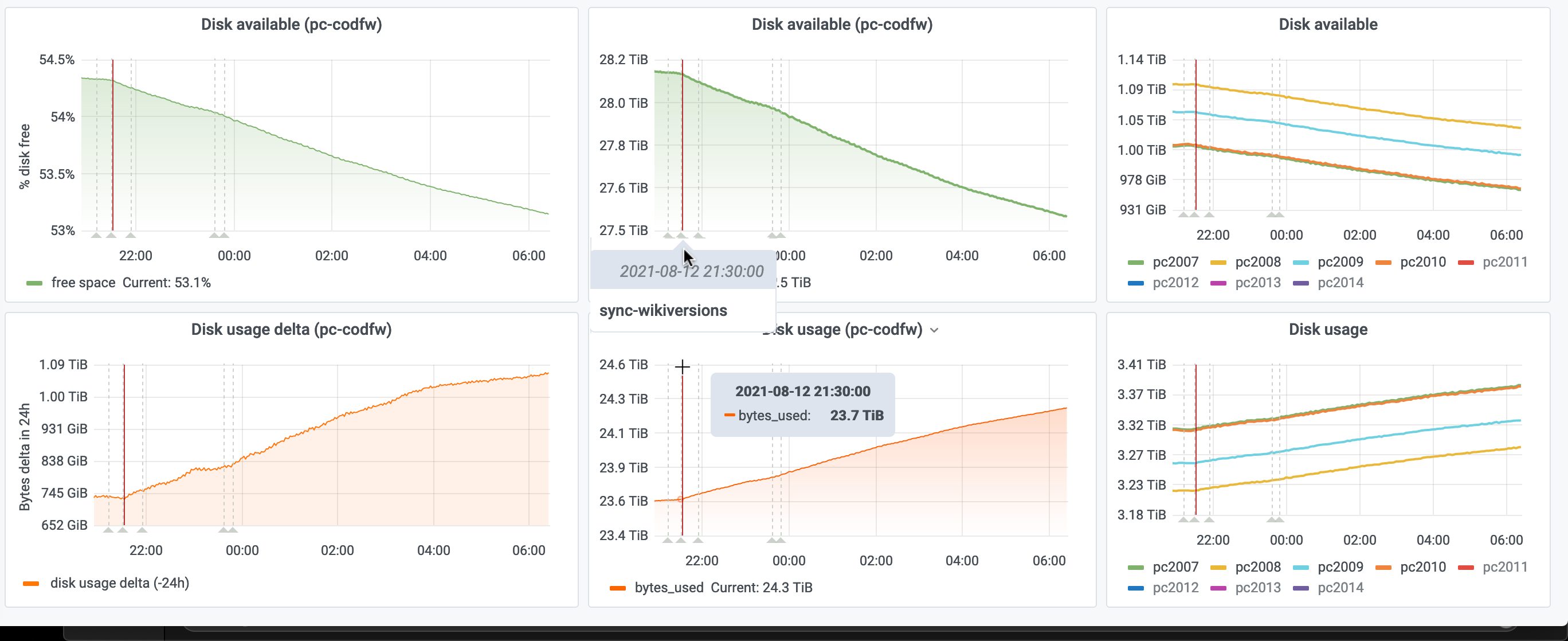

https://grafana.wikimedia.org/d/000000106/parser-cache?orgId=1&from=1626553020741&to=1629145020742

| Last 30 days |

|---|

| Last week |

|---|

| 12 Aug 2021 |

|---|

See also:

cc @ppelberg and Editing-team. I don't suspect DiscussionTools at this time; this is for your general awareness as a concerned third party around ParserCache at the moment (T280599, T280606). That said, if your team knows of a potential cause, such as one of your features consuming more parser cache space as of last week, let us know here!