The output of the embedded user.tokens module changes on every request. I'm not entirely sure whether this was on purpose.

Traced it back to https://github.com/wikimedia/mediawiki/commit/b1e4006b44

rMWb1e4006b4401: Allow for time-limited tokens

This is a problem because we minify this module, and by default every minification result is cached under a key that is the hash of the unminified contents.

As a result, we create and populate a new cache key on every page view for every logged-in user, and that key is never used again. We should implement a way to bypass the minification cache for modules like this.
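
To illustrate the churn, here is a minimal Python sketch of a content-addressed minification cache. It is not the actual ResourceLoader code: `MinifyCache`, `toy_minify`, `tokens_module`, `simulate_requests`, and the `bypass_cache` flag are all hypothetical names, and the use of MD5 for the key is an assumption. It only shows that content which differs on every request produces one dead cache entry per request, and that a bypass option would avoid this.

```python
import hashlib


def toy_minify(source: str) -> str:
    """Stand-in for a real minifier: collapses runs of whitespace."""
    return " ".join(source.split())


def tokens_module(edit_token: str) -> str:
    """Builds a simplified user.tokens payload (hypothetical, edit token only)."""
    return f'mw.user.tokens.set({{ "editToken": "{edit_token}" }});'


class MinifyCache:
    """Toy cache keyed by a hash of the unminified contents."""

    def __init__(self) -> None:
        self.store: dict[str, str] = {}

    def key_for(self, source: str) -> str:
        # Assumption: MD5 of the source; the real key format may differ.
        digest = hashlib.md5(source.encode("utf-8")).hexdigest()
        return f"resourceloader:filter:minify-js:{digest}"

    def minify(self, source: str, bypass_cache: bool = False) -> str:
        if bypass_cache:
            # Hypothetical bypass: minify without reading or writing the cache.
            return toy_minify(source)
        key = self.key_for(source)
        if key not in self.store:
            self.store[key] = toy_minify(source)
        return self.store[key]


def simulate_requests(tokens: list[str], bypass_cache: bool = False) -> int:
    """Minifies one tokens module per request; returns how many keys were written."""
    cache = MinifyCache()
    for token in tokens:
        cache.minify(tokens_module(token), bypass_cache=bypass_cache)
    return len(cache.store)
```

In this sketch, `simulate_requests(["aaa", "bbb", "ccc"])` writes three keys, one per request, because each token value changes the hash. With `bypass_cache=True` it writes none, at the cost of re-running the minifier each time.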

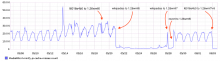

Example from localhost:

<script>...mw.loader.implement("user.tokens",function($,jQuery){mw.user.tokens.set({"editToken":"5da7f8da8cc2c12b40e63510d3b83f3754937d96+\\","patrolToken":"bc1e366f8f7806ed27d067667cdeebe554937d96+\\","watchToken":"c52e9fcc036b315fe8ba3e0135cb928d54937d96+\\"});},{},{},{});

/* cache key: alphawiki:resourceloader:filter:minify-js:7:aed9432283c8152b3eaff1761844e771 */