It was at 15m about three days ago. See https://en.wikipedia.org/wiki/Wikipedia:Village_pump_(technical)/Archive_136#Job_queue

Description

Details

Related Objects

- Mentioned In
  - rMW914d71f3ccbc: Temporary hack to drain excess refreshLinks jobs
  - rMWba91f0a2d339: Temporary hack to drain excess refreshLinks jobs
  - rOMWCa9b6107f1494: Bumped the $wgJobBackoffThrottling refreshLinks limit
  - rMW2a13b5de3b46: Made triggerOpportunisticLinksUpdate() jobs make use of parser cache
  - rMW140a0bd562d4: Made triggerOpportunisticLinksUpdate() jobs make use of parser cache
  - rMW6ab262666970: Removed duplicated jobs in triggerOpportunisticLinksUpdate()
  - rMW187fd647232a: Made triggerOpportunisticLinksUpdate() jobs make use of parser cache
  - rOPUP2205fa2b5407: Increase jobrunner::runners_basic
- Mentioned Here
  - rMWba91f0a2d339: Temporary hack to drain excess refreshLinks jobs

Event Timeline

Apr 30 13:50:29 <Krenair> Someone just pointed out in tech that enwiki has a ridiculously large job queue at the moment
Apr 30 16:44:53 <legoPanda> Betacommand: I see 10 million refreshlinks jobs???
Apr 30 21:49:58 <legoPanda> AaronSchulz: do you know why enwiki has 11m refreshLinks jobs queued?

May 07 16:25:20 <T13|mobile> [16:24:33] There's concerns that the enwp job queue is stuck since it's growing so much and pushing 20 million. Can someone peek and poke at it as needed?
May 07 16:25:20 <T13|mobile> [16:25:03] <MatmaRex> T13|mobile: i'd wager this is fallout from last saturday, when someone accidentally disabled the job queue
May 07 16:32:03 <T13|mobile> "jobs": 19977207
May 07 16:32:58 <MatmaRex> T13|mobile: just to reassure you, the job queue is (probably) working again, it was broken only for a short while
May 07 16:35:14 <legoktm> T13|mobile: looks like they're all refreshLinks jobs
May 07 16:37:00 <MatmaRex> T13|mobile: some jobs actually generate more jobs when executed :D
May 07 16:38:40 <legoktm> well, it's executing jobs
May 07 16:40:36 <MatmaRex> T13|mobile: for example: (simplifying, since i don't know exactly how it works) say you edit a template used on 200 000 pages. rather than generate 200 000 jobs to update the pages immediately, which itself would take a long time, MediaWiki instead generates (say) 100 jobs, each of which generates 2000 jobs, each of which actually updates a page.
May 07 16:45:22 <legoktm> T13|mobile: don't complain about job queue length when you're the one who made it so long! :P
May 07 17:01:23 <manybubbles> oh my that is a lot of jobs
May 07 18:15:52 <T13|away> legoktm: would my guess that part of the reason the jobqueue is still ever expanding might be related to SULF?
May 07 18:39:18 <T13|away> [18:15:52] legoktm: would my guess that part of the reason the jobqueue is still ever expanding might be related to SULF?
May 08 18:16:16 <Betacommand> Are ops aware of the enwiki job queue issue?
May 08 18:19:45 <Glaisher> "jobs": 21894746,
May 08 18:20:38 <Krenair> the other job types seem relatively low
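MatmaRex's "jobs that generate jobs" explanation can be illustrated with a toy simulation. This is a hypothetical model, not MediaWiki's refreshLinks code: the `root`/`batch`/`leaf` job kinds, the `batch_size` parameter and the strict FIFO ordering are all assumptions chosen to mirror the 200 000 → 100 × 2 000 example. It shows why the queue can keep growing even while runners are busily executing jobs.

```python
from collections import Counter, deque


def simulate(num_pages: int, batch_size: int) -> tuple[Counter, int]:
    """Drain a toy FIFO job queue for a single template edit.

    Hypothetical model: one "root" job splits the affected pages into
    batches of `batch_size`; each "batch" job enqueues one "leaf" job per
    page; a "leaf" job would refresh a single page and enqueues nothing.
    Returns (jobs executed per kind, peak queue length).
    """
    queue = deque([("root", 0, num_pages)])  # (kind, first page, end page)
    executed = Counter()
    peak = len(queue)
    while queue:
        kind, start, end = queue.popleft()
        executed[kind] += 1
        if kind == "root":
            # Fan out into batch jobs covering [start, end).
            for lo in range(start, end, batch_size):
                queue.append(("batch", lo, min(lo + batch_size, end)))
        elif kind == "batch":
            # Fan out into one leaf job per page in the batch.
            for page in range(start, end):
                queue.append(("leaf", page, page + 1))
        # Leaf jobs would do the actual page update here and spawn nothing.
        peak = max(peak, len(queue))
    return executed, peak


executed, peak = simulate(200_000, 2_000)
print(dict(executed), peak)  # {'root': 1, 'batch': 100, 'leaf': 200000} 200000
```

In this model a single edit starts as one queued job, yet the queue peaks at 200 000 entries once every batch job has fanned out, which is consistent with a queue that keeps growing while jobs are being executed.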

I took a quick look at the job runners, and they seem to be running fine without being starved of resources. Notably, only the refreshLinks jobs appear to be piling up.

Change 209719 had a related patch set uploaded (by Aaron Schulz):

Increase jobrunner::runners_basic

Change 209852 had a related patch set uploaded (by Aaron Schulz):

Made triggerOpportunisticLinksUpdate() jobs make use of parser cache

Change 209877 had a related patch set uploaded (by Aaron Schulz):

Removed duplicated jobs in triggerOpportunisticLinksUpdate()

Now past 25.6m. I made edits to templates as far back as April 19th that haven't filtered through to the articles yet.

Change 209852 merged by jenkins-bot:

Made triggerOpportunisticLinksUpdate() jobs make use of parser cache

Change 210243 had a related patch set uploaded (by Aaron Schulz):

Made triggerOpportunisticLinksUpdate() jobs make use of parser cache

Change 210244 had a related patch set uploaded (by Aaron Schulz):

Made triggerOpportunisticLinksUpdate() jobs make use of parser cache

Change 209877 merged by jenkins-bot:

Removed duplicated jobs in triggerOpportunisticLinksUpdate()

Change 210244 merged by jenkins-bot:

Made triggerOpportunisticLinksUpdate() jobs make use of parser cache

Change 210243 merged by jenkins-bot:

Made triggerOpportunisticLinksUpdate() jobs make use of parser cache

Change 210246 had a related patch set uploaded (by Aaron Schulz):

Bumped the $wgJobBackoffThrottling refreshLinks limit

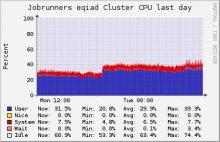

Well, for sure job runners are working harder now:

Queues on most wikis approach 0 or are in the thousands. s1 databases don't seem to have suffered from the bump, or at least there's no visible change in the graphs other than a brief (unrelated?) jump in mysql_innodb_buffer_pool_pages_dirty https://ganglia.wikimedia.org/latest/?r=day&cs=&ce=&m=cpu_report&tab=ch&vn=&hide-hf=false&hreg[]=db10%2852|51|55|57|65|66|72|73%29

`mwscript showJobs.php enwiki --group` shows it still going up.

Edit: Not long after I said that, I looked again and it had gone down. Okay then...

Change 210611 had a related patch set uploaded (by Aaron Schulz):

Temporary hack to drain excess refreshLinks jobs

Change 210610 had a related patch set uploaded (by Aaron Schulz):

Temporary hack to drain excess refreshLinks jobs

Change 210610 merged by jenkins-bot:

Temporary hack to drain excess refreshLinks jobs

Change 210611 merged by jenkins-bot:

Temporary hack to drain excess refreshLinks jobs

Now en.wiki is down to just 21 million. According to https://wikiapiary.com/wiki/Wikipedia_%28en%29 , it started dropping this morning at 8 UTC; at this rate, the queue should be drained within hours.

Now at 15 million. I'm checking https://en.wikipedia.org/w/api.php?action=query&meta=siteinfo&siprop=statistics ; let's see what it looks like tomorrow morning.
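The `"jobs":` figures pasted in the IRC log come from the siteinfo statistics query linked above. A minimal Python sketch for polling it is below; it assumes the JSON response nests the count under `query.statistics.jobs` (matching the `"jobs":` values quoted earlier), and the `User-Agent` string is a placeholder. The value is best treated as approximate.

```python
import json
import urllib.parse
import urllib.request

API = "https://en.wikipedia.org/w/api.php"


def stats_url(api: str = API) -> str:
    """Build the siteinfo statistics query, requesting JSON output."""
    params = {
        "action": "query",
        "meta": "siteinfo",
        "siprop": "statistics",
        "format": "json",
    }
    return api + "?" + urllib.parse.urlencode(params)


def jobs_from_response(body: str) -> int:
    """Extract the job-queue count from a siteinfo statistics response."""
    return int(json.loads(body)["query"]["statistics"]["jobs"])


def fetch_jobs(api: str = API) -> int:
    """Fetch the current job count (network access required)."""
    req = urllib.request.Request(
        stats_url(api),
        headers={"User-Agent": "jobqueue-check-sketch/0.1 (placeholder)"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return jobs_from_response(resp.read().decode("utf-8"))
```

Calling `fetch_jobs()` once an hour and logging the result gives a rough drain curve similar to the wikiapiary graph.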

@ArielGlenn how are you coming up with 78 jobs? I haven't seen it go below 1.15 million. I still consider it done, but it's still a little higher than normal according to the graphs.

And, for what it's worth, there are still template edits from as far back as April 19th that haven't filtered through. I don't know what order it works through the jobs in, but I would have assumed oldest to newest.

@Mlaffs You would have assumed very wrong... From my understanding, the job queue is not a linear, easy-to-follow thing. Changing a template with 50K transclusions does not mean 50K jobs will be added in any specific order. It actually creates a job that creates jobs based on a bunch of different factors and variables, and then runs through those jobs to decide which jobs need jobs and in what order to do them. Then, once it finishes one of the jobs that decides which jobs to run, it creates more jobs to see if the jobs are actually done or need to be run again, and it makes more jobs based on that, including a job that reorders all the jobs... Or something like that...
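One way to see why completion order need not follow edit order is a toy queue that hands out pending jobs in random order instead of strictly FIFO. This is a hypothetical sketch, not a description of how Wikimedia's queue was configured: the random pick order and the `old`/`new` tags are assumptions for illustration only.

```python
import random


def run_random_order(old_jobs: int, new_jobs: int, seed: int = 0) -> list[str]:
    """Drain a pool of pending jobs, picking one uniformly at random each time.

    Hypothetical model: `old_jobs` leaf jobs from an earlier template edit
    and `new_jobs` from a later edit are pending at the same time.
    Returns the tags in the order the jobs completed.
    """
    rng = random.Random(seed)
    pending = ["old"] * old_jobs + ["new"] * new_jobs
    order = []
    while pending:
        # Swap a random element to the end and pop it: O(1) random removal.
        i = rng.randrange(len(pending))
        pending[i], pending[-1] = pending[-1], pending[i]
        order.append(pending.pop())
    return order


order = run_random_order(1_000, 1_000)
first_new = order.index("new")
last_old = max(i for i, tag in enumerate(order) if tag == "old")
print(f"first 'new' job ran at step {first_new}, last 'old' job at step {last_old}")
```

Under this assumption, some pages affected by the older edit are typically refreshed only after many pages from the newer edit, so an edit from weeks ago can still be "not filtered through" on some pages.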

@Technical, I was watching the estimate provided at the en wp link I mentioned above. Granted, it's only an estimate, but still.

> And, for what it's worth, still template edits from back as far as April 19th that haven't filtered through. I don't know what order it works through the jobs

If you check the graph at https://wikiapiary.com/wiki/Wikipedia_%28en%29 for exact timings, you'll see that 27 million jobs were consumed in just 19 hours; that's probably the effect of rMWba91f0a2d339, which skipped certain "redundant" jobs. This bug can be considered resolved now that the abnormal mass of jobs has been removed.

In the next 24 hours the decrease was about 600k, so we're back to business as usual even though there is still some backlog to recover.
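The two drain figures above can be turned into rates with a little arithmetic. A minimal Python sketch follows; it assumes a constant net rate over each window, and since these are net decreases (new jobs keep arriving meanwhile), they understate the runners' actual throughput. The 1.15 million backlog used in the last line is the figure mentioned earlier in this thread, taken only for illustration.

```python
SECONDS_PER_HOUR = 3600


def jobs_per_second(jobs: float, hours: float) -> float:
    """Average net decrease in queue size over a window, in jobs per second."""
    return jobs / (hours * SECONDS_PER_HOUR)


def hours_to_drain(backlog: float, rate_per_second: float) -> float:
    """Hours to clear `backlog`, assuming the net rate stays constant."""
    return backlog / (rate_per_second * SECONDS_PER_HOUR)


burst = jobs_per_second(27_000_000, 19)  # the 19-hour drop after the hack
steady = jobs_per_second(600_000, 24)    # the following 24 hours
print(round(burst, 1), round(steady, 1))         # 394.7 6.9
print(round(hours_to_drain(1_150_000, steady)))  # 46
```

So the hack drained the queue roughly 57 times faster than the following day's pace, and at that slower pace a backlog of about 1.15 million would take around two days to clear.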

Is it possible to change the DESCRIPTION at the top of this page?

See https://en.wikipedia.org/wiki/Wikipedia:Village_pump_(technical)/Archive_136#Job_queue

Yes, click the edit task button in the top right hand corner, @Wbm1058.

Current large job queue types, as of a few minutes ago:

| Job type | Jobs queued |
|---|---|
| ParsoidCacheUpdateJobOnDependencyChange | 10688 |
| refreshLinks | 65786 |
| cirrusSearchLinksUpdate | 113788 |
| RestbaseUpdateJobOnDependencyChange | 91488 |