Most of the required parts for this are already in place, but @Peter and I found the current setup somewhat untrustworthy.

Some metrics are missing, some are transformed into relative offsets between two points, some are aggregated, and some zero values are ignored in questionable ways. As a result, it's hard to get a complete picture.

Being able to render a graph like this would be quite valuable as well.

The dashboard should also feature sub-views for individual browsers, because the overall picture is somewhat skewed: it is based on medians of individual properties, so it does not represent the experience of any individual user (per se).
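To illustrate the point about medians, here is a small sketch with made-up numbers (the three samples are purely hypothetical, not real measurements). Taking the median of each property separately can produce a combination of values that no single user actually experienced:

```python
from statistics import median

# Hypothetical page loads from three users (illustrative values in ms).
samples = [
    {"responseStart": 100, "loadEventEnd": 900},
    {"responseStart": 300, "loadEventEnd": 500},
    {"responseStart": 200, "loadEventEnd": 2000},
]

# Median computed per property, independently of the other property.
per_metric = {key: median(s[key] for s in samples) for key in samples[0]}

# Users whose actual experience equals the "median page load".
matches = [s for s in samples if s == per_metric]

print(per_metric)
print(matches)
```

In this toy data the per-property medians pair one user's responseStart with another user's loadEventEnd, so no sample matches the combined picture.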

High-level plan

From T104902#3253227 and T104902#3746563:

- Server will not filter out zero values.

- Server will not exclude values based on an upper bound. – T104902#3746563

- Server will apply remaining filters to entire events, not individual values. If one of the values is invalid, discard the event as a whole.

- Server will record values to Graphite as relative to fetchStart, instead of navigationStart.

- Server will introduce new metrics for deltas, to make stacking more accurate. (e.g. "dns", "request", "response", etc. in addition to dnsStart/End, requestStart/End, etc.)

- (Last step) Clean up client-side code to only send what's needed for navtiming2.
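The server-side steps above could look roughly like the sketch below. This is not the actual navtiming code: `process_event`, `MARKERS`, `DELTAS` and `_is_valid` are hypothetical names, the input fields use the Navigation Timing API names (e.g. `domainLookupStart`), and both the validity filter (non-negative integers) and the start/end pairs chosen for each delta are assumptions made for illustration.

```python
from typing import Optional

# Assumption: markers reported by the client, using Navigation Timing names.
MARKERS = (
    "domainLookupStart", "domainLookupEnd", "requestStart",
    "responseStart", "responseEnd", "loadEventEnd",
)

# Assumption: which start/end pair defines each delta metric.
DELTAS = {
    "dns": ("domainLookupStart", "domainLookupEnd"),
    "request": ("requestStart", "responseStart"),
    "response": ("responseStart", "responseEnd"),
}


def _is_valid(value) -> bool:
    # Assumed filter: a non-negative integer. Zero is valid and there is
    # deliberately no upper bound.
    return isinstance(value, int) and not isinstance(value, bool) and value >= 0


def process_event(event: dict) -> Optional[dict]:
    """Return metrics for one event, or None if the event is discarded."""
    fields = ("fetchStart",) + MARKERS
    # One invalid or missing value discards the event as a whole.
    if not all(_is_valid(event.get(name)) for name in fields):
        return None

    # Record markers relative to fetchStart instead of navigationStart.
    base = event["fetchStart"]
    metrics = {name: event[name] - base for name in MARKERS}

    # Add delta metrics so that stacked graphs add up more accurately.
    for name, (start, end) in DELTAS.items():
        metrics[name] = event[end] - event[start]
    return metrics
```

For example, an event with `fetchStart` of 10 and both DNS markers at 10 keeps `dns` as 0 rather than dropping it, while an event with a single negative value yields `None` for the whole event. Ordering checks between markers are left out of this sketch.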

frontend.navtiming2 issues

- Stacked values don't match the total from loadEventEnd – T178479

- "dns" is usually zero? – T178479#3699302

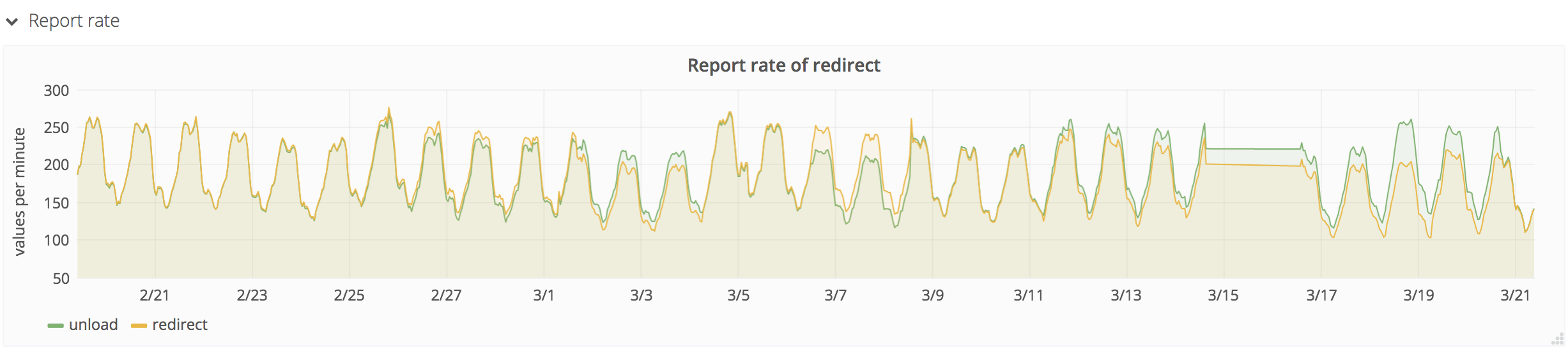

- "unload" correctness – T178479

- "redirecting" correctness – T178479

- Make sure mediaWikiLoadEnd or mediaWikiLoadComplete is working – T180598