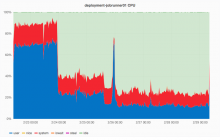

The beta cluster job queue is unmonitored. In statsd we are missing the equivalent of `MediaWiki.jobqueue.size`; it should be `BetaMediaWiki.jobqueue.size`, but that metric does not show up.
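For reference, here is a minimal sketch of what the missing metric would look like on the wire, assuming it is pushed as a gauge using the plain statsd text protocol over UDP (`<name>:<value>|g`, default port 8125). The helper names `format_gauge` and `send_gauge` and the host/port are hypothetical, and this is not how MediaWiki itself reports the job queue size.

```python
import socket

# Metric name expected in this report; the real beta prefix may differ.
METRIC = "BetaMediaWiki.jobqueue.size"


def format_gauge(name: str, value: int) -> bytes:
    """Encode a statsd gauge line: <name>:<value>|g (hypothetical helper)."""
    if value < 0:
        # In statsd a leading sign means "adjust the gauge", not "set it",
        # so a job queue size must be sent unsigned.
        raise ValueError("gauge value must be non-negative")
    return f"{name}:{value}|g".encode("ascii")


def send_gauge(name: str, value: int, host: str = "localhost", port: int = 8125) -> None:
    """Fire-and-forget UDP send, as statsd clients usually do (hypothetical helper)."""
    payload = format_gauge(name, value)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

For example, `format_gauge(METRIC, 1234)` yields `b"BetaMediaWiki.jobqueue.size:1234|g"`. If a line like that never reaches statsd, the metric will not show up in Graphite/Grafana at all, which matches what we see.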

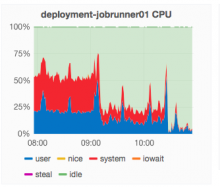

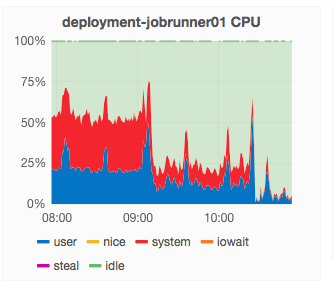

We have two job runners:

- deployment-jobrunner01
- deployment-tmh01

The latter is used solely for video transcoding, afaik.

Looking at the labs monitoring dashboard https://grafana.wikimedia.org/dashboard/db/labs-project-board, the instance can probably handle more load:

(after T130184 was fixed)

Alternatively, we can spawn a second job runner instance.