Having host syslogs indexed and searchable in ELK would be useful for general troubleshooting, reporting, etc. across the fleet.

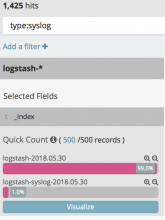

We already have a Logstash syslog listener and filters in place, and rsyslog configurations applied to certain host classes send specific log types to Logstash via syslog. Network device syslogs are indexed as well.

It would be great to broaden this config, ideally to include the host syslogs that are being aggregated by syslog.(codfw|eqiad).wmnet.

A few things to figure out (for starters):

- Capacity - How much headroom is there for new log sources in the current ELK infrastructure?

- Retention - How long before these indices should be deleted?

- Transport - It would be ideal to ship over TLS. Basic queueing/redelivery would also be nice to have, to help prevent gaps in the indexed logs.
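
As a starting point for the transport item, an rsyslog forwarding action along the following lines might cover both TLS and basic queueing. This is only a sketch under assumptions: the target hostname, port, CA file path, queue name, and queue size are placeholders rather than our actual values, and it assumes the rsyslog GnuTLS driver is installed and that the Logstash side exposes a TLS-enabled TCP listener.

```
# Sketch only: every hostname, path, and size below is a placeholder.
global(
  DefaultNetstreamDriver="gtls"
  DefaultNetstreamDriverCAFile="/etc/ssl/certs/example-ca.pem"  # hypothetical CA path
)

action(
  type="omfwd"
  target="logstash.example.org"   # hypothetical Logstash endpoint
  port="6514"                     # assumed TLS syslog port
  protocol="tcp"

  # TLS: require encryption and verify the peer certificate name
  StreamDriver="gtls"
  StreamDriverMode="1"
  StreamDriverAuthMode="x509/name"
  StreamDriverPermittedPeers="logstash.example.org"

  # Queueing/redelivery: in-memory queue that spills to disk when needed
  queue.type="LinkedList"
  queue.filename="fwd_logstash"   # setting a filename makes the queue disk-assisted
  queue.maxDiskSpace="1g"         # placeholder cap on spooled data
  queue.saveOnShutdown="on"       # persist queued messages across restarts
  action.resumeRetryCount="-1"    # keep retrying while the target is unreachable
)
```

With a disk-assisted queue and unlimited retries, messages should be buffered locally while Logstash is unreachable and delivered once it returns, up to the disk space cap. Whether that cap is large enough ties back to the capacity question above.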