Problems:

- cloudnet100[34] are both experiencing the same transient NIC issues. WMCS already did their homework on this, and found others having the same issue with this firmware version and kernel version.

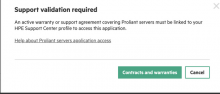

- they attempted to flash firmware updates, but it actually only updated kernel packages, as the actual firmware update has to come from HP software/flashing. Basically they've done all they could do before escalating this to DC Ops.

- HP systems can only remotely flash bios and ilom, with any raid/nic/psu/etc firmware updates requiring the service pack iso image be loaded via usb.

Solution:

- Either Chris or John will need to create the HP SP bootable image on USB, then flash both of these systems.

- Please note that one system is active, and the other standby. Please ping Arturo (nic arturo) in IRC when you are ready to do this work and he can let you know which is the standby server.

- The standby server will have its firmware updated first and then Arturo can fail over to it (once we think its stable) and the second system can be updated.

initial filing of issue

On Jan 3rd 2021 we got a page that there was 100% packet loss, it was a momentary hiccup.

Relevant dmesg: https://phabricator.wikimedia.org/P13635

journal for the +- 10 min of the issue: https://phabricator.wikimedia.org/P13636

The network is back up and running, so this is to investigate later and/or keep track in case it happens again.