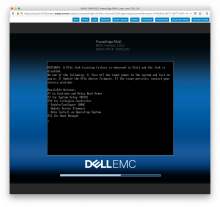

I detected this when rebooting the server:

Enumerating Boot options...
Enumerating Boot options... Done
UEFI0067: A PCIe link training failure is observed in Slot1 and the link is disabled.
Do one of the following:
1) Turn off the input power to the system and turn on again.
2) Update the PCIe device firmware.
If the issue persists, contact your service provider.
Available Actions:
F1 to Continue and Retry Boot Order
F2 for System Setup (BIOS)
F10 for Lifecycle Controller
- Enable/Configure iDRAC
- Update or Backup/Restore Server Firmware
- Help Install an Operating System
F11 for Boot Manager

The server won't boot.

DC Ops troubleshooting

I've not seen this, but unless it happens twice it doesn't count! So, steps to fix:

cloudvirt1038 is located in D5:U14

- onsite unplugs all power, fully depowering the system. Then plug back in after 15-30 seconds and attempt to boot the system. - did not fix error

- update firmware: iDRAC, BIOS, network card (only item on the PCIe bus) - did not fix error

- open task with Dell support, have them dispatch a tech (see note below)

tech note: This error will require some troubleshooting of the network card, the PCIe riser, and the mainboard. This can be done by our own on-sites, or we can leverage our warranty coverage to have Dell send out a technician. Doing this via self dispatch will be cumbersome, as it's unclear where to start. The options are: have an on-site self dispatch parts and work with Dell support via email to determine which parts are needed and how to fix the problem, or have an on-site call Dell support and schedule a Dell technician to come out and work on this in-warranty host to determine which part is faulty.

When the system is booting successfully again, please change the 'failed' state in netbox back to 'active'.
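If the state change is scripted rather than done in the web UI, it could look roughly like the sketch below. This is only an illustration, assuming a NetBox-style REST API where a device is updated with a `PATCH` to `/api/dcim/devices/<id>/` and authenticated with a `Token` header. The base URL, device ID, and token are hypothetical placeholders, and `build_status_request` is a made-up helper name, not part of any existing tooling.

```python
import json
import urllib.request


def build_status_request(base_url, device_id, token, status="active"):
    """Build (but do not send) a PATCH request that sets a device's status.

    Assumes a NetBox-style endpoint layout and token auth scheme; adjust
    both if the local deployment differs.
    """
    url = f"{base_url.rstrip('/')}/api/dcim/devices/{device_id}/"
    body = json.dumps({"status": status}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


# Hypothetical usage; nothing is sent until urlopen() is called:
# req = build_status_request("https://netbox.example.org", 1234, "TOKEN")
# urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to check the URL and payload before touching the live inventory.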