In T338805, we containerized the content translation recommendation-api. In this task, we shall host this container as the second step in migrating it to Lift Wing.


Event Timeline

Change 932810 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[research/recommendation-api@master] Set up production and test images for the recommendation-api migration

Change 935880 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[integration/config@master] recommendation-api: add pipelines for recommendation-api-ng

Change 935881 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[integration/config@master] recommendation-api: add pipelines for recommendation-api-ng

Change 935881 abandoned by Kevin Bazira:

[integration/config@master] recommendation-api: add pipelines for recommendation-api-ng

Reason:

Fixed this in: https://gerrit.wikimedia.org/r/c/integration/config/+/935880/

Change 935880 merged by jenkins-bot:

[integration/config@master] recommendation-api: add pipelines for recommendation-api-ng

Mentioned in SAL (#wikimedia-releng) [2023-07-10T08:02:26Z] <hashar> Reloaded Zuul for https://gerrit.wikimedia.org/r/935880 "recommendation-api: add pipelines for recommendation-api-ng" T339890

The NodeJS recommendation-api already has entries in the CI pipeline (in jjb/project-pipelines.yaml and zuul/layout.yaml) that publish an image to the Wikimedia docker registry. To avoid naming conflicts, the CI pipelines for the Python recommendation-api that we are migrating have been suffixed with "-ng", as suggested in T293648#8981227.

Change 932810 merged by jenkins-bot:

[research/recommendation-api@master] Set up production and test images for the recommendation-api migration

After encountering issues with CI fetching files from the Wikimedia public datasets archive (T341582) and a CI post-merge build failure due to an internal server error (T342084), the recommendation-api image has finally been published to the Docker registry: https://docker-registry.wikimedia.org/wikimedia/research-recommendation-api/tags/. The next step is to deploy it onto LiftWing/k8s.

Change 948689 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[operations/deployment-charts@master] [WIP] Add Helm chart for the recommendation-api-ng

Change 948689 abandoned by Kevin Bazira:

[operations/deployment-charts@master] [WIP] Add Helm chart for the recommendation-api-ng

Reason:

The team decided to move on with a general chart for python web apps in https://gerrit.wikimedia.org/r/c/operations/deployment-charts/+/955584

Change 955018 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[operations/deployment-charts@master] ml-services: deployment settings for the recommendation-api-ng

Change 955018 merged by jenkins-bot:

[operations/deployment-charts@master] ml-services: deployment settings for the recommendation-api-ng

Deployment settings for the recommendation-api-ng have been merged but when we try to deploy on staging we get:

kevinbazira@deploy1002:/srv/deployment-charts/helmfile.d/ml-services/recommendation-api-ng$ helmfile -e ml-staging-codfw sync

Affected releases are:

main (wmf-stable/python-webapp) UPDATED

skipping missing values file matching "values-main.yaml"

helmfile.yaml: basePath=.

Upgrading release=main, chart=wmf-stable/python-webapp

Release "main" does not exist. Installing it now.

FAILED RELEASES:

NAME

main

in ./helmfile.yaml: failed processing release main: command "/usr/bin/helm3" exited with non-zero status:

PATH:

/usr/bin/helm3

ARGS:

0: helm3 (5 bytes)

1: upgrade (7 bytes)

2: --install (9 bytes)

3: --reset-values (14 bytes)

4: main (4 bytes)

5: wmf-stable/python-webapp (24 bytes)

6: --timeout (9 bytes)

7: 600s (4 bytes)

8: --atomic (8 bytes)

9: --namespace (11 bytes)

10: recommendation-api-ng (21 bytes)

11: --values (8 bytes)

12: /tmp/values022274232 (20 bytes)

13: --values (8 bytes)

14: /tmp/values620292791 (20 bytes)

15: --values (8 bytes)

16: /tmp/values748254890 (20 bytes)

17: --values (8 bytes)

18: /tmp/values382482945 (20 bytes)

19: --history-max (13 bytes)

20: 10 (2 bytes)

21: --kubeconfig=/etc/kubernetes/recommendation-api-ng-deploy-ml-staging-codfw.config (81 bytes)

ERROR:

exit status 1

EXIT STATUS

1

STDERR:

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /etc/kubernetes/recommendation-api-ng-deploy-ml-staging-codfw.config

Error: release main failed, and has been uninstalled due to atomic being set: timed out waiting for the condition

COMBINED OUTPUT:

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /etc/kubernetes/recommendation-api-ng-deploy-ml-staging-codfw.config

Release "main" does not exist. Installing it now.

Error: release main failed, and has been uninstalled due to atomic being set: timed out waiting for the condition

kevinbazira@deploy1002:/srv/deployment-charts/helmfile.d/ml-services/recommendation-api-ng$

2023-09-08 13:37:33,415 recommendation.api.types.related_articles.candidate_finder fetch_embedding():157 ERROR -- Failed to get object from Swift

Traceback (most recent call last):

File "./recommendation/api/types/related_articles/candidate_finder.py", line 155, in fetch_embedding

swift_object = conn.get_object(swift_container, swift_object_path)

File "/opt/lib/python/site-packages/swiftclient/client.py", line 1831, in get_object

rheaders, body = self._retry(None, get_object, container, obj,

File "/opt/lib/python/site-packages/swiftclient/client.py", line 1710, in _retry

self.url, self.token = self.get_auth()

File "/opt/lib/python/site-packages/swiftclient/client.py", line 1654, in get_auth

self.url, self.token = get_auth(self.authurl, self.user, self.key,

File "/opt/lib/python/site-packages/swiftclient/client.py", line 674, in get_auth

storage_url, token = get_auth_1_0(auth_url,

File "/opt/lib/python/site-packages/swiftclient/client.py", line 524, in get_auth_1_0

raise ClientException.from_response(resp, 'Auth GET failed', body)

swiftclient.exceptions.ClientException: Auth GET failed: http://localhost:6022/auth/v1.0 404 Not Found

Traceback (most recent call last):

File "./recommendation/api/types/related_articles/candidate_finder.py", line 155, in fetch_embedding

swift_object = conn.get_object(swift_container, swift_object_path)

File "/opt/lib/python/site-packages/swiftclient/client.py", line 1831, in get_object

rheaders, body = self._retry(None, get_object, container, obj,

File "/opt/lib/python/site-packages/swiftclient/client.py", line 1710, in _retry

self.url, self.token = self.get_auth()

File "/opt/lib/python/site-packages/swiftclient/client.py", line 1654, in get_auth

self.url, self.token = get_auth(self.authurl, self.user, self.key,

File "/opt/lib/python/site-packages/swiftclient/client.py", line 674, in get_auth

storage_url, token = get_auth_1_0(auth_url,

File "/opt/lib/python/site-packages/swiftclient/client.py", line 524, in get_auth_1_0

raise ClientException.from_response(resp, 'Auth GET failed', body)

swiftclient.exceptions.ClientException: Auth GET failed: http://localhost:6022/auth/v1.0 404 Not Found

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "recommendation/data/recommendation.wsgi", line 26, in <module>

candidate_finder.initialize_embedding()

File "./recommendation/api/types/related_articles/candidate_finder.py", line 83, in initialize_embedding

_embedding.initialize(embedding_client, embedding_path, embedding_package, embedding_name, optimize, optimized_embedding_path)

File "./recommendation/api/types/related_articles/candidate_finder.py", line 111, in initialize

self.load_raw_embedding(client, path, package, name)

File "./recommendation/api/types/related_articles/candidate_finder.py", line 168, in load_raw_embedding

f = self.fetch_embedding(client, path, package, name)

File "./recommendation/api/types/related_articles/candidate_finder.py", line 158, in fetch_embedding

raise RuntimeError(f'Failed to get object from Swift: {e}')

RuntimeError: Failed to get object from Swift: Auth GET failed: http://localhost:6022/auth/v1.0 404 Not Found

It definitely seems to be an issue with the config of the envoy proxy:

elukey@ml-staging2002:~$ curl https://thanos-swift.discovery.wmnet:443/info

{"swift": {"version": "2.26.0", "strict_cors_mode": true, "policies":[...CUT...]

elukey@ml-staging2002:~$ sudo nsenter -t 1682700 -n curl localhost:6022/info -H "thanos-swift.discovery.wmnet" -i

HTTP/1.1 404 Not Found

date: Mon, 11 Sep 2023 07:41:44 GMT

server: envoy

content-length: 0

x-envoy-upstream-service-time: 2

On the Thanos FE nodes:

elukey@thanos-fe1001:~$ curl https://localhost:443/info -k -H "Host: thanos-swift.discovery.wmnet"

{"swift": {"version": "2.26.0", "strict_cors_mode": true, ....

elukey@thanos-fe1001:~$ curl https://localhost:443/info -k -i

HTTP/1.1 404 Not Found

date: Mon, 11 Sep 2023 08:48:41 GMT

server: envoy

content-length: 0

The current flow of data is:

swift-client --> local-envoy-proxy (lift wing) -------TLS-------> envoy (thanos) ---> etc..

My theory is that the local-envoy-proxy should set the Host header, but it doesn't and it gets a 404 from the envoy running on Thanos' FE nodes.
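The curl results above fit a proxy that routes purely on the Host header. The following is a toy model of that behaviour, not Envoy's actual implementation; the `VIRTUAL_HOSTS` table, the `route` function and the `swift-backend` name are hypothetical and exist only to illustrate the theory.

```python
# Toy model of Host-based virtual-host routing (hypothetical, not Envoy code).
VIRTUAL_HOSTS = {"thanos-swift.discovery.wmnet": "swift-backend"}


def route(headers):
    """Return (status, backend), matching only on the Host header."""
    # Strip an optional port, e.g. "localhost:6022" -> "localhost".
    host = headers.get("Host", "localhost").split(":")[0]
    backend = VIRTUAL_HOSTS.get(host)
    if backend is None:
        # No virtual host matches: the proxy answers 404 itself.
        return 404, None
    return 200, backend


# Request carrying the expected Host header is routed to the backend.
print(route({"Host": "thanos-swift.discovery.wmnet"}))
# Request that keeps the local proxy address as Host gets a 404.
print(route({"Host": "localhost:6022"}))
```

Under this model, a client that talks to localhost:6022 without an overridden Host header would always see the 404 reported in the traceback.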

Change 956373 had a related patch set uploaded (by Elukey; author: Elukey):

[operations/puppet@production] profile::service_proxy::envoy: set use_ingress for thanos-swift

Change 956373 merged by Elukey:

[operations/puppet@production] profile::service_proxy::envoy: set use_ingress for thanos-swift

Change 956377 had a related patch set uploaded (by Elukey; author: Elukey):

[research/recommendation-api@master] blubber: add wmf-certificates to the production image

Change 956377 merged by jenkins-bot:

[research/recommendation-api@master] blubber: add wmf-certificates to the production image

Change 956379 had a related patch set uploaded (by Elukey; author: Elukey):

[operations/puppet@production] profile::service_proxy::envoy: rename uses_ingress to sets_sni

Change 956380 had a related patch set uploaded (by Elukey; author: Elukey):

[operations/deployment-charts@master] ml-services: update Docker image for recommendation-api-ng

Change 956380 merged by Elukey:

[operations/deployment-charts@master] ml-services: update Docker image for recommendation-api-ng

Change 956385 had a related patch set uploaded (by Elukey; author: Elukey):

[operations/deployment-charts@master] ml-services: add REQUESTS_CA_BUNDLE env var to rec-api-ng's config

Change 956385 merged by Elukey:

[operations/deployment-charts@master] ml-services: add REQUESTS_CA_BUNDLE env var to rec-api-ng's config

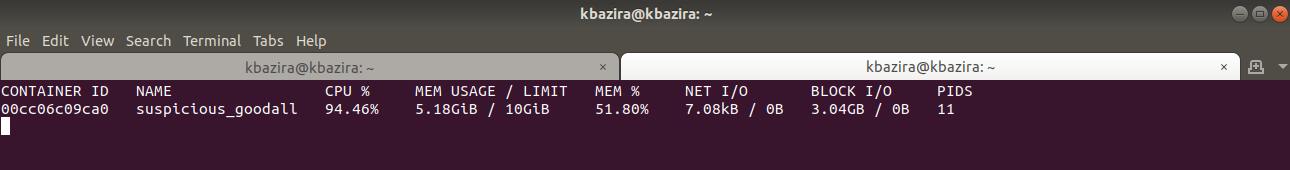

@elukey, regarding the rec-api memory usage, please see the findings below got from docker stats after monitoring the rec-api container running locally:

- When processing the embedding the memory usage spiked upwards of ~7GB.

- After the embedding had been loaded, the memory dropped and stayed within ~5GB even when I queried the api using: http://127.0.0.1/api/?s=en&t=fr&n=3&article=Apple

NB: I run the rec-api container with 10GB memory using:

$ docker run -it --memory=10g --entrypoint=/bin/bash recommendation-apiHere is a screenshot, captured from the steady-state:

@elukey, on IRC you mentioned:

one quick thing - I am reading https://github.com/wikimedia/research-recommendation-api/blob/master/recommendation/api/types/related_articles/candidate_finder.py#L167

and it is probably something that we can improve

for example, we could do the prep work offline and force np to load from file

I never done it but I am pretty sure it should be doable

could you please check if this is doable? And also update the task with all the findings etc.

I am not sure what kind of "prep work" you are referring to. Could you please clarify? Thanks!

Change 956017 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[operations/deployment-charts@master] ml-services: increase the recommendation-api-ng memory usage

My point was that, from a quick glance at the code, we seem to do a lot of data processing when bootstrapping the Python app (via uwsgi) that could perhaps be done offline. For example, what if we could:

- Take the embeddings binary (currently stored in Swift) and do the processing of the data offline, only once.

- Re-upload the processed binary to Swift.

- Change the Python code to just load data from the new file (without loading the raw data into memory to preprocess it, etc.).

I don't think that we should deploy an app requiring 10GB of memory for each pod: it is a 10x increase compared to most of our deployments, and it could exhaust our k8s capacity pretty quickly if more than one pod is needed in production.
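The three steps proposed above could be sketched roughly as follows. This is a minimal illustration, not the service's actual code: the raw format (one item ID followed by space-separated floats per line) and the helper names `preprocess` and `load_preprocessed` are assumptions, and the Swift upload/download step is left out.

```python
import os
import tempfile

import numpy as np


def preprocess(raw_lines, out_dir):
    """One-off offline step: parse text rows and save them as .npy files."""
    ids, rows = [], []
    for line in raw_lines:
        # Assumed format: "<item id> <float> <float> ..."
        item_id, *values = line.split()
        ids.append(item_id)
        rows.append([float(v) for v in values])
    np.save(os.path.join(out_dir, "wikidata_ids.npy"), np.array(ids))
    np.save(os.path.join(out_dir, "decoded_lines.npy"), np.array(rows))


def load_preprocessed(out_dir):
    """Startup step: load ready-made arrays, with no text parsing."""
    ids = np.load(os.path.join(out_dir, "wikidata_ids.npy"))
    embedding = np.load(os.path.join(out_dir, "decoded_lines.npy"))
    return ids, embedding


with tempfile.TemporaryDirectory() as tmp:
    # Two made-up rows stand in for the real embedding file.
    preprocess(["Q1 0.1 0.2 0.3", "Q2 0.4 0.5 0.6"], tmp)
    ids, embedding = load_preprocessed(tmp)

print(ids.tolist(), embedding.shape)
```

The point of the split is that the app only ever runs `load_preprocessed`, so the intermediate Python lists built during parsing never exist in the serving process.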

Change 956441 had a related patch set uploaded (by Elukey; author: Elukey):

[operations/deployment-charts@master] modules: add configuration 1.5.0 to mesh

The main issue I see (and the one elukey highlights) is that as a service it is not scalable. I suggest that we try to do any optimization in memory usage that we can, while maintaining the current architecture of the app (load embeddings in memory from file, etc.).

If we wanted to further optimize this and use a database to store the embeddings, the bottleneck would be the function def most_similar(self, word), as it requires an array in memory to perform a dot product.

So I'd try the below things in order:

- Reduce the memory footprint by downcasting to 32-bit or 16-bit floats.

- Load a memmap'ed version of the array and see how much time a dot product would take given our array.

- The last option, which requires too much work, would be to use a vector DB that has built-in similarity search capability (e.g. Elasticsearch). I'm not really suggesting this option at the moment, just jotting it down.
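The first two suggestions can be tried together on a small scale. The sketch below uses a random stand-in matrix, not the real embedding, and `most_similar_index` is a hypothetical helper rather than the app's `most_similar` method; it downcasts to float32, saves the array, reopens it memory-mapped and runs a dot product against it.

```python
import os
import tempfile

import numpy as np

rng = np.random.default_rng(0)
# Random stand-in for the embedding matrix (float64 by default).
embedding = rng.random((1000, 50))
# Normalise rows so that the dot product equals cosine similarity.
embedding /= np.linalg.norm(embedding, axis=1, keepdims=True)
# Suggestion 1: downcast to 32-bit floats.
embedding32 = embedding.astype(np.float32)


def most_similar_index(matrix, query):
    """Index of the row with the highest dot product against query."""
    return int(np.argmax(matrix @ query))


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "embedding_float32.npy")
    np.save(path, embedding32)
    # Suggestion 2: map the file instead of reading it all into memory.
    mapped = np.load(path, mmap_mode="r")
    query = np.array(mapped[42])  # copy one row out of the mapping
    best = most_similar_index(mapped, query)
    del mapped  # drop the mapping before the directory is removed

print(best)
```

Wrapping the call in a timer would give a first estimate of the dot-product cost on a memory-mapped array; the numbers for the real embedding would have to be measured separately.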

@calbon suggested that we use dtype=np.float32 to reduce memory usage. I have tested it and below are the results:

| dtype | on-load | steady-state |
| --- | --- | --- |
| float | ~7GB | ~5GB |
| np.float32 | ~3.8GB | ~2.8GB |

As suggested in T339890#9156420, I ran the load_raw_embedding method and saved both the wikidata_ids and decoded_lines numpy arrays:

...

np.save('wikidata_ids.npy', self.wikidata_ids)

np.save('decoded_lines.npy', self.embedding)

...

root@a0efd763f796:/home/recommendation-api# ls -lh

-rw-r--r-- 1 root root 2.4G Sep 12 06:53 decoded_lines.npy

-rw-r--r-- 1 root root 107M Sep 12 06:53 wikidata_ids.npy

I then loaded the two preprocessed files in a different container and got the following memory usage results:

| preprocessing | on-load | steady-state |
| --- | --- | --- |
| before | ~7GB | ~5GB |
| after | ~5.1GB | ~2.8GB |

Change 956846 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[research/recommendation-api@master] load_raw_embedding: Downcast float to np.float32

A 4th memory usage test was run using a combination of the 2nd (float downcasted to np.float32) and 3rd (preprocessed numpy arrays). Below are the steps taken and the results:

1. Downcast the decoded lines to np.float32, then saved the numpy arrays:

...

decoded_lines.append(np.fromstring(values, dtype=np.float32, sep=' ', count=columns))

...

np.save('wikidata_ids.npy', self.wikidata_ids)

np.save('decoded_lines_float32.npy', self.embedding)

...

root@b9b4eff100a5:/home/recommendation-api# ls -lh

-rw-r--r-- 1 root root 1.2G Sep 13 05:04 decoded_lines_float32.npy

-rw-r--r-- 1 root root 107M Sep 13 05:04 wikidata_ids.npy

2. Loaded the preprocessed files in a different container and got the following memory usage results:

| preprocessing | dtype | on-load | steady-state |
| --- | --- | --- | --- |
| before | float | ~7GB | ~5GB |
| after | np.float32 | ~1.5GB | ~1.36GB |
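The drop from a 2.4G to a 1.2G file for the decoded lines matches what one would expect from halving the bytes per value. A small check with a made-up array shape (the real dimensions are not stated in this task) shows the size ratio and the precision that is given up:

```python
import numpy as np

# Hypothetical shape; the real embedding dimensions are not given here.
rows, columns = 10_000, 100

as_float64 = np.zeros((rows, columns), dtype=np.float64)
as_float32 = as_float64.astype(np.float32)

# 8 bytes per value versus 4 bytes per value.
print(as_float64.nbytes, as_float32.nbytes)
print(as_float64.nbytes / as_float32.nbytes)

# Machine epsilon: the relative precision available at each width.
print(np.finfo(np.float64).eps, np.finfo(np.float32).eps)
```

Whether the lower precision affects recommendation quality is not something this arithmetic can answer; it only accounts for the storage side.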

Nice work @kevinbazira! This is much better and we should proceed with this (I mean the combination of 32bit floats and preprocessed numpy arrays). I think we should leave further downcasting to 16 bit floats for the moment as that would require further testing of the quality of the embeddings.

Hey folks -- not to take away from the good work by @kevinbazira but I wanted to flag that I don't think it makes sense to port the embeddings component over to LiftWing as part of this work. The existing Content Translation API doesn't use the embeddings (nor does any other service) and they're quite old with no plan as far as I know to update them and maintain them. If they are incorporated into the endpoint, I presume that the quality of predictions will dramatically decrease as they'll be based on Wikidata items from 5+ years ago. The important logic is the code that hits the Search APIs and filters appropriately based on what articles exist in the target language (see T308165#7983559).

Some further context:

- My digging into which services were being used by Content Translation, which also notes that the embeddings aren't on the recommendation API instance being used by Content Translation: T308165#7983559

- Further stats showing the embedding-backed endpoint isn't being used by any services: T340854#8998466

Ccing @santhosh as well for visibility / input as needed.

Thank you for sharing this information, @Isaac.

When we were containerizing the Flask app that runs this recommendation-api, we ran into errors where the application expected to load embeddings as shown in T338805#8926847 and T338805#8931200.

Is there a way to run this Flask app without throwing those errors (without rewriting the whole app:)?

If so, that would be great, as it would enable us to migrate the recommendation-api from wmflabs to LiftWing without the embeddings.

> When we were containerizing the Flask app that runs this recommendation-api, we ran into errors where the application expected to load embeddings as shown in T338805#8926847 and T338805#8931200.

@kevinbazira thanks for this additional context -- I wasn't aware of the errors / discussion around getting it running before attempting to make changes and that helps with understanding what's useful.

> Is there a way to run this Flask app without throwing those errors (without rewriting the whole app:)?

Yep! In the recommendation.ini file, there's an enabled services section and you can switch related_articles to False (and gapfinder too if you'd like). Doing that locally for me got it running (though I was on a Python3.10 environment so I also had to replace a bunch of flask_restplus imports with flask_restx FYI). And to my point about the embeddings not being used, turning off that endpoint won't cause any issues for end-users and in fact I verified that it's set to False on the Cloud VPS instance running it (tool.recommendation-api.eqiad1.wikimedia.cloud:/etc/recommendation/recommendation.ini).
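As a rough illustration of the switch described above, the snippet below flips the flag with Python's configparser on an in-memory sample. The section name `enabled_services` and the other keys are assumptions made for the example; the real recommendation.ini may be laid out differently and should be checked before editing.

```python
import configparser

# Hypothetical excerpt; section and key names other than
# related_articles and gapfinder are assumptions.
SAMPLE_INI = """
[enabled_services]
translation = True
related_articles = True
gapfinder = True
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE_INI)

# Switch off the embedding-backed endpoint.
config["enabled_services"]["related_articles"] = "False"

# Read the flags back as booleans.
enabled = {
    name: config.getboolean("enabled_services", name)
    for name in config["enabled_services"]
}
print(enabled)
```

With the flag off, the app should no longer try to fetch the embedding at startup, which is consistent with the behaviour reported on the Cloud VPS instance.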

@Isaac, thank you for the pointer. I have tested the recommendation-api with related_articles switched off and it ran without the errors.

Below are the steps I took and the results achieved:

# run container

$ docker run --memory=10g -p 80:5000 -it --entrypoint=/bin/bash recommendation-api

...

# switch related_articles to False

$ vi recommendation/data/recommendation.ini

...

# start application

$ uwsgi --http :5000 --wsgi-file recommendation/data/recommendation.wsgi

...

# query api in new terminal

$ curl 'http://127.0.0.1/api/?s=en&t=fr&n=3&article=Apple'

[{"title": "Ceratotheca_sesamoides", "wikidata_id": "Q16751030", "rank": 499.0, "pageviews": 133}, {"title": "Annona_montana", "wikidata_id": "Q311448", "rank": 498.0, "pageviews": 601}, {"title": "Treculia_africana", "wikidata_id": "Q2017779", "rank": 496.0, "pageviews": 308}]

Change 958971 had a related patch set uploaded (by Kevin Bazira; author: Kevin Bazira):

[research/recommendation-api@master] switch off related_articles endpoint for the LiftWing instance

Change 958971 merged by jenkins-bot:

[research/recommendation-api@master] switch off related_articles endpoint for the LiftWing instance

> I have tested the recommendation-api with related_articles switched off and it ran without the errors.

Great to hear! Thanks!

Change 956017 merged by jenkins-bot:

[operations/deployment-charts@master] ml-services: update recommendation-api-ng image