We are going to migrate the requests that Flink sends to the MediaWiki API so that they go to MediaWiki on Kubernetes instead of the traditional API cluster.

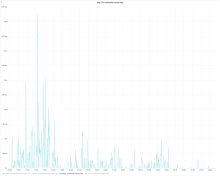

Given that Flink sends on average about 20 requests per second (rps) per datacenter, the resources we need to add should be in line with those of mw-api-ext, or smaller.
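The sizing argument can be made concrete with a back-of-the-envelope sketch. Only the 20 rps per datacenter figure comes from this note. The per-replica throughput and the 2x headroom factor below are hypothetical placeholders, not measured mw-api-ext values, and should be replaced with numbers observed on mw-api-ext before drawing conclusions.

```python
import math

# From the text: Flink sends about 20 rps per datacenter on average.
FLINK_RPS_PER_DC = 20.0


def replicas_needed(rps: float, rps_per_replica: float, headroom: float = 2.0) -> int:
    """Estimate how many replicas are needed to serve `rps`.

    `rps_per_replica` and `headroom` are hypothetical inputs: they are NOT
    taken from mw-api-ext and must be filled in with observed values.
    """
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    if headroom < 1:
        raise ValueError("headroom must be >= 1")
    # Scale the load by the headroom factor, divide by per-replica capacity,
    # round up, and never go below a single replica.
    return max(1, math.ceil(rps * headroom / rps_per_replica))


if __name__ == "__main__":
    # Hypothetical: assume one replica sustains 5 rps, with 2x headroom.
    print(replicas_needed(FLINK_RPS_PER_DC, rps_per_replica=5.0))  # 20 * 2 / 5 = 8
```

Expressing the estimate as a function keeps the assumed inputs explicit, so the same calculation can be rerun once real per-replica figures from mw-api-ext are available.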