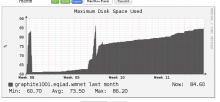

New report as of 2017/06/21. eventstreams is already tracked in T160644: Eventstreams graphite disk usage.

```
root@graphite1001:/var/lib/carbon/whisper# du -hcs /var/lib/carbon/whisper/* | grep G | sort -rn
433G	/var/lib/carbon/whisper/eventstreams
199G	/var/lib/carbon/whisper/servers
199G	/var/lib/carbon/whisper/instances
137G	/var/lib/carbon/whisper/ores
71G	/var/lib/carbon/whisper/MediaWiki
50G	/var/lib/carbon/whisper/zuul
46G	/var/lib/carbon/whisper/varnishkafka
35G	/var/lib/carbon/whisper/frontend
```
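The `grep G | sort -rn` pipeline above only ranks correctly because every line it keeps is expressed in gigabytes. `sort -rn` compares only the leading number, so a `1.2T` line would sort below `433G`. GNU `sort -rh` (human-numeric sort) handles mixed suffixes. Below is a minimal Python sketch of the same ranking that also reports each directory's share of the listed total. It assumes GNU `du -h` output, where suffixes are powers of 1024. The helper names (`parse_size`, `rank`, `SAMPLE`) are hypothetical and exist only for illustration.

```python
# Hypothetical helper: rank carbon whisper directories from `du -h`-style lines.
# Assumes GNU du -h suffixes (K/M/G/T), which are powers of 1024.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}

# The lines reported above on graphite1001 (2017/06/21).
SAMPLE = [
    "433G /var/lib/carbon/whisper/eventstreams",
    "199G /var/lib/carbon/whisper/servers",
    "199G /var/lib/carbon/whisper/instances",
    "137G /var/lib/carbon/whisper/ores",
    "71G /var/lib/carbon/whisper/MediaWiki",
    "50G /var/lib/carbon/whisper/zuul",
    "46G /var/lib/carbon/whisper/varnishkafka",
    "35G /var/lib/carbon/whisper/frontend",
]


def parse_size(text):
    """Convert a du -h size such as '433G' or '1.5K' to bytes."""
    suffix = text[-1].upper()
    if suffix in UNITS:
        return float(text[:-1]) * UNITS[suffix]
    return float(text)  # no suffix: plain byte count


def rank(du_lines):
    """Return (path, bytes) pairs sorted largest first, whatever the unit."""
    entries = []
    for line in du_lines:
        size, path = line.split(None, 1)  # du separates size and path by whitespace
        entries.append((path.strip(), parse_size(size)))
    return sorted(entries, key=lambda entry: entry[1], reverse=True)


if __name__ == "__main__":
    ranked = rank(SAMPLE)
    total = sum(size for _, size in ranked)
    for path, size in ranked:
        # Size in GiB and percentage of the listed directories' total.
        print(f"{size / UNITS['G']:6.0f}G {100 * size / total:5.1f}% {path}")
```

For the listed directories the total is 1170G, so eventstreams alone accounts for roughly 37% of it.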