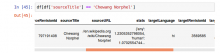

Building on this notebook (https://paws-public.wmflabs.org/paws-public/User:Isaac_(WMF)/Content%20Translation%20Example.ipynb), start to explore what types of content are translated and what happens to these articles once they are created.

A good starting point is to look at what happens to a translated article after it is created. The page history for every Wikipedia article is publicly available. Each article also has a corresponding talk page, where editors may discuss the content of the page and related matters. If you are unfamiliar with how to access this content, see these overviews of page history (https://en.wikipedia.org/wiki/Help:Page_history) and talk pages (https://en.wikipedia.org/wiki/Help:Talk_pages).

For example, for the English version of Gradient Boosting, these can be found at:

- History: https://en.wikipedia.org/w/index.php?title=Gradient_boosting&action=history

- Talk: https://en.wikipedia.org/wiki/Talk:Gradient_boosting
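The same history can also be retrieved programmatically, which may be easier from within a notebook. The following is a minimal sketch, assuming the MediaWiki Action API (`action=query` with `prop=revisions`); the function names, the default limit, and the User-Agent string are illustrative placeholders, not part of the original notebook.

```python
import json
import urllib.parse
import urllib.request


def build_history_params(title, limit=50):
    """Build Action API query parameters for a page's revision history."""
    return {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "ids|timestamp|user|comment|size",
        "rvlimit": str(limit),
        "rvdir": "newer",  # list the oldest revisions first
        "format": "json",
        "formatversion": "2",  # pages are returned as a list
    }


def parse_revisions(response):
    """Extract the list of revisions from a formatversion=2 response."""
    pages = response.get("query", {}).get("pages", [])
    if not pages:
        return []
    # A missing page has no "revisions" key, so fall back to an empty list.
    return pages[0].get("revisions", [])


def fetch_history(title, lang="en", limit=50):
    """Fetch up to `limit` revisions of `title` from the given language wiki."""
    query = urllib.parse.urlencode(build_history_params(title, limit))
    url = f"https://{lang}.wikipedia.org/w/api.php?{query}"
    # Placeholder User-Agent: replace with your own tool name and contact.
    request = urllib.request.Request(
        url, headers={"User-Agent": "translation-history-sketch/0.1"}
    )
    with urllib.request.urlopen(request) as reply:
        return parse_revisions(json.load(reply))
```

Calling `fetch_history("Gradient_boosting")` would request the earliest revisions of the English article, and passing `"Talk:Gradient_boosting"` as the title would do the same for its talk page. Longer histories need the API's continuation mechanism, which this sketch leaves out.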

Go through the edit histories for a few articles and begin to identify whether any trends emerge about the types of edits that happen to translated articles. Compare the translated and source articles in their current state. What types of content were added after the translation? Are the articles diverging in content or staying similar? What sorts of discussions occur on the talk pages of translated articles?
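A quick numeric summary can help decide which histories deserve a closer manual read. The sketch below is one possible approach, not a prescribed method: it assumes each revision is a dictionary with `user` and `size` (bytes) keys, ordered oldest first, which mirrors what the MediaWiki Action API returns for `rvprop=user|size`. The function name and the chosen summary fields are hypothetical.

```python
from collections import Counter


def summarize_history(revisions):
    """Summarize a revision list (oldest first) by size changes and editors."""
    if not revisions:
        return {"edits": 0, "net_growth": 0, "largest_change": 0, "top_editors": []}

    sizes = [rev.get("size", 0) for rev in revisions]
    # Byte difference between each revision and the one before it.
    deltas = [later - earlier for earlier, later in zip(sizes, sizes[1:])]
    editors = Counter(rev.get("user", "unknown") for rev in revisions)

    return {
        "edits": len(revisions),
        # Growth since the first revision, i.e. since the initial translation.
        "net_growth": sizes[-1] - sizes[0],
        # Single change with the largest magnitude (sign preserved).
        "largest_change": max(deltas, key=abs, default=0),
        "top_editors": editors.most_common(3),
    }
```

Byte counts only hint at what changed: a large positive change may be a new section, while a large negative one may be a revert or cleanup, so the edit summaries and diffs still need to be read by hand.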

Eventually we can do this in a more robust manner: choosing more carefully which articles to examine, developing more concrete questions to answer, and building a codebook for annotating article histories, content, or discussions.