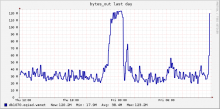

For the past few days, we've been seeing s4 slaves occasionally saturate their network port (1GbE), in spikes that roughly quadruple their normal network usage.

As far as I can see, these can be attributed to img_metadata queries for DjVu files, all coming from search engine IPs (i.e. Googlebot/MSNbot crawling some URL).

Example from a db1070 processlist a moment ago:

SELECT /* ForeignDBFile::loadExtraFromDB 207.46.13.84 */ img_metadata FROM `image` WHERE img_name = 'Dictionary_of_National_Biography_volume_27.djvu' AND img_timestamp = '20121213235036' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.12 */ img_metadata FROM `image` WHERE img_name = 'Popular_Science_Monthly_Volume_68.djvu' AND img_timestamp = '20090517032649' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` WHERE img_name = 'United_States_Statutes_at_Large_Volume_116_Part_1.djvu' AND img_timestamp = '20110920010238' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` WHERE img_name = 'Title_3_CFR_2000_Compilation.djvu' AND img_timestamp = '20111001220528' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.4 */ img_metadata FROM `image` WHERE img_name = 'United_States_Statutes_at_Large_Volume_115_Part_3.djvu' AND img_timestamp = '20110701153753' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` WHERE img_name = 'The_Methodist_Hymn-Book_Illustrated.djvu' AND img_timestamp = '20081102105445' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` WHERE img_name = 'The_Lusiad_(Camões,_tr._Mickle,_1791),_Volume_1.djvu' AND img_timestamp = '20100612172724' LIMIT 1

SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` WHERE img_name = 'Riders_of_the_Purple_Sage.djvu' AND img_timestamp = '20130615001915' LIMIT 1
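To check the attribution above against a larger processlist dump, something like the following Python sketch could tally queries by the caller and client IP embedded in the SQL comment. The helper name `tally_clients` and the `sample` text are hypothetical, and the regex assumes the comment always has the form `/* Caller::method a.b.c.d */` with an IPv4 address, as in the example above.

```python
import re
from collections import Counter

# Assumed comment shape: "/* Caller::method 1.2.3.4 */" (IPv4 only).
COMMENT_RE = re.compile(
    r"/\*\s*(?P<caller>\S+)\s+(?P<ip>\d{1,3}(?:\.\d{1,3}){3})\s*\*/"
)

def tally_clients(processlist_text):
    """Count queries per (caller, client IP) found in SQL comments."""
    return Counter(
        (m.group("caller"), m.group("ip"))
        for m in COMMENT_RE.finditer(processlist_text)
    )

# Hypothetical, shortened sample in the same shape as the processlist above.
sample = (
    "SELECT /* ForeignDBFile::loadExtraFromDB 207.46.13.84 */ img_metadata FROM `image` "
    "SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` "
    "SELECT /* ForeignDBFile::loadExtraFromDB 66.249.67.20 */ img_metadata FROM `image` "
)

# Print the busiest (caller, IP) pairs first.
for (caller, ip), count in tally_clients(sample).most_common():
    print(f"{count:3d}  {ip:15s}  {caller}")
```

Whether a given IP really belongs to a crawler would still need a separate lookup (e.g. reverse DNS); this only groups what the comments report.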

The result of the first query, for example, is a 2.5MB <mw-djvu> XML document; the rest are similar, which explains the network saturation.
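As a rough back-of-the-envelope check of why results of this size can fill the port, the Python sketch below estimates how many such queries per second it would take. It assumes every result is about 2.5MB (decimal megabytes) and ignores protocol overhead and all other traffic on the link; the function name `queries_to_saturate` is just for illustration.

```python
LINK_BITS_PER_SEC = 1_000_000_000  # 1GbE, as stated in the report
RESULT_BYTES = 2.5 * 1_000_000     # ~2.5MB per result (assumed decimal MB)

def queries_to_saturate(link_bits_per_sec, result_bytes):
    """Queries per second whose results alone would fill the link."""
    link_bytes_per_sec = link_bits_per_sec / 8
    return link_bytes_per_sec / result_bytes

print(queries_to_saturate(LINK_BITS_PER_SEC, RESULT_BYTES))
```

Under those assumptions the figure comes out at around 50 queries per second per slave, a rate that a couple of crawlers working through DjVu file pages could plausibly reach.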