What's requested:

For upload/editing/viewing features:

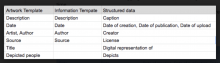

- Quarterly comparison of metadata on files with a common template, such as the information template and artwork template.

- Comparison of editing activity on file pages with and without structured data, measured 60 days or more after the file is uploaded.

- Quarterly measurement of media containing structured fields in non-English languages.

For search features:

- Clickthrough rate

- Clicks or scrolls to more results

Why it's requested:

To evaluate how we're doing on these grant metrics:

https://commons.wikimedia.org/wiki/Commons:Structured_data/Annual_Plan_2018-19#CDP_Targets

When it's requested:

As early as possible in Q2, and then again in Jan 2020.

Other helpful information:

I feel like I made a task for this a few months ago, but now I can't find it. If there is another task with the same requests, please do merge :)