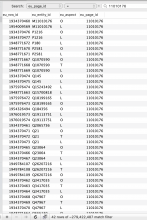

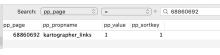

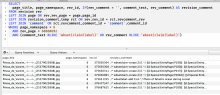

@Abit needs data about how many file pages on Commons contain at least one structured data element. Ideally I could check every day, but we'll need to know every couple of weeks at least.

We cannot rely solely on the search results for this data because we are still tweaking the search indexing, and the search results have been incorrect several times, in one case wildly so (showing 200,000 captions instead of 1.7 million, iirc).