Description

Sanity check the new search experience

Goals

- To see if there are any major usability issues that we may have overlooked

- To see the breakdown of how people submit their search:

  - (a) click on a suggested result

  - (b) press Enter on their keyboard

  - (c) click the Search button

- To see what people think the “Search” button will do

- To see what people think the “Search pages containing X” option will do

Study

On usertesting.com we asked people to complete a set of tasks, some of which required them to search for specific articles (without explicitly telling them to use the search box). There were two groups:

- Group 1

  - 17 people

  - People in group 1 searched for Egypt, Cave art, Banana, and Purple

- Group 2

  - 15 people

  - People in group 2 searched for Electricity, Banana, Willow trees, Romeo and Juliet, and Purple

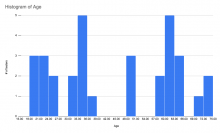

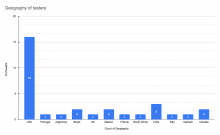

There was a mix of ages and geographies in both groups:

| age breakdown | geography breakdown |

Findings

- Nobody had issues using search

- There were a total of 117 searches submitted:

  - 86 were submitted via a suggested result

  - 19 were submitted via the Enter key

  - 12 were submitted via the Search button

- Regarding what people thought clicking the Search button would do:

  - 16 of the 23 people who answered thought it would take them to the first result

  - 7 of the 23 people who answered thought it would take them to a list of results

  - Note: everyone assumed that the Enter key and the Search button do the same thing

- Regarding what people thought clicking "Search for pages containing Purple" would do:

  - All 25 people who answered thought it would take them to a list of pages that have the word "purple" in them
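For a quick read on proportions, the counts in the findings can be turned into percentages. The snippet below is only a sketch: the names `submissions` and `share` are made up for illustration, and the numbers are copied directly from the list above.

```python
# Counts copied from the findings; the variable names are illustrative only.
submissions = {
    "suggested result": 86,
    "Enter key": 19,
    "Search button": 12,
}

# Should equal the 117 searches reported in the findings.
total = sum(submissions.values())


def share(count, whole):
    """Return count as a percentage of whole, rounded to one decimal place."""
    return round(100 * count / whole, 1)


shares = {method: share(count, total) for method, count in submissions.items()}

for method, pct in shares.items():
    print(f"{method}: {submissions[method]} of {total} ({pct}%)")

# Expectations for the Search button, out of the 23 people who answered.
first_result_pct = share(16, 23)
results_list_pct = share(7, 23)
print(f"expected first result: {first_result_pct}%")
print(f"expected list of results: {results_list_pct}%")
```

With groups of 17 and 15 people, these percentages are best treated as rough indicators rather than precise estimates.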

Notes:

- in the first study people started on the Pancake article and were then asked to go to the article about Egypt. It was ambiguous how they were supposed to get there. 7 out of 10 people tried to find an "Egypt" link within the Pancake article

- one person wondered why the Cave art search result didn't have an image, which made me wonder whether articles without images look lower quality next to the ones that have them

- in two cases the search results loaded quite slowly and those people used the Search button

- few people used their keyboard to navigate down to suggested search results (maybe 2 or 3)