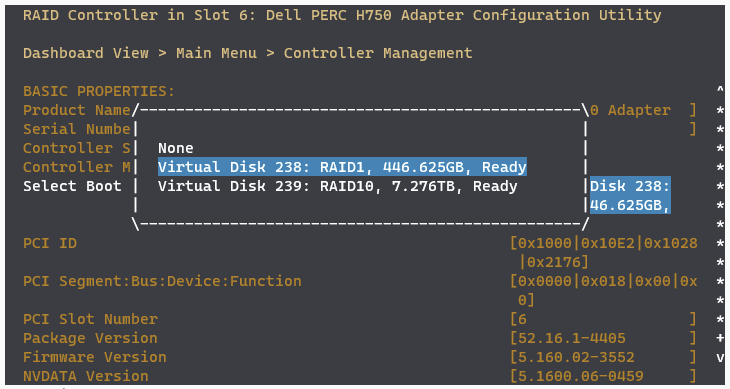

Dell is forcing a move from the older PERC 730 controller to the new H750 controller.

https://www.dell.com/en-sg/work/shop/perc-h750-adapter-low-profile-full-height/apd/405-abce

When we moved to the H740 we ran into driver issues. Since we'll have to move to the H750, driver support should be confirmed before we do.

LINUX PERCCLI Utility For All Dell HBA/PERC Controllers: https://www.dell.com/support/home/en-us/drivers/driversdetails?driverid=j91yg

Testing Details

2022-04-21: Rob successfully tested on dumpsdata1007 with "PercCli SAS Customization Utility Ver 007.1910.0000.0000 Oct 08, 2021" on kernel 5.16.11-1~bpo11+1.

- The RAID 1 OS array was set up for installation. While the OS was live, a disk was set to offline and removed, then reinstalled; the array rebuilt automatically and returned to optimal status.
- The procedure is to set the disk to offline. This now handles the missing/spindown steps, so they no longer need to be run separately.
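The offline-then-swap procedure from the test notes could be scripted roughly as below. This is only a sketch under assumptions, not the commands Rob ran: the binary name `perccli64`, the controller/enclosure/slot numbers, and the StorCLI-style `/cX/eY/sZ` addressing are all assumed and should be checked against the PERCCLI version installed on the host. The code only builds and prints the command lines; it does not execute anything.

```python
from typing import List


def drive_path(controller: int, enclosure: int, slot: int) -> str:
    """Build a StorCLI-style drive address such as /c0/e32/s1 (assumed syntax)."""
    return f"/c{controller}/e{enclosure}/s{slot}"


def replacement_commands(
    controller: int,
    enclosure: int,
    slot: int,
    binary: str = "perccli64",  # assumed binary name; may differ per install
) -> List[List[str]]:
    """Return the command lines for a disk swap, in the order they would be run."""
    drive = drive_path(controller, enclosure, slot)
    return [
        # Before pulling the disk: mark it offline (per the notes, no separate
        # missing/spindown steps are needed afterwards).
        [binary, drive, "set", "offline"],
        # After the disk is back in the slot: check rebuild progress.
        [binary, drive, "show", "rebuild"],
        # Finally: confirm the virtual disks are back to optimal.
        [binary, f"/c{controller}/vall", "show"],
    ]


if __name__ == "__main__":
    # Hypothetical IDs: controller 0, enclosure 32, slot 1.
    for cmd in replacement_commands(0, 32, 1):
        print(" ".join(cmd))
```

Printing the commands first, rather than running them, leaves room to confirm the enclosure and slot numbers before any disk is taken offline.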