As this Grafana panel shows, the rate of 429s emitted by Thumbor in eqiad rose by about one order of magnitude on May 24th:

https://grafana.wikimedia.org/d/FMKakEyVz/hnowlan-thumbor-k8s?orgId=1&viewPanel=61&from=now-7d&to=now

This seems to be mostly localized to the rendering of multipage documents, in particular DjVu files.

As a result, work on Wikisource is heavily disrupted:

https://en.wikisource.org/wiki/Wikisource:Scriptorium#ocrtoy-no-text

https://en.wikisource.org/wiki/Wikisource:Scriptorium/Help#Changes_in_the_past_week,_affect_the_Page_namespace.

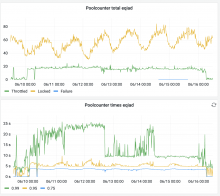

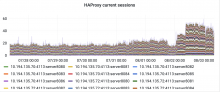

I have verified that the 429s are emitted by Thumbor itself and that Thumbor reports being rate-limited by PoolCounter. However, I'm not familiar enough with Thumbor on k8s to pinpoint the cause.