For analytics/refinery/source, we have a couple of jobs that automate releases to Archiva. They should be migrated to Docker containers.

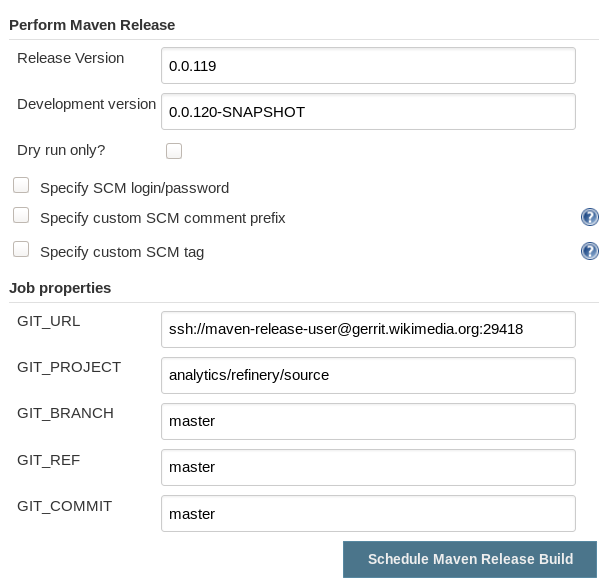

- analytics-refinery-release: https://integration.wikimedia.org/ci/job/analytics-refinery-release/

- analytics-refinery-update-jars: https://integration.wikimedia.org/ci/job/analytics-refinery-update-jars/

Potentially, we could use the same mechanism for other Maven-based repositories, which @Gehel suggested a while back.