On 2018-04-09, FlaggedRevs on Hungarian Wikipedia was reconfigured. Before the change, readers were shown the last reviewed version of each article; afterwards, they were always shown the latest version, and FlaggedRevs was used only to coordinate patrolling activity. Now that the test period for the change is over, we need to evaluate its effect and decide whether to keep it.

The main questions to answer:

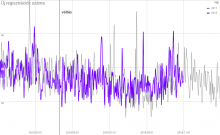

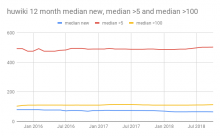

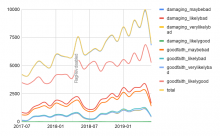

- did the ratio of problematic edits increase after the change?

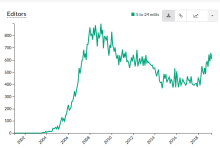

- was new editor retention affected?

A secondary question is how often readers saw bad changes because of the new configuration, and how often they missed new changes because of the old one.

You can find the analysis here.