Overall, enabling no-transform is probably a good thing, as it signals to all proxies that we are against any transformation (e.g. injecting ads): https://tools.ietf.org/html/rfc7234#section-5.2.1.6
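As a rough illustration of what the directive asks of intermediaries, here is a minimal Python sketch of the check a compliant proxy might make before rewriting a response. The function names are hypothetical. The parsing is deliberately simplified: it splits on commas and ignores quoted-string edge cases.

```python
def cache_control_directives(value: str) -> set[str]:
    """Return the lowercase directive names in a Cache-Control header value.

    Simplified parser: splits on commas and drops any "=argument" part,
    so quoted arguments that themselves contain commas are not handled.
    """
    names = set()
    for part in value.split(","):
        name = part.split("=", 1)[0].strip().lower()
        if name:
            names.add(name)
    return names


def may_transform(response_headers: dict[str, str]) -> bool:
    """Hypothetical proxy-side check: a compliant intermediary must not
    transform the payload when the response carries no-transform."""
    # Header names are case-insensitive, so compare them in lowercase.
    for name, value in response_headers.items():
        if name.lower() == "cache-control" and "no-transform" in cache_control_directives(value):
            return False
    return True
```

For example, `may_transform({"cache-control": "max-age=0, No-Transform"})` is `False`, while a response without the directive leaves the proxy free to transform.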

Description

Details

| Subject | Repo | Branch | Lines +/- |
|---|---|---|---|
| varnish: Set Cache-Control: no-transform header | operations/puppet | production | +1 -1 |

Related Objects

- Mentioned In
  - T336715: Investigate relation of Prefetch feature to increase in automated traffic and impact on unique devices
  - T298166: Work out a strategy on Yandex's Turbo Pages
  - T262723: Investigate whether KaiOS web traffic decline is due to Google Web Light
  - T218202: Investigate how Chrome Lite Pages affects us
- Mentioned Here
  - T336715: Investigate relation of Prefetch feature to increase in automated traffic and impact on unique devices
  - T341848: Consult on investigation of relation between UA deprecation and increase in automated traffic
  - M100: Random card interaction
  - T136802: [Epic] Make Wikipedia work on remote browsers (UC mini and Opera mini)
  - T298166: Work out a strategy on Yandex's Turbo Pages
  - T209572: Feature Policy Reporting origin trial
  - rMW9dd76898da94: Add googleweblight to JS blacklist
  - T152602: Spurious Amazon clicks / Banners on googleweblight.com

Event Timeline

I don't have my Android device handy, but this is not applying Google Web Light, correct? Would it be possible for someone to post screenshots of the resultant treatment for our pages?

If I understand correctly, users need to actively enable this feature and it sounds like the hope is that the UI in Chrome settings would be clearer about the interception of the request.

Looping in @JKatzWMF and @atgo, as we've been looking at Google Web Light trends. Active disablement of data saver by the no-transform rule would probably have the side effect of disabling Google Web Light, plus potentially affecting Android Go users.

We should probably meet as a group to discuss further to figure out potential options.

This appears to be quite similar to a thing called "Google Web Light".

- https://en.wikipedia.org/wiki/Google_Web_Light

- T152602: Spurious Amazon clicks / Banners on googleweblight.com

- https://googleweblight.com/?lite_url=https://en.wikipedia.org

- https://support.google.com/webmasters/answer/6211428?hl=en

- rMW9dd76898da94: Add googleweblight to JS blacklist / https://gerrit.wikimedia.org/r/327043

@dr0ptp4kt Ha, comment clash.

Indeed, this is not the same thing. Chrome Lite is a transparent transformation Chrome applies to any URL you're browsing if you qualify (right now I believe the qualification is that you have enabled "Data Saver" mode or are on an effectively slow-2G connection).

The address bar URL does not change; there is no redirect. It's not correlated with Google search results linking to something different (AFAIK).

Its output and visual appearance are also different.

Google Web Light has its own brand and layout that always looks the same (hamburger menu, first-level domain name, info button, then plain content extracted somehow without styling, and low-res images).

Chrome Lite appears to mostly keep the original layout and styles intact, but strips a bunch of scripts and styles it considers optional, and turns images into clickable placeholders.

Compare: [screenshots of Google Web Light and Chrome Lite, side by side]

@Peter can probably provide a screenshot of the settings where this is enabled; indeed, nowhere does it mention that enabling it means Google gets to intercept the full URLs of everything you visit. In fact, Google misleads the user into thinking that we served the request, since our domain is displayed in the URL bar, not Google's.

For me it looks like this. The popup appears when I force 2G connections, so I'm not sure when it happens for real users:

They also fixed the missing search/navigation in Timo's screenshot after I tweeted about it. The documentation says they can apply different rules in different places, so I'm not sure everyone sees the same behavior as on my phone. As in the documentation in the description: some changes happen browser-side, some server-side (but showing our URL).

They've now also added a small popup the first time you visit a "Lite page":

I updated to stable 73 today, since it became available in the Play Store.

Maybe we can work our way out of this by telling Google that they can't display our URL for content that comes from their servers without our approval? There might be legal grounds for this, even.

It seems that in the screenshot from @Peter the proxying is now fairly clear. Obviously the visual nudge is to actually turn on the feature, but the terminology seems pretty clear. In this regard, this puts it more on par with something like Opera.

I would prefer if the wording "Lite page provided by" gets changed to "Lite page served via". The introduction of the clear brand identifier was intentional, which I suspect has partly helped users distinguish their browsing context, and so it would be helpful to ensure that people don't get confused about the actual content provider.

One thing I would like to understand is if we can measure this access in our web logs. It would be problematic to not be able to understand the impact of the traffic. Ideally it would be nice to know how often users are hitting this condition, as it potentially suggests that we should figure out an intervention in our serving approach.

@dr0ptp4kt, https://blog.chromium.org/2019/03/chrome-lite-pages-for-faster-leaner.html says it supports the Reporting API, https://developers.google.com/web/updates/2018/09/reportingapi (adding a URL as a report header), but I haven't looked into it.

We could ensure that people aren't confused with a survey aimed at users who have this turned on. But I suspect that Google's reprocessing removes all of our JavaScript, given how extreme it looks. And we need a way to detect when it's Google's proxy making the request. As it stands, it's unclear whether they use a different Google bot for this or repurpose the HTML they already index for their search engine (the latter would mean we can't even inject HTML for the Lite case).

The ability to serve a custom message to Lite pageviews would be critical if the outcome of such a survey is that a majority of people have no idea that their full browsing history is being recorded by Google as a result of this feature. While the messages that Peter has shown make the data pipeline clear to us, it takes a certain level of computer literacy to figure that out, particularly when the URL you see is a Wikipedia one. It's not like the feature constantly reminds you of the privacy implications of a setting you turned on once upon a time.

The Reporting API is simple to use; what needs building is an ingestion point for it. For Feature Policy Reporting (T209572), my plan was to feed those reports (a different report type, also delivered via the Reporting API) to Logstash, but you probably want to send these elsewhere, since they constitute a different kind of pageview.
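To make the "ingestion point" more concrete, here is a minimal sketch of the parsing step. It assumes the delivery format from the Reporting API draft: a POST with Content-Type `application/reports+json` whose body is a JSON array of report objects (with members such as `type`, `age`, `url`, `user_agent` and `body`). The function name and the grouping by `type` are illustrative only. Routing each group to Logstash or to a separate pageview stream is left out.

```python
import json
from collections import defaultdict


def group_reports(content_type: str, raw_body: bytes) -> dict[str, list[dict]]:
    """Parse a Reporting API delivery and group the reports by their "type".

    Returns an empty mapping for payloads that are not application/reports+json
    or are not a JSON array, so malformed deliveries are simply dropped.
    """
    media_type = content_type.split(";", 1)[0].strip().lower()
    if media_type != "application/reports+json":
        return {}
    try:
        reports = json.loads(raw_body)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return {}
    if not isinstance(reports, list):
        return {}
    grouped: dict[str, list[dict]] = defaultdict(list)
    for report in reports:
        # Keep only objects that carry a string "type" (e.g. "network-error").
        if isinstance(report, dict) and isinstance(report.get("type"), str):
            grouped[report["type"]].append(report)
    return dict(grouped)
```

Grouping by type keeps different report kinds (network errors, policy violations, a hypothetical Lite-page signal) separable, so each can go to a different sink.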

Hmm, I enabled it again today to test it out, and now the "Lite" bubble is gone and there's nothing signaling that I'm using a Lite page. I could tell I was on a Lite page, though, since no images were loading. Have we seen any drop in traffic since it was released?

We got feedback in the upstream issue that there's no way to test today but they will add a flag: https://bugs.chromium.org/p/chromium/issues/detail?id=956685#c13

In Chrome 77 it will be possible to "try" it out again: https://cs.chromium.org/chromium/src/components/previews/README

Wanted to add one concern that I raised in the upstream issue for Chromium: there's a risk that Lite pages give our users worse performance and lock them into the Google universe. Let me explain.

When a user accesses Wikipedia through Chrome, Lite pages will kick in if the page takes more than X to render. When that's the case, no responses will be stored in the browser cache. That means the next page the user visits will again hit a cold cache and take longer to render, so the Lite page can kick in again, and the same thing will happen over and over. The normal behavior for users accessing Wikipedia on a slow connection is that the first page is slow, but then we have stored JavaScript and CSS in the browser, which makes the next pageview faster.

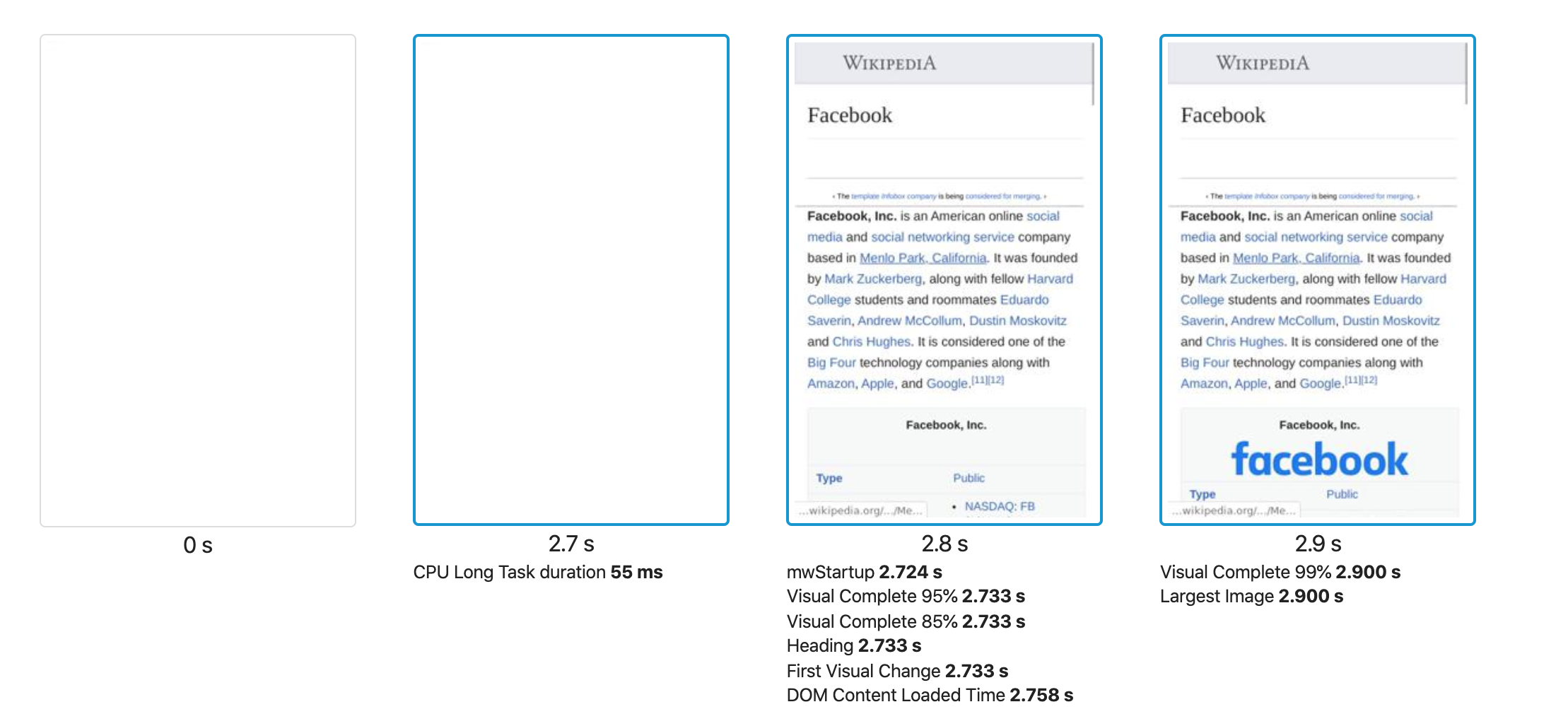

What does it look like? If a user hits our Facebook article on an emulated 3G connection, the page starts to render at 2.8 seconds.

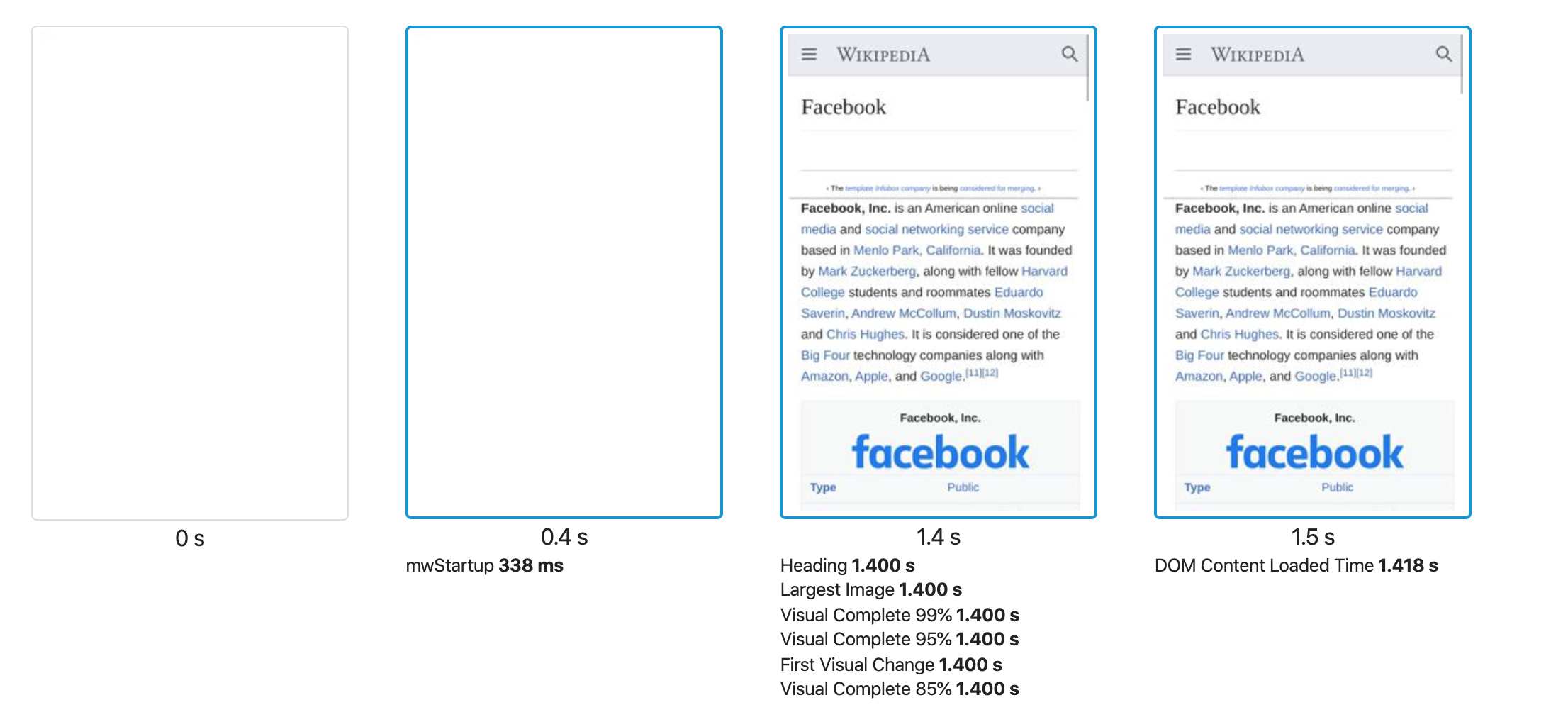

If the user instead comes from the Wikipedia main page and then hits the Facebook article with the same connection type, the page starts to render at 1.4 s. That's because content is already cached in the browser.

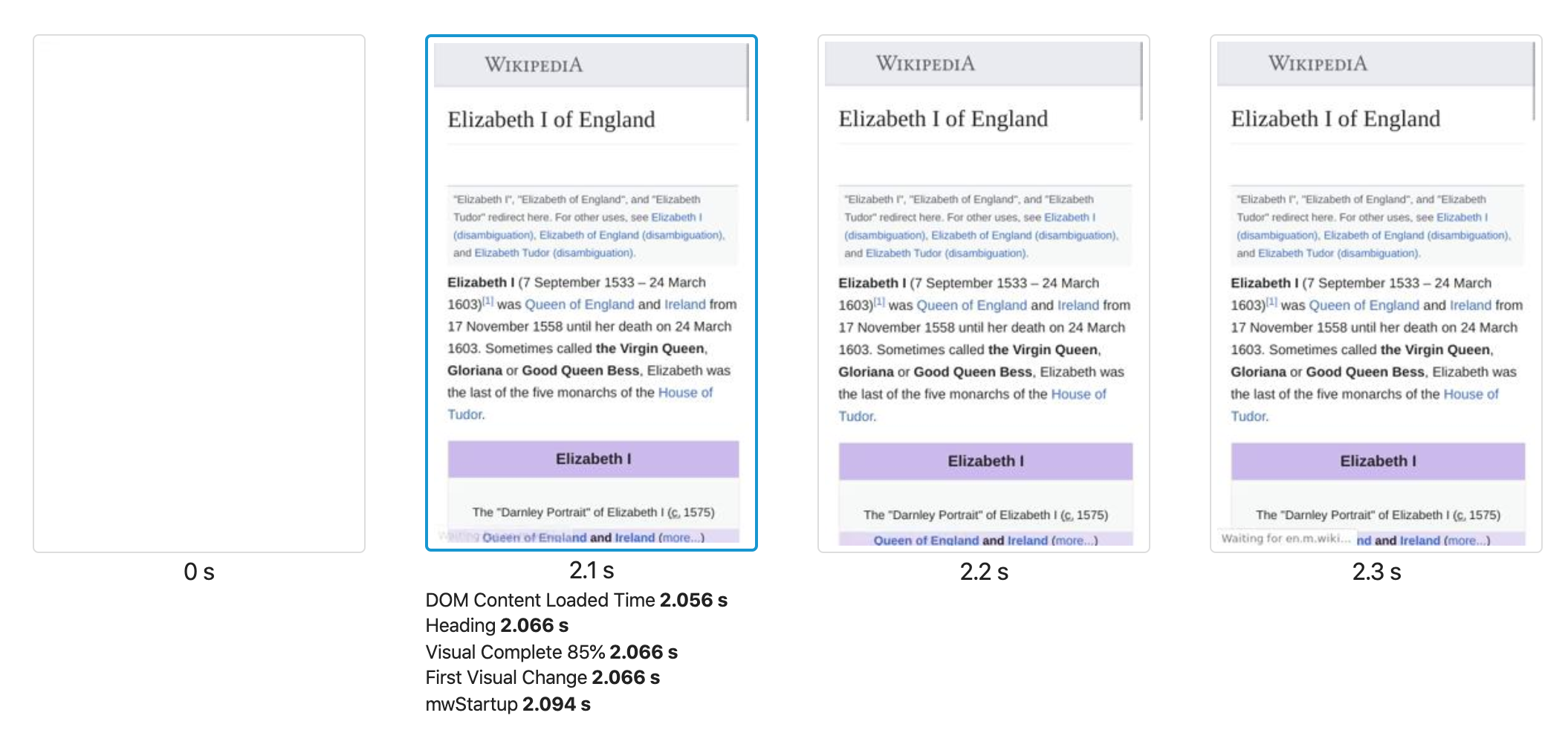

This will be the same for all pages. Here's another example: the user goes directly to https://en.m.wikipedia.org/wiki/Elizabeth_I_of_England:

But if the user first visits https://en.m.wikipedia.org/wiki/Edward_VI_of_England and then after that goes to https://en.m.wikipedia.org/wiki/Elizabeth_I_of_England:

With the cache, the page starts rendering at 0.4 s instead of 2.1 s. The browser cache is really important for making pages render fast for the user, and it gets bypassed/removed when users hit a Lite page.
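The feedback loop described above can be made concrete with a toy model. This is a hypothetical sketch, not Chrome's actual heuristic, which is a black box. It makes three assumptions:

- A Lite page is served whenever the expected render time exceeds some threshold.
- A Lite page leaves the browser cache cold.
- The start-render times measured above (2.1 s cold, 0.4 s warm) are fixed inputs.

The threshold value itself is arbitrary.

```python
def simulate_session(pageviews: int, cold_s: float, warm_s: float,
                     lite_threshold_s: float) -> list[str]:
    """Toy model of a browsing session under a hypothetical Lite-page heuristic.

    Each pageview is either served by us ("origin") or replaced by a Lite
    page ("lite"). Only origin pageviews warm the browser cache; a Lite page
    stores nothing, so the next pageview is still a cold-cache pageview.
    """
    cache_warm = False
    served = []
    for _ in range(pageviews):
        expected = warm_s if cache_warm else cold_s
        if expected > lite_threshold_s:
            served.append("lite")    # cache stays cold
        else:
            served.append("origin")
            cache_warm = True        # JS/CSS now cached for the next pageview
    return served
```

With an assumed 1.0 s threshold, `simulate_session(3, 2.1, 0.4, 1.0)` serves three Lite pages in a row, even though a warm-cache pageview (0.4 s) would have been under the threshold. With a threshold above 2.1 s, every pageview is served by us and the cache warms after the first one.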

I think this largely depends on the heuristics Google uses to determine whether the feature should turn on. With all of it being a black box, it's indeed possible that something like you describe could happen.

Just want to share that when updating to the latest Chrome on my Android phone, I get pushed to turn on Lite mode/Lite pages:

I think this makes a lot more people use it than if you let them enable it themselves.

Hi all -- I wanted to check in on this ticket to see if anyone knows why the discussion stopped back in 2020 (I assume it just got lost in early pandemic adjustments). Another instance of a lite technology (this one from Yandex) has popped up (T298166), and I'm advocating for trying a general solution to these, like the no-transform header discussed here. Unfortunately, no one clearly owns this ticket, so I'm not sure where the responsibility lies. But I'd welcome any thoughts on what it would take to add the header (or reasons why we shouldn't).

Hi @Isaac, if I remember correctly, no-transform was stopped by management. I can check if I can find anything in my emails (it could have been a meeting, though).

I don't remember exactly how it works right now: do we report data through https://developers.google.com/web/updates/2018/09/reportingapi? I guess that's the only way to know how many users get the Google Lite pages. That would be interesting to know before we change anything.

> if I remember correctly no-transform was stopped by management, I can check if I can find anything in my emails (it could have been a meeting though).

Thanks for chiming in @Peter! Yeah, I'd love to hear the rationale for that if you can find it (or if someone can corroborate). My scan of tickets suggested there was broad consensus, with regard to these technologies, that Wikipedia was already quite lightweight and that we should just implement improvements on our end rather than rely on external transformations.

> I don't remember exactly how it works right now, do we report data through https://developers.google.com/web/updates/2018/09/reportingapi?

We do seem to have a report-to header that points to https://intake-logging.wikimedia.org/v1/events?stream=w3c.reportingapi.network_error&schema_uri=/w3c/reportingapi/network_error/1.0.0, but I don't know if that's helpful for answering your question.

I don't really know anything about Google Lite, but we do have some data on Google Weblight. It's a very similar technology, but one that does ping our servers when it transforms pages; it's not fully clear to me whether it does so every time or caches transformed content for up to 24 hours, per their documentation. When I try to load a Googleweblight page per their directions, I just get redirected to Wikipedia. It's hard to know whether this is specific to my setup or whether it really doesn't work for any users right now. That said, we see on the order of 2.5 million pageviews from Google Weblight per day (sample query below). The user agent of their web requests contains "weblight", so they're easy to identify, though we can't say much more than that because the IP address is for Google servers. That's at least a lower bound on how many pageviews come through this sort of service.

My main question -- and what I can't check because I can't get Google Weblight to load for me -- is how much faster those pages actually load. My intuition is that these technologies don't reduce page size enough to warrant the other privacy, analytics, etc. issues they can introduce. I did check en:Yandex vs. the Yandex-hosted enwiki Turbo page, and our version was smaller, made fewer requests, and loaded faster, but that's an n of 1.

    SELECT
      COUNT(1) AS num_requests
    FROM wmf.pageview_actor
    WHERE
      year = {year}
      AND month = {month}
      AND day = {day}
      AND is_pageview
      AND user_agent LIKE '%weblight%'

Hi @Isaac, I didn't find any email about it, so it was a meeting-only decision.

I've been thinking about this, and I think it's a good idea to add no-transform; let me know if I can help out in any way.

> My main question -- and what I can't check because I can't get Google Weblight to load for me -- is how much faster those pages actually load.

I'm pretty sure they can make Wikipedia faster than we can serve it. But as long as they (proxy browsers/Lite pages) convert our pages, we won't get any statistics on how slow our pages are, so there will be no incentive for us to make them faster. A couple of years ago I tried to measure the difference but had no luck at the time, and it's actually really hard to make sure you compare them correctly.

We have two problems with the speed for some of our users:

- Latency. Google and others can serve content closer to our users. If we have more cache locations we could fix that.

- We serve too much data on mobile. It's not only the amount of JavaScript but also the amount of HTML (see https://bugs.chromium.org/p/chromium/issues/detail?id=849106I). If you are on a slow device and a slow network, the pages will be slow. IMHO, the best way to fix that is to do our own version of https://engineering.linkedin.com/blog/2018/03/linkedin-lite--a-lightweight-mobile-web-experience. That would also be the best way for us to make sure Wikipedia's content is accessible from all around the world, and it would be a perfect goal for the next year :D

> My intuition is these technologies don't reduce page size enough to warrant the other privacy, analytics, etc. issues they can introduce.

I totally agree. Over the years there have been problems with the content (see T136802), and I'm not sure whether we currently run any tests to verify that Wikipedia works in proxy browsers. For Chrome Lite, there hasn't been a way to test it either (see https://bugs.chromium.org/p/chromium/issues/detail?id=956685).

With Chrome Lite there's also a lock-in effect that is being neglected (I asked about it in the same bug report, https://bugs.chromium.org/p/chromium/issues/detail?id=956685). The problem is that we rely on content being cached in the browser. If Chrome starts serving Lite content whenever we are "too slow", every pageview will stay slow: our content never gets cached in the browser, so the Lite version will continue to be served.

Hi all!

I tend to agree with @Peter's various rationales that adding Cache-control: no-transform to our user-facing header outputs is probably the right move at this time.

Cache-Control is fairly important and does a ton of overloaded jobs at every layer. We already have lots of functional signalling over this header from various application layers to our traffic caches for important cases. Our traffic caches ingest it, modify it internally, and modify it again on the user-facing side in various conditional ways, for important reasons as well. Given these complexities and the nature of the need here, it would probably make the most sense to have our traffic cache layers be the ones to inject no-transform universally into our user-facing output headers. It would be fairly trivial in terms of tech work at our level to add this.
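A minimal sketch of the "inject universally at the edge" idea follows. It is written in Python rather than VCL purely for illustration, and is not the actual Varnish change from the related patch. It appends no-transform to whatever user-facing Cache-Control value the cache layers have already produced. Existing directives are left in place, and the directive is never duplicated. The function name is hypothetical.

```python
from typing import Optional


def add_no_transform(cache_control: Optional[str]) -> str:
    """Return a user-facing Cache-Control value that includes no-transform.

    Existing directives are kept in their original order (whitespace around
    commas is normalized); no-transform is appended only if it is not already
    present, matching directive names case-insensitively.
    """
    if not cache_control or not cache_control.strip():
        return "no-transform"
    parts = [p.strip() for p in cache_control.split(",") if p.strip()]
    names = {p.split("=", 1)[0].strip().lower() for p in parts}
    if "no-transform" not in names:
        parts.append("no-transform")
    return ", ".join(parts)
```

Doing this as the last step on the user-facing side leaves all the internal, application-to-cache signalling in Cache-Control untouched, which is the reason given above for putting it in the traffic layer.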

However, some concerns should possibly be considered and/or addressed:

- There was a reference to some earlier managerial decision to avoid the header? It was at least years in the past at this point, and possibly tech circumstances and/or managerial opinion has changed. If there's no known rationale and no known record of the decision, though, it's hard to give it much weight, unless someone knows someone specific they can ask about this.

- There's a reference (from 2016, so take it with a grain of salt) suggesting that Google used to say openly that CC:n-t might negatively impact search results, cited in this SO comment: https://stackoverflow.com/questions/20134257/any-reason-not-to-add-cache-control-no-transform-header-to-every-page#comment60132684_31963165 . The reference link they provide there no longer contains that language. Is there any concern on this front?

- Are there any known cases (specific APIs or URL subspaces in general) where there's some reason we really need to *avoid* adding this header, for some reason not immediately apparent to me? I can't think of any specific case where it would be harmful, but perhaps I'm lacking imagination!

I'm not aware of prior management decisions or risk assessments prohibiting no-transform, and I'm only seeing upside to making this change. The evidence we have seems to suggest three things: performance issues are negligible, we would gain analytics in areas where we are seeking growth, and we would improve security and privacy by not being proxied. This also feels like a relatively easy fix. I'm supportive of making this change and happy to discuss other options. But in the interest of mitigating immediate concerns and risks, this seems like a good fit, so can we go ahead and add no-transform?

Yandex doesn't indicate that it will adhere to this particular header.

If others agree with my analysis that this is not the result of a general optimizer transformation but an intentionally built product by Yandex, then I think it may be in our best interest to try to contact Yandex first. I would not be surprised if, e.g., Yandex perceived our change as a bug that breaks their app and then overrode the signal.

I support the proposed technical change, which we believe has a chance of disabling Yandex's Wikipedia viewer. The brand, performance, stats impact, etc. seem clear, and it's unlikely we'd want to collaborate on anything other than turning it off. But I also think this is non-urgent. It's worth a shot to let them know and give them a chance to explain, and perhaps have them turn it off first, as a courtesy and future investment. We can still go ahead with our no-transform header after that to keep it off (if the Yandex system supports that).

Before we touch no-transform I'm requesting that @Maryana and team make a connection with the downstream orgs to see if we can get a read on things.

If we can't get a satisfactory read, I'm unopposed to no-transform experimentation, but would you please share the experimentation plan with me, @kzimmerman, and @ovasileva for review?

This is also interesting: the upcoming Chrome prefetch proxy on Android is rolling out as a test (https://github.com/buettner/private-prefetch-proxy).

Chrome Lite pages will be removed with Chrome 100 (https://support.google.com/chrome/thread/151853370/sunsetting-chrome-lite-mode-in-m100-and-older?hl=en), which will be released on March 29; that's why we do not need to add no-transform.

Argh I was confused. Chrome Lite (=you turn it on in Chrome on Android) is removed in Chrome 100, not the Lite pages. Sorry!

Hi all, we received some info from Google which may help inform this decision.

Chrome technically does still have a "Chrome Lite Mode" feature, which was previously known as "Data Saver". However, as @Peter noted, that feature has been removed with Chrome M100.

There are also "Google Lite Pages", otherwise known as WebLite. This is a Google Search feature that creates simplified versions of websites, and links to those sites from Google Search instead of the original page. Google believes that feature is technically still active, but if it still exists, it is in maintenance mode.

For a short time, these Google Lite Pages were served to users with Chrome Lite Mode enabled. But that feature has been gone for years now and only ever worked on http:// pages, not https:// (so it shouldn't impact Wikipedia).

All to say that we shouldn't have seen an impact from Chrome Lite as of March 29th, and switching on no-transform is not expected to have a negative impact on Wikimedia search ranking or other product experiences. So we'll leave it to the Traffic and Security folks to decide whether or not they'd like to implement no-transform moving forward.

I've adjusted the title/body to reflect this change in ticket scope. Traffic still needs to determine whether or not to pursue this.

Change 917954 had a related patch set uploaded (by BCornwall; author: BCornwall):

[operations/puppet@production] varnish: Set Cache-Control: no-transform header

I got in contact with @Maryana and they found an email thread on the subject last March. From Mr. Perry:

> Yandex has responded to say they’ve removed Wikipedia from their search results for users of their Turbo pages (i.e. light experience).
>
> Their search team has also passed our request on to additional Yandex teams who may be surfacing Wikipedia within their light experiences. They believe these instances will also be discontinued shortly. I’ll follow up again once I receive confirmation that has happened.

@BBlack, @dr0ptp4kt With this in mind, is this something we still want to pursue for staving off similar future mangling?

@BCornwall thanks for the prompt - no strong opinion here. I'm looping @SCherukuwada and @ovasileva in case they have any take from a core experiences perspective.

Setting this as stalled since there's been no response from @Vgutierrez, @ssingh, @SCherukuwada or @ovasileva.

@BCornwall and others -- I want to connect a separate but related thread here because we have all the right people to make these decisions and implement the changes but happy to split this into a separate ticket if need be.

Based on some analysis of reader traffic, it seems that Google Chrome has been doing some pre-fetch of our site content for a while in an effort to speed up the reader experience. There is a way to turn off this pre-fetching if we're concerned about it. You can see details about the potential scale in this ticket (T341848#9014932) and more details about the feature in this blogpost: https://developer.chrome.com/blog/private-prefetch-proxy/

In particular, linking to this section which has details about how to configure/disable this pre-fetching if that's a route we want to go down.
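For orientation, here is a hedged sketch of the two knobs that the Chrome private prefetch proxy documentation describes. Treat the details below as assumptions to verify against the current docs:

- **Opting out:** a "traffic advice" file served at `/.well-known/traffic-advice` with media type `application/trafficadvice+json`, containing a JSON array with an entry for the `prefetch-proxy` user agent and `"disallow": true`.
- **Detecting prefetches:** requests that carry a `Sec-Purpose` header whose value starts with `prefetch` (e.g. `prefetch;anonymous-client-ip`), which might also help the analytics questions in T336715.

The function names are hypothetical.

```python
import json

# Assumed path and media type for the traffic-advice opt-out (verify first).
TRAFFIC_ADVICE_PATH = "/.well-known/traffic-advice"
TRAFFIC_ADVICE_MEDIA_TYPE = "application/trafficadvice+json"


def traffic_advice_body(disallow_prefetch_proxy: bool) -> str:
    """Build a traffic-advice document addressed to the prefetch proxy."""
    advice = [{"user_agent": "prefetch-proxy", "disallow": disallow_prefetch_proxy}]
    return json.dumps(advice)


def is_prefetch_request(headers: dict[str, str]) -> bool:
    """Heuristic: treat a request as a prefetch if its Sec-Purpose header's
    first token is "prefetch" (e.g. "prefetch;anonymous-client-ip")."""
    for name, value in headers.items():
        if name.lower() == "sec-purpose":
            return value.split(";", 1)[0].strip().lower() == "prefetch"
    return False
```

Serving `traffic_advice_body(True)` at that path would be the "turn it off" route. Tagging requests with `is_prefetch_request` would instead keep prefetching on but make it measurable.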

@Isaac Sorry for the horrendous response timing. I think that this would be best created in a new ticket. Thanks for bringing it up, though!

> Sorry for the horrendous response timing. I think that this would be best created in a new ticket. Thanks for bringing it up, though!

Hah, no worries. I was out the last few weeks so am a bit out of the loop on this one but I think the right questions are being asked at T336715#9137875 so I'm going to let that play out before splitting out a new ticket.

@BBlack seems to agree that this header belongs. @Vgutierrez, do you still have reservations about this addition?

The scope of this ticket's decision-making goes well beyond the Traffic team. As such, we need more cross-functional input before we can merge this patch. Please add any other teams that would be worth including in this ticket. For now, Traffic is unable to action this any further and we'll change our priorities as such. Thanks!