The scap run with the l10n cache (CDB) rebuild took more than an hour:

```
2019-05-14 - production 14:47 <hashar@deploy1001> Finished scap: testwiki to 1.34.0-wmf.5 and rebuild l10n cache (duration: 62m 47s)
```

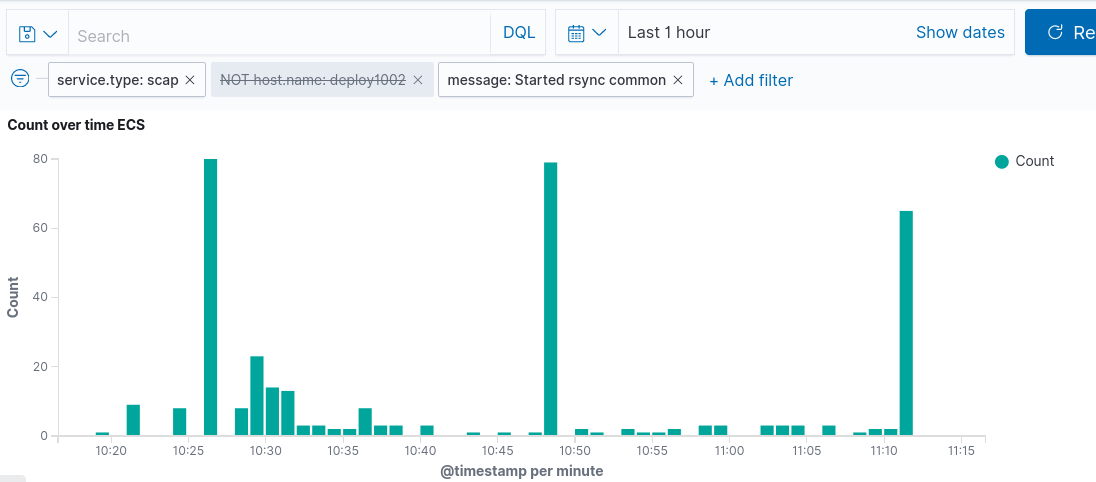

While running a preliminary scap sync to rebuild the l10n cache, I noticed the scap output was stuck on the CDB rebuilds at 80%. I kept pressing Enter; each press made the progress advance a little, and eventually that flushed whatever queue was stalled and the script progressed again:

```
2019-05-14T14:15:35 mwdebug1002 merge_cdb_updates Updated 417 CDB files(s) in /srv/mediawiki/php-1.34.0-wmf.5/cache/l10n
```

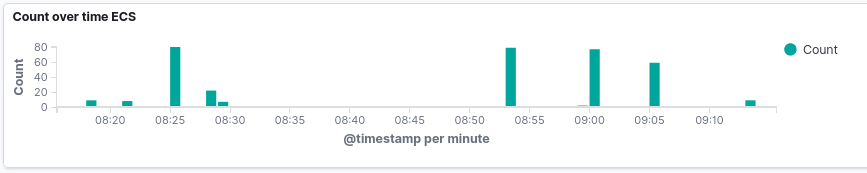

There was no log output for half an hour, then (I guess) I pressed Enter and:

```
deploy1001 2019-05-14T14:47:23 Finished scap-cdb-rebuild (duration: 45m 10s)
2019-05-14T14:47:23 Started sync_wikiversions
2019-05-14T14:47:23 Compiled /srv/mediawiki-staging/wikiversions.json to /srv/mediawiki-staging/wikiversions.php
```
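To put numbers on the stall, here is a small sketch that only uses the timestamps and the duration quoted in the log lines above. It assumes the mwdebug1002 `merge_cdb_updates` line was the last output before the stall; the variable names are made up for illustration.

```python
from datetime import datetime, timedelta

# Timestamps and duration copied from the log excerpts in this task.
LAST_MWDEBUG_LOG = "2019-05-14T14:15:35"  # mwdebug1002 merge_cdb_updates
CDB_REBUILD_DONE = "2019-05-14T14:47:23"  # Finished scap-cdb-rebuild
CDB_REBUILD_DURATION = timedelta(minutes=45, seconds=10)


def parse(ts: str) -> datetime:
    """Parse the ISO-like timestamps scap prints (no timezone)."""
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%S")


# Time with no log output between the last mwdebug1002 line and the finish.
silent_gap = parse(CDB_REBUILD_DONE) - parse(LAST_MWDEBUG_LOG)

# When scap-cdb-rebuild must have started, given its reported duration.
implied_start = parse(CDB_REBUILD_DONE) - CDB_REBUILD_DURATION

print(f"silent gap: {silent_gap}")                   # silent gap: 0:31:48
print(f"rebuild started: {implied_start:%H:%M:%S}")  # rebuild started: 14:02:13
```

So roughly 32 of the 45 minutes spent in scap-cdb-rebuild passed without any output, which matches the "half an hour without any log" observation.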