Background

Follow-up from T148442. In a nut shell:

SyntaxHighlight is a simple extension that uses the Pygments open-source project. It works "okay" one or twice on some page, and works "okay" when used many times on a popular and well-cached Wikipedia article.

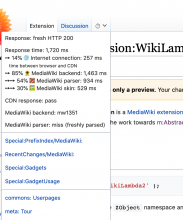

Its performance is terrible when it is used extensively on technical documentation pages due to each syntax highlight block adding a high cost slowing down the backend page parsing time. And because these pages are relatively unpopular (compared to Wikipedia) their readers are more often the first to visit such page after the cache expires, thus getting a fresh parse upon view.

Worse, there are pages that have become inaccessible due to timeouts (page parsing taking more than the 60 second limit), that power users may try to reload many times in the hopes that one random attempt will take less time and prime the cache for a few hours or days before it fails again.

Example: https://www.wikidata.org/wiki/Wikidata:SPARQL_query_service/queries/examples