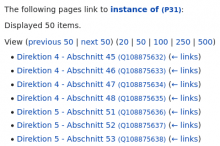

Loading https://www.wikidata[.]org/w/index.php?title=Special%3AWhatLinksHere&target=Property%3AP31&namespace=1&invert=1 takes more than thirty seconds, and at that cost it could easily be turned into a DDoS attack vector.

Users are already hitting it, which is how I found it in the slow query logs:

https://logstash.wikimedia.org/app/discover#/doc/logstash-*/logstash-mediawiki-2021.12.14?id=DoYeuH0B-N5J53KJweTL

The query:

SELECT page_id,page_namespace,page_title,rd_from,rd_fragment,page_is_redirect FROM (SELECT pl_from,rd_from,rd_fragment FROM `pagelinks` LEFT JOIN `redirect` ON ((rd_from = pl_from) AND rd_title = 'P31' AND rd_namespace = 120 AND (rd_interwiki = '' OR rd_interwiki IS NULL)) WHERE pl_namespace = 120 AND pl_title = 'P31' AND pl_from_namespace IN (0,2,3,4,5,6,7,8,9,10,11,12,13,14,15,120,121,122,123,146,147,640,641,828,829,1198,1199,2300,2301,2302,2303,2600) ORDER BY pl_from ASC LIMIT 102 ) `temp_backlink_range` JOIN `page` ON ((pl_from = page_id)) ORDER BY page_id ASC LIMIT 51
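The long IN (...) list in the query appears to come from the namespace=1&invert=1 pair in the URL: rather than filtering on one namespace, the query has to cover every namespace except the selected one. Below is a minimal sketch of that expansion. It is not MediaWiki's actual code; the function name `effective_namespaces` is hypothetical, and the namespace list is an assumption reconstructed from the logged query plus the excluded namespace 1.

```python
from urllib.parse import parse_qs

# Query string copied from the slow URL above.
QUERY_STRING = "title=Special%3AWhatLinksHere&target=Property%3AP31&namespace=1&invert=1"

# Assumption: the wiki's namespace IDs, reconstructed from the IN (...) list
# in the logged query plus namespace 1, which the URL asks to exclude.
ALL_NAMESPACES = [
    0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15,
    120, 121, 122, 123, 146, 147, 640, 641, 828, 829,
    1198, 1199, 2300, 2301, 2302, 2303, 2600,
]


def effective_namespaces(query_string, all_namespaces):
    """Return the namespace IDs a backlink query would have to cover (hypothetical helper)."""
    params = parse_qs(query_string)
    if "namespace" not in params:
        # No namespace filter at all: every namespace is in scope.
        return list(all_namespaces)
    selected = int(params["namespace"][0])
    inverted = params.get("invert", ["0"])[0] == "1"
    if inverted:
        # invert=1 flips the filter: everything except the selected namespace.
        return [ns for ns in all_namespaces if ns != selected]
    return [selected]


namespaces = effective_namespaces(QUERY_STRING, ALL_NAMESPACES)
print(len(namespaces), namespaces[:5])
```

With the URL above, this yields the same 32 namespaces as the logged IN (...) list. Without invert=1, the filter would be a single namespace.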

Explain:

*************************** 1. row ***************************

id: 1

select_type: PRIMARY

table: <derived2>

type: ALL

possible_keys: NULL

key: NULL

key_len: NULL

ref: NULL

rows: 102

Extra: Using filesort

*************************** 2. row ***************************

id: 1

select_type: PRIMARY

table: page

type: eq_ref

possible_keys: PRIMARY

key: PRIMARY

key_len: 4

ref: temp_backlink_range.pl_from

rows: 1

Extra:

*************************** 3. row ***************************

id: 2

select_type: DERIVED

table: pagelinks

type: range

possible_keys: pl_backlinks_namespace,pl_namespace

key: pl_backlinks_namespace

key_len: 265

ref: NULL

rows: 205355406

Extra: Using where; Using index; Using filesort

*************************** 4. row ***************************

id: 2

select_type: DERIVED

table: redirect

type: eq_ref

possible_keys: PRIMARY,rd_ns_title

key: PRIMARY

key_len: 4

ref: wikidatawiki.pagelinks.pl_from

rows: 1

Extra: Using where

4 rows in set (0.002 sec)

ERROR: No query specified

It tries to scan more than 200M rows! What makes it even more dangerous is that Special:WhatLinksHere is not among the special pages I'm planning to put a cap on (T297708).
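To pick out the problematic step from EXPLAIN output like the above without reading it by eye, something along these lines could work. It is only a sketch: the helper names and the `ROW_THRESHOLD` cut-off are hypothetical, the sample keeps just the fields the check needs, and `rows` is an optimizer estimate rather than a measured count.

```python
import re

# EXPLAIN rows copied from the output above, trimmed to the fields used here.
EXPLAIN_OUTPUT = """
*************************** 1. row ***************************
table: <derived2>
rows: 102
Extra: Using filesort
*************************** 2. row ***************************
table: page
rows: 1
Extra:
*************************** 3. row ***************************
table: pagelinks
rows: 205355406
Extra: Using where; Using index; Using filesort
*************************** 4. row ***************************
table: redirect
rows: 1
Extra: Using where
"""

ROW_THRESHOLD = 1_000_000  # hypothetical cut-off for "too many rows"


def parse_vertical(text):
    """Parse vertical (\\G-style) EXPLAIN output into one dict per row."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if re.fullmatch(r"\*+ \d+\. row \*+", line):
            rows.append({})  # a separator line starts a new row
        elif rows and ":" in line:
            key, _, value = line.partition(":")
            rows[-1][key.strip()] = value.strip()
    return rows


def risky_steps(rows, threshold=ROW_THRESHOLD):
    """Return steps that sort a very large estimated row count."""
    return [
        row for row in rows
        if int(row["rows"]) >= threshold and "Using filesort" in row.get("Extra", "")
    ]


flagged = risky_steps(parse_vertical(EXPLAIN_OUTPUT))
for step in flagged:
    print(step["table"], step["rows"])
```

On the output above, only the pagelinks step is flagged. The filesort on the 102-row derived table is harmless by comparison.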