Summary

The results from the Hovercards A/B test (details below) suggest that the data may be gathered incorrectly. In particular:

- link interactions (including hover-only interactions) decrease significantly for the Hovercards group (3.71 vs. 2.58)

- hover interactions are unexpectedly high for the control group: a large portion of users hover for more than 500ms

- very few link clicks occur within the first 250ms for the test group

Details

For background, read the following:

T139319: Analyze results of Hovercards A/B test - Hungarian

T140485: Schema:Popups logs "dwelledButAbandoned" and link open events at the same time

This spike is a request to examine the code for Hovercards and the accompanying instrumentation (Schema:Popups) to identify anything in the code that could help explain, at least in part, the seemingly unexpected data outcomes.

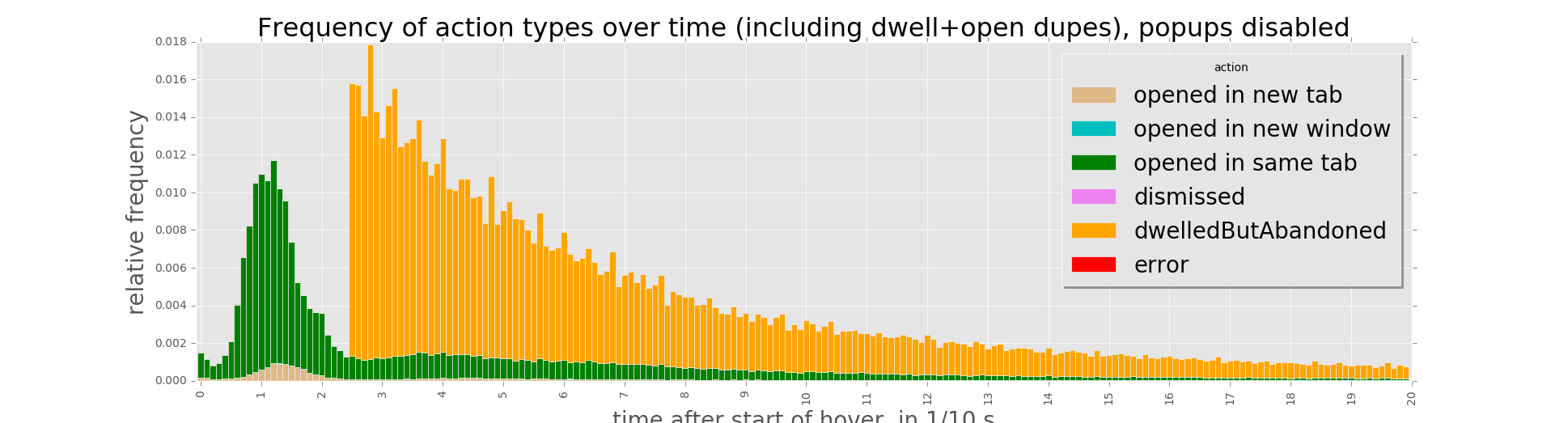

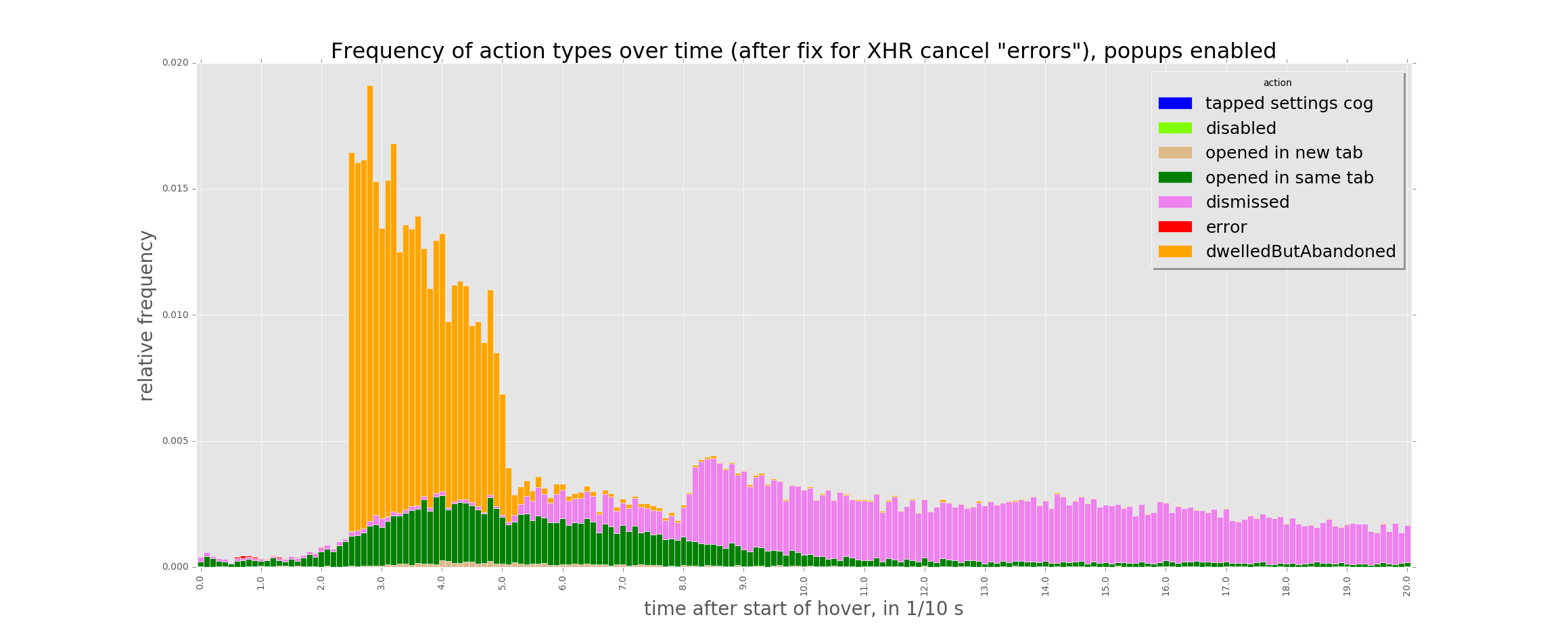

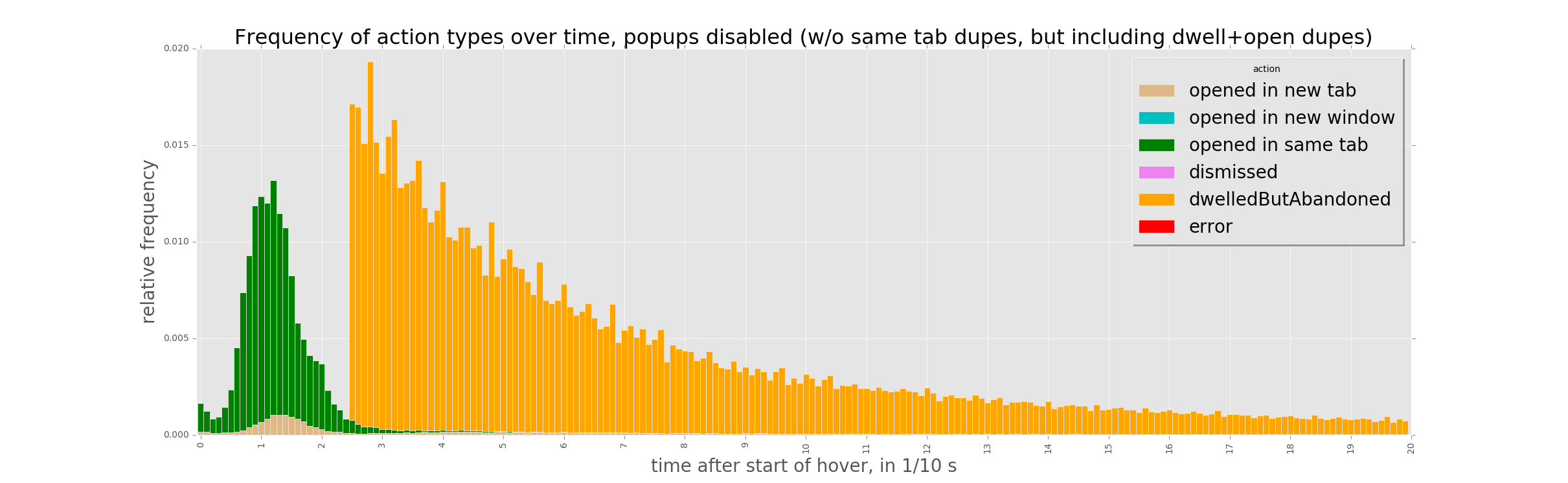

- In T139319#2475143, T139319#2481986, and T139319#2507540 it is noted that clicks for Hovercards OFF (the control group) are concentrated at lower interaction times than clicks for Hovercards ON (the test group), and furthermore that the percentage of early clicks is strikingly higher for the control group than for the test group. In short, we have so far failed to understand what happens to the green bump below 250ms in the control group when Hovercards are switched on. (@Tbayer still plans to deduplicate some events, which will slightly correct the yellow area in the first chart, but this is highly unlikely to influence this part of the data outcomes.) After various tests (T139319#2507540), it seems very unlikely that this difference is due to readers "learning" to change their behavior in the presence of Hovercards. Hence we are now focusing on the question: is there something in the code that would cause this? Is the curve merely shifted due to a race condition? Are events potentially being dropped anywhere? Is there something else at play?

- As noted in T139319#2507559, duplicate events are being observed in the database. The specific observation here is that click events are more likely to be duplicated in the test group's outlier usage scenarios, but duplicates have also been observed more generally. Is there anything that would explain these duplicate events? Is it possible to observe duplicated events at the client? Is there any way to guard against duplicate events? In which case(s) are duplicate events more likely to occur?

- Above 250ms as well, there appear to be fewer link interactions in general for the Hovercards ON case (test group) than for the Hovercards OFF case (control group). Is there a potential code reason why this might be the case? (n.b., this may be a behavioral difference attributable to the way users move the mouse around links, but that's a separate conversation.)
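The duplicate-event question and the early-click question interact: if early clicks are duplicated more often in one group, the share of clicks below 250ms shifts for that group. The following is a minimal sketch of how exact duplicate rows could be counted per group and removed before computing that share. It assumes a simplified, hypothetical event layout: the `PopupsEvent` fields, the `"click"` action label, and the (token, action) deduplication key are illustrative assumptions, not the actual Schema:Popups columns or the deduplication @Tbayer plans to run.

```python
from collections import Counter
from collections.abc import Iterable
from dataclasses import dataclass

EARLY_CLICK_MS = 250  # threshold discussed in T139319


@dataclass(frozen=True)
class PopupsEvent:
    # Hypothetical, simplified view of one logged row; field names are assumptions.
    group: str                   # "control" (Hovercards OFF) or "test" (Hovercards ON)
    interaction_token: str       # assumed identifier for one link interaction
    action: str                  # assumed label, e.g. "click" or "dwelledButAbandoned"
    total_interaction_time: int  # milliseconds


def dedup_key(e: PopupsEvent) -> tuple[str, str]:
    # Assumption: an exact duplicate repeats the same interaction token and action.
    return (e.interaction_token, e.action)


def deduplicate(events: Iterable[PopupsEvent]) -> list[PopupsEvent]:
    """Keep the first event for each key, preserving the original order."""
    seen: set[tuple[str, str]] = set()
    unique: list[PopupsEvent] = []
    for e in events:
        key = dedup_key(e)
        if key not in seen:
            seen.add(key)
            unique.append(e)
    return unique


def duplicate_counts(events: Iterable[PopupsEvent]) -> Counter:
    """Count repeated rows per group, to see where duplicates concentrate."""
    counts: Counter = Counter()
    seen: set[tuple[str, str]] = set()
    for e in events:
        key = dedup_key(e)
        if key in seen:
            counts[e.group] += 1
        else:
            seen.add(key)
    return counts


def early_click_share(events: Iterable[PopupsEvent], group: str,
                      threshold_ms: int = EARLY_CLICK_MS) -> float:
    """Fraction of a group's click events faster than threshold_ms."""
    clicks = [e for e in events if e.group == group and e.action == "click"]
    if not clicks:
        return 0.0
    early = sum(1 for e in clicks if e.total_interaction_time < threshold_ms)
    return early / len(clicks)


if __name__ == "__main__":
    # Made-up sample rows for illustration only, not real data.
    events = [
        PopupsEvent("control", "a1", "click", 120),
        PopupsEvent("control", "a1", "click", 120),  # duplicated row
        PopupsEvent("control", "a2", "click", 600),
        PopupsEvent("test", "b1", "click", 900),
        PopupsEvent("test", "b2", "dwelledButAbandoned", 700),
    ]
    clean = deduplicate(events)
    print(duplicate_counts(events))                         # Counter({'control': 1})
    print(round(early_click_share(events, "control"), 3))  # 0.667 with the duplicate
    print(early_click_share(clean, "control"))              # 0.5 after deduplication
    print(early_click_share(clean, "test"))                 # 0.0
```

In this toy sample a single duplicated early click raises the control group's early-click share from 0.5 to about 0.667, which is why deduplication should happen before comparing the curves. Whether (token, action) is the right key depends on what the real schema logs; T140485 suggests that some problematic rows are not exact duplicates at all.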

This spike is about looking at the client-side instrumentation for general code correctness, potential race conditions, duplicate events, and so forth. It's okay to describe potential user behaviors and how events may manifest (indeed, that's necessary!), but theories about user learning and behavioral changes have already been explored thoroughly.

This spike should result in a diagnosis of the outcomes pertaining to the questions above and, where issues can be rectified, in tasks opened for the fixes. Because any required fixes would ensure better data quality in the second A/B test, they should be scheduled for the very next sprint and, if time allows, pulled into this task's sprint. Ideally, a fix will be simple enough to make on the spot within the spike's time allotment.

Browsers to consider when trying to reproduce the events:

- Desktop Firefox

- Desktop Chrome

- Desktop Safari

- Internet Explorer 11

- Edge

LTR/RTL may be worth exploring, but is probably not a major factor (the results so far are for huwiki, which is LTR only). Traffic shaping can be handy too, whether via a router, in-browser throttling settings, on-device filtering, or a utility such as Link Conditioner, for example to surface timing-dependent problems such as race conditions.